Discovering Multi-Hardware Mobile Models via Architecture Search

Paper and Code

Aug 18, 2020

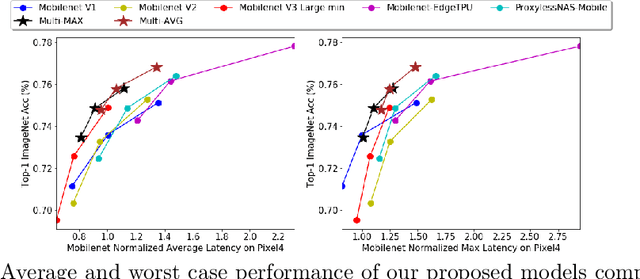

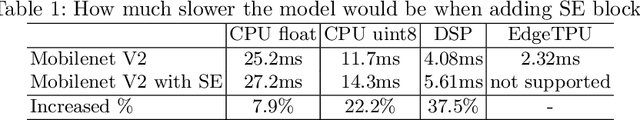

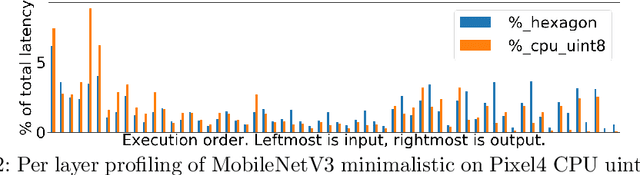

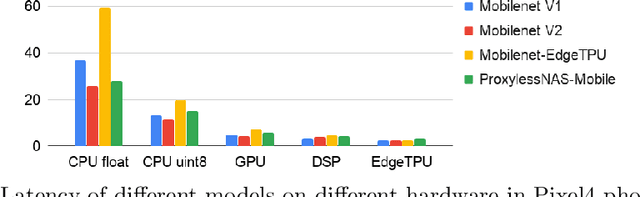

Developing efficient models for mobile phones and other on-device deployments has been a popular topic in both industry and academia. In such scenarios, it is often convenient to deploy the same model on a diverse set of hardware devices owned by different end users, which minimizes the costs of development, deployment and maintenance. Despite its importance, designing a single neural network that performs well on multiple devices is difficult because each device has its own strengths and restrictions: a model optimized for one device may not perform well on another. While most existing work proposes different models, each optimized for a single hardware platform, this paper is the first to explore the problem of finding a single model that performs well on multiple hardware platforms. Specifically, we leverage architecture search to find the best model: given a set of diverse hardware to optimize for, we first introduce a multi-hardware search space that is compatible with all examined hardware. Then, to measure the performance of a neural network over multiple hardware platforms, we propose metrics that characterize the overall latency performance in both the average-case and worst-case scenarios. With the multi-hardware search space and the new metrics applied to Pixel4 CPU, GPU, DSP and EdgeTPU, we found models that perform on par with or better than state-of-the-art (SOTA) models on each of our target accelerators and that generalize well to many untargeted hardware platforms. Compared with single-hardware searches, multi-hardware search gives a better trade-off between computation cost and model performance.
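The abstract does not spell out the proposed metrics, so the following is only a minimal sketch of what an average-case and a worst-case latency summary over several devices could look like. The function name `aggregate_latency`, the optional per-device normalization by a reference latency, and all numbers are assumptions made for illustration; they are not taken from the paper.

```python
from statistics import mean


def aggregate_latency(latencies_ms, reference_ms=None):
    """Summarize one model's latency over several devices.

    latencies_ms: dict mapping a device name to a measured latency in ms.
    reference_ms: optional dict mapping the same device names to a baseline
        latency. When given, each latency is divided by its baseline so that
        fast and slow devices are compared on a relative scale. This
        normalization is an assumption of the sketch, not the paper's method.

    Returns a tuple (average_case, worst_case).
    """
    if not latencies_ms:
        raise ValueError("need at least one device")
    if reference_ms is None:
        scores = list(latencies_ms.values())
    else:
        scores = [latencies_ms[d] / reference_ms[d] for d in latencies_ms]
    # Average case: mean over devices. Worst case: the slowest device.
    return mean(scores), max(scores)


# Hypothetical latencies (ms) for one candidate model; illustrative only.
example = {"cpu": 20.0, "gpu": 10.0, "dsp": 6.0, "edgetpu": 4.0}
avg_case, worst_case = aggregate_latency(example)

# Hypothetical per-device baselines used for relative scoring.
baseline = {"cpu": 40.0, "gpu": 10.0, "dsp": 3.0, "edgetpu": 4.0}
rel_avg, rel_worst = aggregate_latency(example, baseline)
```

In a search loop, either summary could serve as the latency term of the objective: the average-case value rewards models that are fast overall, while the worst-case value penalizes models that are slow on any single device.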
