DIGEST: Deeply supervIsed knowledGE tranSfer neTwork learning for brain tumor segmentation with incomplete multi-modal MRI scans


Nov 15, 2022

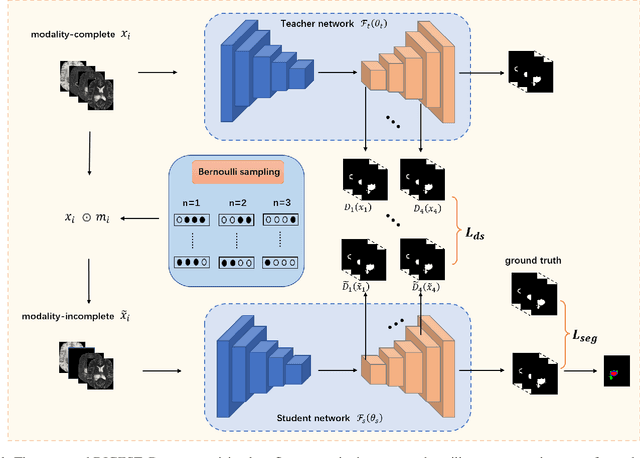

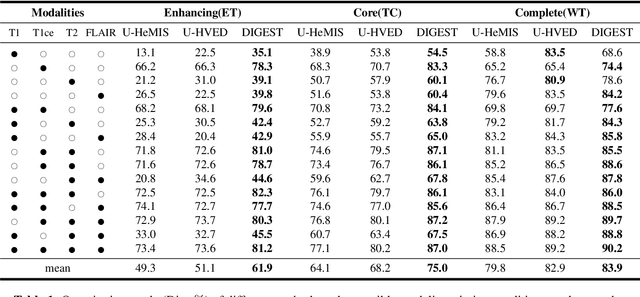

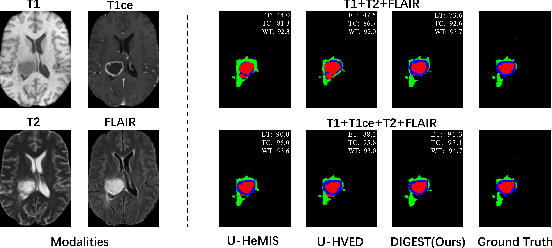

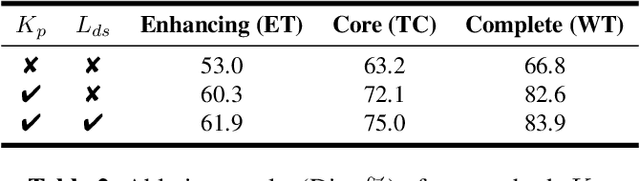

Brain tumor segmentation based on multi-modal magnetic resonance imaging (MRI) plays a pivotal role in assisting brain cancer diagnosis, treatment, and postoperative evaluation. Although existing automatic segmentation methods achieve encouraging performance, complete multi-modal MRI data are often unavailable in real-world clinical applications because of uncontrollable factors (e.g., different imaging protocols, data corruption, and patient condition limitations), which leads to a large performance drop in practice. In this work, we propose a Deeply supervIsed knowledGE tranSfer neTwork (DIGEST), which achieves accurate brain tumor segmentation under different modality-missing scenarios. Specifically, a knowledge transfer learning framework is constructed, enabling a student model to learn modality-shared semantic information from a teacher model pretrained on the complete multi-modal MRI data. To simulate all possible modality-missing conditions for the given multi-modal data, we generate incomplete multi-modal MRI samples by Bernoulli sampling. Finally, a deeply supervised knowledge transfer loss is designed to enforce consistency between the teacher and the student at different decoding stages, which helps extract inherent and effective modality representations. Experiments on the BraTS 2020 dataset demonstrate that our method achieves promising results on the incomplete multi-modal MR image segmentation task.
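
The two mechanisms named in the abstract, Bernoulli sampling of missing modalities and a transfer loss applied at several decoding stages, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the keep probability of 0.5, zero-filling of dropped channels, the resampling of an all-dropped draw, and the use of a mean squared error between teacher and student features are all assumptions made for illustration. The abstract does not specify any of these details.

```python
import numpy as np

# The four MRI sequences provided in BraTS.
MODALITIES = ("FLAIR", "T1", "T1ce", "T2")


def sample_modality_mask(rng, n_modalities=len(MODALITIES), p_keep=0.5):
    """Draw one Bernoulli keep/drop flag per modality.

    Assumption: a draw that drops every modality is rejected and resampled,
    so at least one modality is always available.
    """
    while True:
        mask = rng.random(n_modalities) < p_keep
        if mask.any():
            return mask


def drop_modalities(volume, mask):
    """Zero out the channels of a (C, D, H, W) volume whose flag is False.

    Assumption: missing modalities are represented by zero-filled channels.
    """
    return volume * mask.astype(volume.dtype)[:, None, None, None]


def deep_transfer_loss(teacher_feats, student_feats, weights=None):
    """Weighted sum of per-stage mean squared errors.

    Assumption: MSE stands in for the paper's transfer loss; each list entry
    holds the feature map of one decoding stage.
    """
    if weights is None:
        weights = [1.0] * len(teacher_feats)
    total = 0.0
    for w, t, s in zip(weights, teacher_feats, student_feats):
        total += w * float(np.mean((t - s) ** 2))
    return total


# Usage: simulate one incomplete sample from a complete four-modality volume.
rng = np.random.default_rng(0)
full = rng.standard_normal((4, 8, 8, 8)).astype(np.float32)
mask = sample_modality_mask(rng)
partial = drop_modalities(full, mask)
```

In a training loop of this kind, the teacher would receive `full`, the student would receive `partial`, and `deep_transfer_loss` would be evaluated on the decoder features of both networks alongside the usual segmentation loss. With four modalities there are 15 non-empty subsets, so repeated sampling covers every modality-missing scenario over the course of training.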
