Diagnostic Curves for Black Box Models

Dec 02, 2019

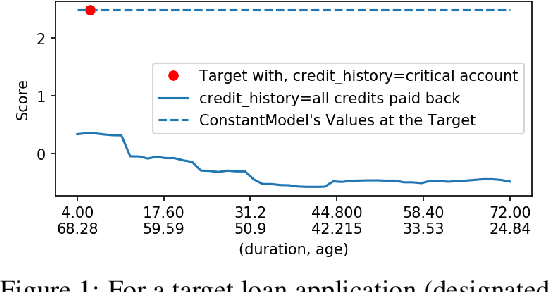

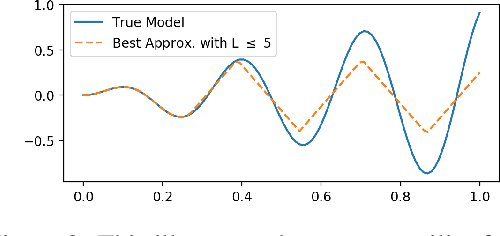

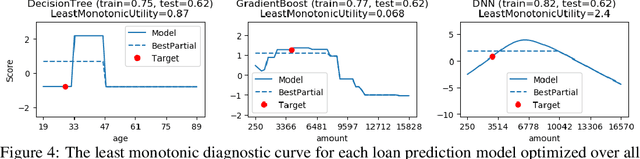

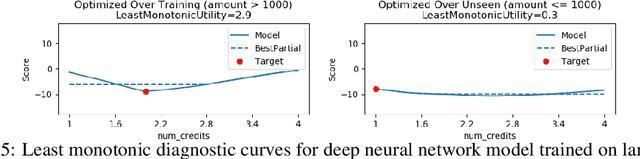

In safety-critical applications of machine learning, it is often necessary to look beyond standard metrics such as test accuracy in order to validate qualitative properties of a model, such as monotonicity with respect to a feature or combination of features, the absence of undesirable changes or oscillations in the response, and the absence of differences in outcomes (e.g. discrimination) for a protected class. To address this need, we propose a framework for approximately validating (or invalidating) such properties of a black box model by finding a univariate diagnostic curve in the input space whose output maximally violates a given property. These diagnostic curves show the exact value of the model along the curve and can be displayed as a simple and intuitive line graph. We demonstrate the usefulness of these diagnostic curves across multiple use cases and datasets, including selecting between two models and understanding out-of-sample behavior.
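To make the idea of a diagnostic curve concrete, the following is a minimal sketch and not the paper's algorithm. It assumes a hypothetical two-feature black box `model`, restricts curves to straight segments along feature 0, scores each curve by its total decrease (a simple stand-in for a monotonicity violation), and uses plain random search in place of whatever optimizer the paper employs. All function names and parameters here are illustrative assumptions.

```python
import math
import random


def model(x):
    """Hypothetical black box; the sine term can make it decrease in x[0]."""
    return x[0] + 0.5 * math.sin(3.0 * x[0]) * x[1]


def curve_values(f, start, end, steps=50):
    """Evaluate f at evenly spaced points on the segment from start to end."""
    values = []
    for i in range(steps + 1):
        t = i / steps
        point = [a + t * (b - a) for a, b in zip(start, end)]
        values.append(f(point))
    return values


def monotonicity_violation(values):
    """Total decrease along the curve; 0.0 means the output never decreases."""
    return sum(max(0.0, prev - cur) for prev, cur in zip(values, values[1:]))


def worst_curve(f, trials=200, seed=0):
    """Random search (an assumption, not the paper's optimizer) over segments
    that sweep feature 0 from 0 to 3 while holding feature 1 fixed."""
    rng = random.Random(seed)
    best = (-1.0, None, None)
    for _ in range(trials):
        x1 = rng.uniform(0.0, 2.0)  # fixed value of the second feature
        values = curve_values(f, [0.0, x1], [3.0, x1])
        violation = monotonicity_violation(values)
        if violation > best[0]:
            best = (violation, x1, values)
    return best


if __name__ == "__main__":
    violation, x1, values = worst_curve(model)
    print(f"largest violation {violation:.3f} found at x1 = {x1:.3f}")
```

The list `values` returned for the worst curve is exactly what would be drawn as the line graph: the model's output at each point along the curve. A violation of zero over all sampled curves only suggests, and does not prove, that the property holds.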
