DeepEMplanner: An End-to-End EM Motion Planner with Iterative Interactions

Paper and Code

Nov 29, 2023

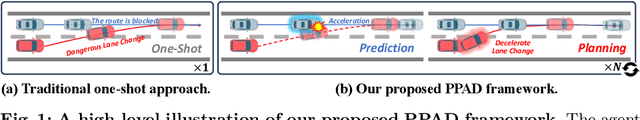

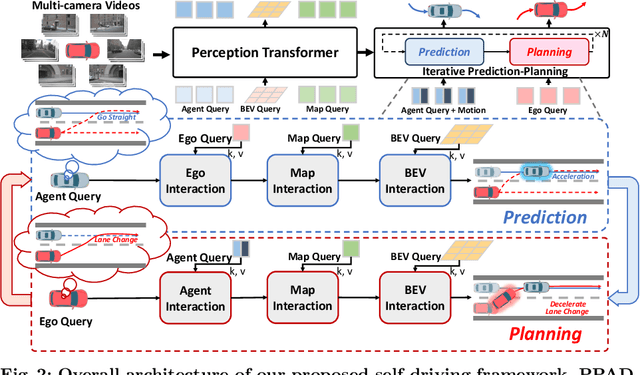

Motion planning is a computational problem that finds a sequence of valid trajectories, often based on surrounding agents' forecasting, environmental understanding, and historical and future contexts. It can also be viewed as a game in which agents continuously plan their next move according to other agents' intentions and the encountering environment, further achieving their ultimate goals through incremental actions. To model the dynamic planning and interaction process, we propose a novel framework, DeepEMplanner, which takes the stepwise interaction into account for fine-grained behavior learning. The ego vehicle maximizes each step motion to reach its eventual driving outcome based on the stepwise expectation from agents and its upcoming road conditions. On the other hand, the agents also follow the same philosophy to maximize their stepwise behavior under the encountering environment and the expectations from ego and other agents. Our DeepEMplanner models the interactions among ego, agents, and the dynamic environment in an autoregressive manner by interleaving the Expectation and Maximization processes. Further, we design ego-to-agents, ego-to-map, and ego-to-BEV interaction mechanisms with hierarchical dynamic key objects attention to better model the interactions. Experiments on the nuScenes benchmark show that our approach achieves state-of-the-art results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge