Deep Residual Learning for Instrument Segmentation in Robotic Surgery


Mar 24, 2017

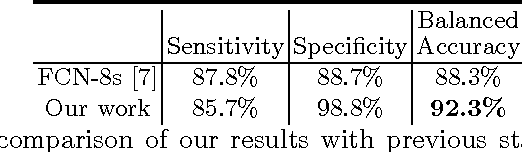

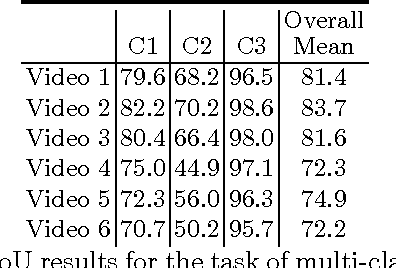

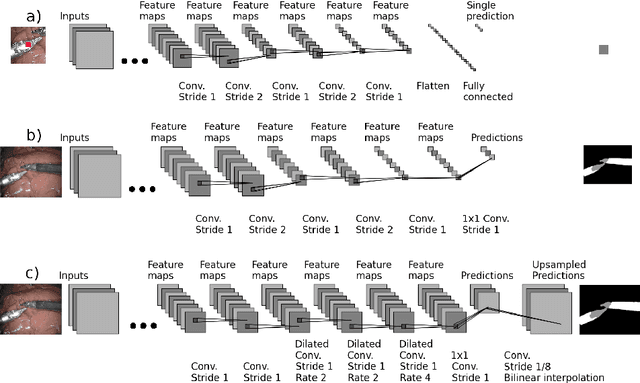

Detection, tracking, and pose estimation of surgical instruments are crucial tasks for computer assistance during minimally invasive robotic surgery. In the majority of cases, the first step is the automatic segmentation of surgical tools. Prior work has focused on binary segmentation, where the objective is to label every pixel in an image as tool or background. We improve upon previous work in two major ways. First, we leverage recent techniques such as deep residual learning and dilated convolutions to advance binary-segmentation performance. Second, we extend the approach to multi-class segmentation, which lets us segment different parts of the tool, in addition to background. We demonstrate the performance of this method on the MICCAI Endoscopic Vision Challenge Robotic Instruments dataset.
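To make the two building blocks named in the abstract concrete, here is a minimal pure-Python sketch of a dilated convolution and an identity-shortcut residual step. It is an illustration under stated assumptions, not the authors' architecture: the function names (`dilated_conv2d`, `residual_block`, `receptive_field`), the single-channel input, the zero padding, and the single-convolution residual branch are all simplifications chosen for this example.

```python
def dilated_conv2d(image, kernel, dilation=1):
    """Same-size 2D cross-correlation with a dilated square kernel.

    Assumption: single channel, odd kernel size, zero padding at the borders.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    c = k // 2  # index of the kernel centre
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    # Dilation spaces the kernel taps `dilation` pixels apart.
                    yy = y + (i - c) * dilation
                    xx = x + (j - c) * dilation
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def residual_block(image, kernel, dilation=1):
    """Simplified residual step: output = input + ReLU(conv(input)).

    Assumption: a real residual block stacks several convolutions and
    normalization layers; only the identity shortcut is illustrated here.
    """
    conv = dilated_conv2d(image, kernel, dilation)
    return [
        [image[y][x] + max(0.0, conv[y][x]) for x in range(len(image[0]))]
        for y in range(len(image))
    ]


def receptive_field(kernel_size, dilation):
    """Spatial extent covered by one dilated kernel along one axis."""
    return dilation * (kernel_size - 1) + 1


if __name__ == "__main__":
    # A 5x5 image with a single bright pixel in the centre.
    image = [[0] * 5 for _ in range(5)]
    image[2][2] = 1
    ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

    print(receptive_field(3, 1), receptive_field(3, 2))
    print(dilated_conv2d(image, ones, dilation=1)[0][0])
    print(dilated_conv2d(image, ones, dilation=2)[0][0])
```

With dilation 2, the 3x3 kernel spans a 5x5 window, so the corner output pixel already "sees" the centre pixel without any downsampling. This is the property that makes dilated convolutions attractive for dense per-pixel prediction such as segmentation.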
