Deep Action- and Context-Aware Sequence Learning for Activity Recognition and Anticipation

Paper and Code

Nov 18, 2016

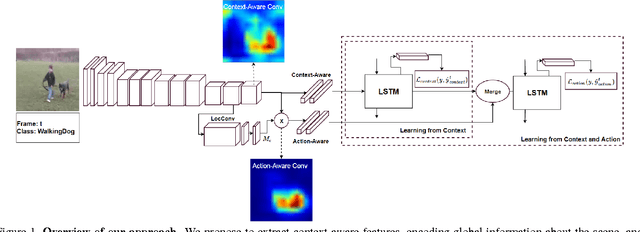

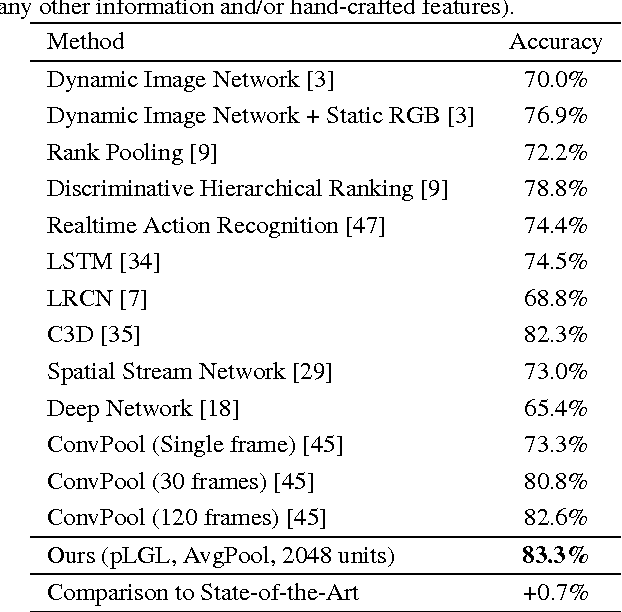

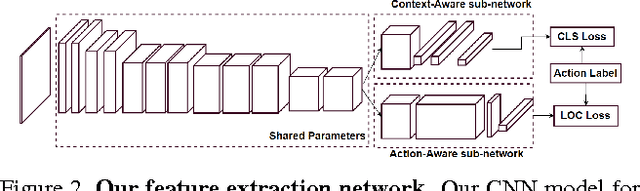

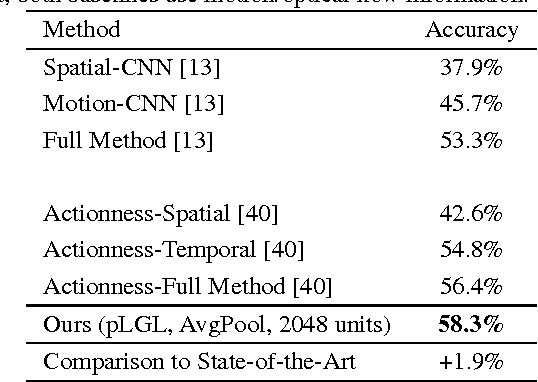

Action recognition and anticipation are key to the success of many computer vision applications. Existing methods can roughly be grouped into those that extract global, context-aware representations of the entire image or sequence, and those that focus on the regions where the action occurs. While the former may suffer because context is not always reliable, the latter completely ignore this source of information, which can nonetheless be helpful in many situations. In this paper, we aim to get the best of both worlds by developing an approach that leverages both context-aware and action-aware features. At the core of our method lies a novel multi-stage recurrent architecture that allows us to effectively combine these two sources of information throughout a video. This architecture first exploits the global, context-aware features, and then merges the resulting representation with the localized, action-aware ones. Our experiments on standard datasets demonstrate the benefits of our approach over methods that use each information type separately. For both action recognition and anticipation, we outperform the state-of-the-art methods that, like ours, rely only on RGB frames as input.
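
The two-stage idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes per-frame context-aware and action-aware feature vectors are already available, substitutes a plain tanh recurrent cell for whatever recurrent unit the paper uses, and uses random, untrained weights. All names (`RecurrentCell`, `two_stage_forward`, the dimensions) are hypothetical and chosen only for illustration.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Random weight matrix (untrained; for illustration only)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Matrix-vector product on plain Python lists."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class RecurrentCell:
    """Plain tanh recurrent cell: h_t = tanh(W_in x_t + W_rec h_{t-1}).

    Assumption: a simplified stand-in for the paper's recurrent unit.
    """

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        self.w_in = make_matrix(hidden_size, input_size, rng)
        self.w_rec = make_matrix(hidden_size, hidden_size, rng)

    def step(self, x, h):
        from_input = matvec(self.w_in, x)
        from_state = matvec(self.w_rec, h)
        return [math.tanh(a + b) for a, b in zip(from_input, from_state)]


def two_stage_forward(context_feats, action_feats, stage1, stage2, w_out):
    """Stage 1 reads context features; stage 2 merges stage 1's state
    with the action-aware features and emits class probabilities per frame."""
    h1 = [0.0] * stage1.hidden_size
    h2 = [0.0] * stage2.hidden_size
    per_frame_probs = []
    for ctx, act in zip(context_feats, action_feats):
        h1 = stage1.step(ctx, h1)          # global, context-aware stage
        h2 = stage2.step(h1 + act, h2)     # concatenate, then second stage
        per_frame_probs.append(softmax(matvec(w_out, h2)))
    return per_frame_probs


# Hypothetical sizes: 5 frames, 8-d context, 6-d action features, 3 classes.
rng = random.Random(0)
T, ctx_dim, act_dim, hidden, num_classes = 5, 8, 6, 4, 3
context_feats = [[rng.random() for _ in range(ctx_dim)] for _ in range(T)]
action_feats = [[rng.random() for _ in range(act_dim)] for _ in range(T)]

stage1 = RecurrentCell(ctx_dim, hidden, rng)
stage2 = RecurrentCell(hidden + act_dim, hidden, rng)
w_out = make_matrix(num_classes, hidden, rng)

probs = two_stage_forward(context_feats, action_feats, stage1, stage2, w_out)
```

Emitting a prediction at every frame is a design choice made here so that the same sketch covers both settings: the last frame's output corresponds to recognition from the full sequence, while earlier outputs correspond to anticipation from a partial one. How the paper itself produces and trains these predictions is not specified in the abstract.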
