DECSTR: Learning Goal-Directed Abstract Behaviors using Pre-Verbal Spatial Predicates in Intrinsically Motivated Agents


Jun 12, 2020

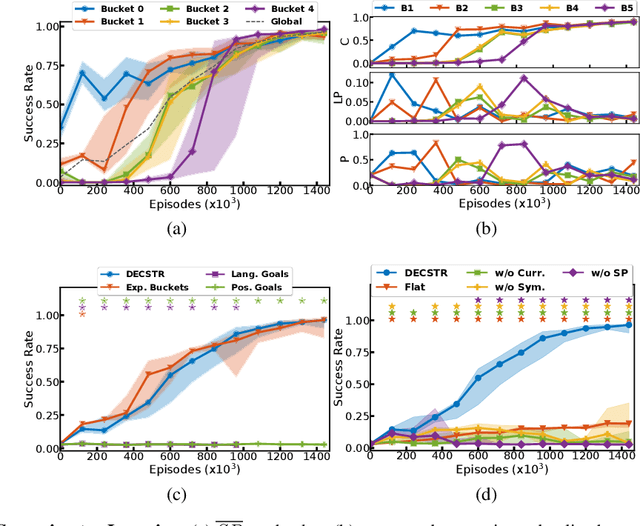

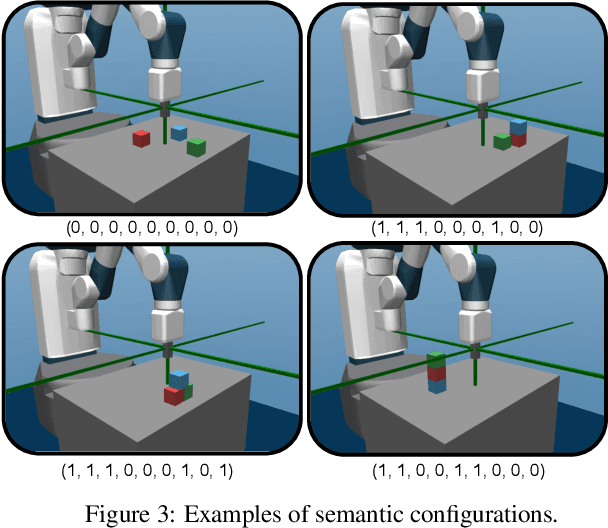

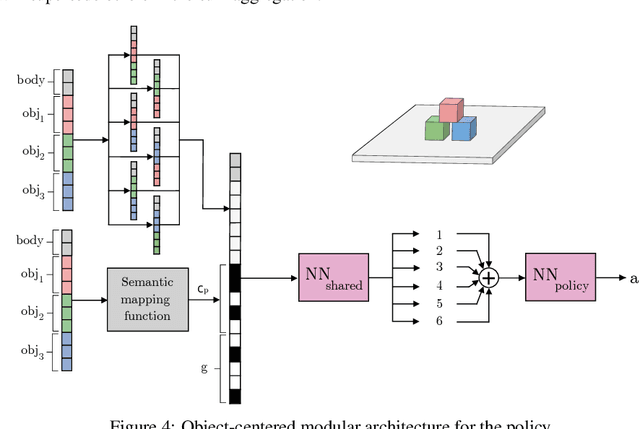

Intrinsically motivated agents freely explore their environment and set their own goals. Such goals are traditionally represented as specific states, but recent work has introduced language as a means of abstraction. Language can, for example, represent goals as sets of general properties that surrounding objects should satisfy. However, language-conditioned agents are trained to understand language and to act at the same time, which contrasts with how children learn: infants demonstrate goal-oriented behaviors and abstract spatial concepts very early in their development, before language mastery. Guided by these findings from developmental psychology, we introduce a high-level state representation based on natural semantic predicates that describe spatial relations between objects and that are known to be present early in infants. In a robotic manipulation environment, our DECSTR system explores this representation space by manipulating objects, and efficiently learns to achieve any reachable configuration within it. It does so by leveraging an object-centered modular architecture, a symmetry inductive bias, and a new form of automatic curriculum learning for goal selection and policy learning. As with children, language acquisition takes place in a second phase, independently of goal-oriented sensorimotor learning. This is done via a new goal generation module, conditioned on instructions describing expected transformations in object relations. We present ablation studies for each component and highlight several advantages of targeting abstract goals over specific ones. We further show that using this intermediate representation enables efficient language grounding by evaluating agents on sequences of language instructions and their logical combinations.
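
To make the idea of a predicate-based state representation concrete, the sketch below maps object positions to a binary vector of spatial relations. It is an illustration only, not the authors' implementation: the predicate names `close` and `above`, the distance thresholds, and the function `semantic_config` are assumptions that do not come from the abstract. The symmetric predicate is evaluated once per unordered pair and the asymmetric one once per ordered pair, which is one simple way to reflect the symmetry mentioned above.

```python
import math
from itertools import combinations, permutations


def close(a, b, threshold=0.1):
    """Symmetric predicate (assumed): the two objects are within `threshold` of each other."""
    return math.dist(a, b) < threshold


def above(a, b, xy_tol=0.03, min_dz=0.02):
    """Asymmetric predicate (assumed): `a` sits on top of `b`."""
    dx, dy, dz = (a[i] - b[i] for i in range(3))
    return math.hypot(dx, dy) < xy_tol and dz > min_dz


def semantic_config(positions):
    """Map {name: (x, y, z)} to a tuple of 0/1 predicate values.

    Symmetric predicates are evaluated on unordered pairs,
    asymmetric predicates on ordered pairs.
    """
    names = sorted(positions)
    bits = [int(close(positions[i], positions[j])) for i, j in combinations(names, 2)]
    bits += [int(above(positions[i], positions[j])) for i, j in permutations(names, 2)]
    return tuple(bits)


# Hypothetical scene: block "a" stacked on block "b", block "c" far away.
scene = {"a": (0.0, 0.0, 0.05), "b": (0.0, 0.0, 0.0), "c": (0.5, 0.5, 0.0)}
config = semantic_config(scene)
```

With three objects this yields a 9-dimensional binary vector (3 unordered pairs plus 6 ordered pairs). An abstract goal is then a target value of this vector, and many distinct physical arrangements satisfy the same goal.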
