Decomposing Word Embedding with the Capsule Network

Paper and Code

Apr 07, 2020

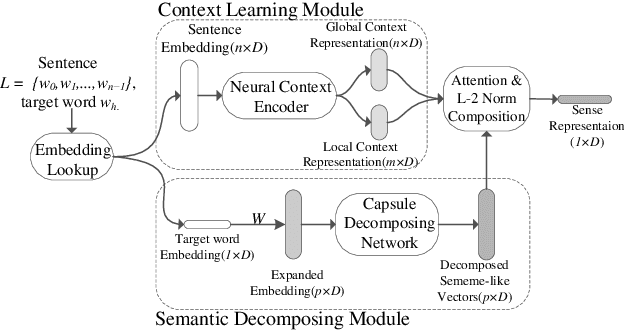

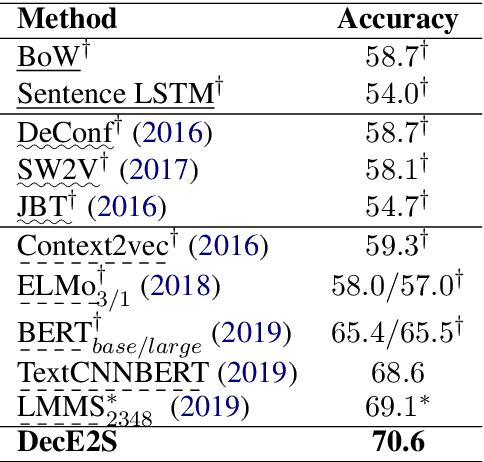

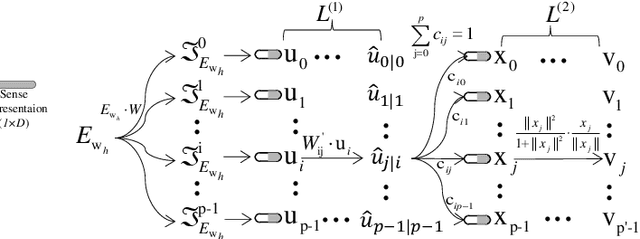

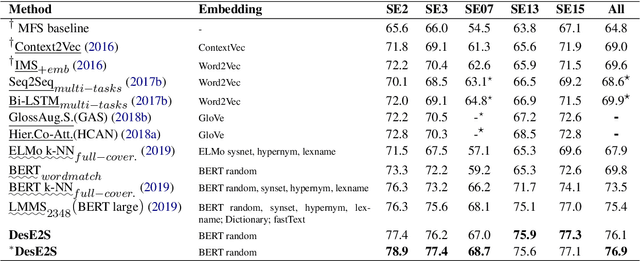

Multi-sense word embeddings are a promising approach to word sense learning. Nevertheless, building a large-scale training corpus and learning appropriate word senses remain open issues. In this paper, we propose a method for Decomposing the word Embedding into context-specific Sense representation, called DecE2S. First, the unsupervised polysemy embedding is fed into a capsule network to produce multiple sememe-like vectors. Second, using attention operations, DecE2S integrates the word context to produce the context-specific sense vector. To train DecE2S, we design a word matching training method for learning the context-specific sense representation. DecE2S was evaluated experimentally on two sense learning tasks: word in context and word sense disambiguation. Results on two public corpora, Word-in-Context and English all-words Word Sense Disambiguation, show that the DecE2S model achieves a new state of the art on both tasks.
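The two steps described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not specify the routing scheme, the attention form, or any dimensions, so the sketch assumes routing-by-agreement as commonly used in capsule networks and a scaled dot-product attention over the resulting capsules. The function names (`decompose`, `context_sense`), the weight tensor `W`, and all shapes are hypothetical, and the weights are random rather than trained.

```python
import numpy as np


def squash(v, axis=-1, eps=1e-9):
    """Capsule squashing: keeps the direction, maps the norm into [0, 1)."""
    sq = np.sum(v * v, axis=axis, keepdims=True)
    return (sq / (1.0 + sq)) * v / np.sqrt(sq + eps)


def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def decompose(embedding, W, n_iters=3):
    """Route one word embedding into K capsule vectors (assumed design).

    embedding: shape (n_in * d_in,), split into n_in input capsules.
    W: shape (n_in, K, d_in, d_out), hypothetical transformation weights.
    Returns an array of shape (K, d_out), one vector per output capsule.
    """
    n_in, K, d_in, d_out = W.shape
    u = embedding.reshape(n_in, d_in)
    u_hat = np.einsum("id,ikdo->iko", u, W)      # predictions, (n_in, K, d_out)
    b = np.zeros((n_in, K))                       # routing logits
    for _ in range(n_iters):
        c = softmax(b, axis=1)                    # coupling over output capsules
        s = np.einsum("ik,iko->ko", c, u_hat)     # weighted sum per capsule
        v = squash(s)
        b = b + np.einsum("iko,ko->ik", u_hat, v) # agreement update
    return v


def context_sense(capsules, context):
    """Attend over the capsules with a context vector of shape (d_out,)."""
    scores = capsules @ context / np.sqrt(capsules.shape[1])
    weights = softmax(scores)
    return weights @ capsules, weights


# Usage with random, untrained values (illustrative shapes only).
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(4, 3, 2, 5))      # n_in=4, K=3, d_in=2, d_out=5
word_vec = rng.normal(size=8)
ctx_vec = rng.normal(size=5)
capsules = decompose(word_vec, W)
sense_vec, attn = context_sense(capsules, ctx_vec)
```

Here `capsules` plays the role of the sememe-like vectors and `sense_vec` the context-specific sense vector. In the paper these would be learned with the word matching training objective, which this sketch omits.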
