Decentralized Visual-Inertial-UWB Fusion for Relative State Estimation of Aerial Swarm

Mar 11, 2020

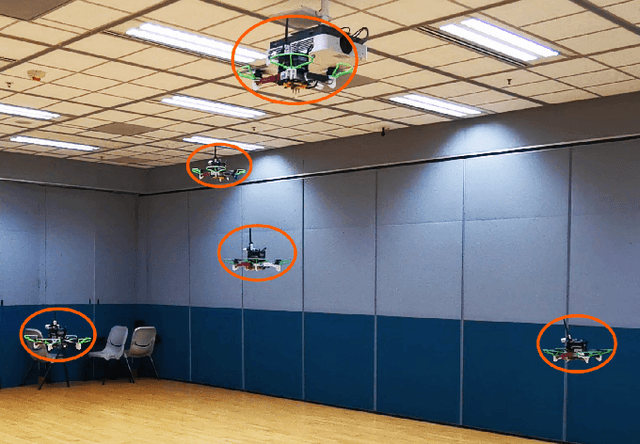

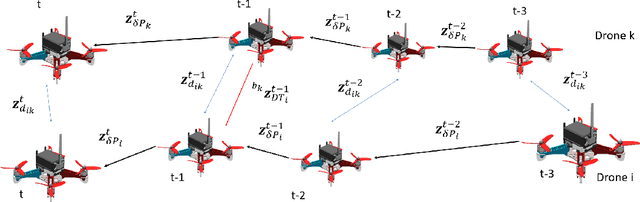

Collaboration among unmanned aerial vehicles (UAVs) has become a popular research topic because of its practicality in many scenarios. A group of collaborating UAVs, also known as an aerial swarm, is a highly complex system that still lacks a state-of-the-art decentralized relative state estimation method. In this paper, we present a novel, fully decentralized visual-inertial-UWB fusion framework for relative state estimation and demonstrate its practicality through extensive aerial swarm flight experiments. Comparison with ground-truth data from a motion capture system shows centimeter-level precision, which outperforms all Ultra-WideBand (UWB) based and even vision-based methods. The system is not limited by the camera's field of view (FoV) or by the Global Positioning System (GPS). Given this and its estimation consistency, we believe the proposed relative state estimation framework has the potential to be widely adopted in aerial swarm applications across different scenarios and scales.
