Data Poisoning Attacks on EEG Signal-based Risk Assessment Systems


Feb 08, 2023

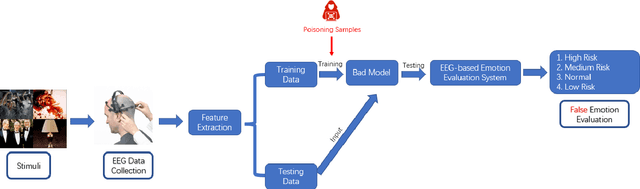

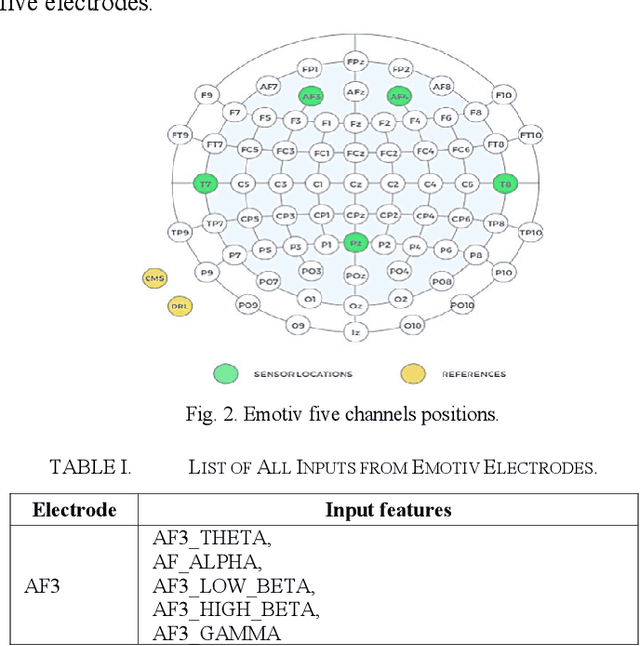

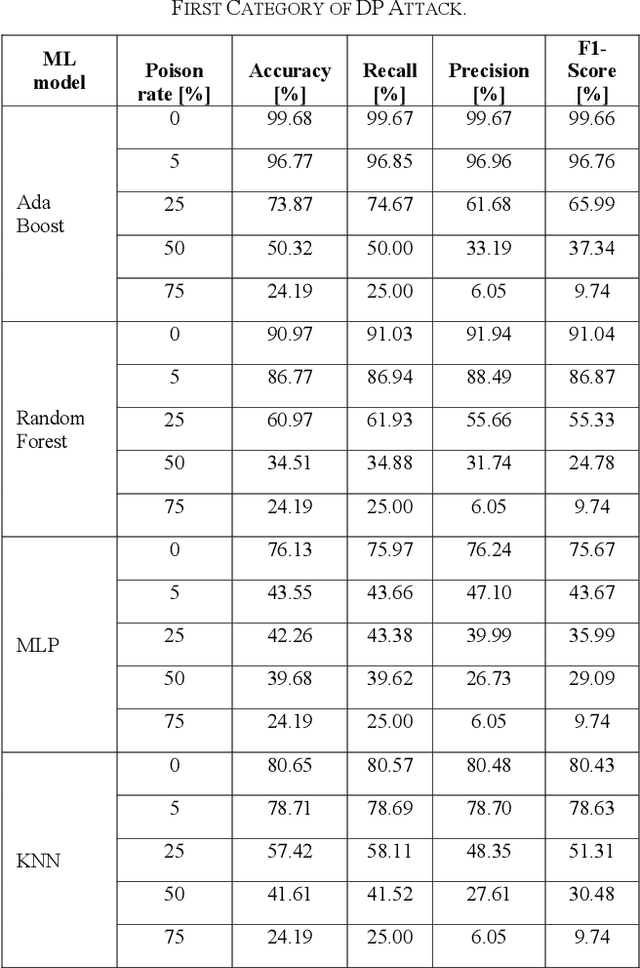

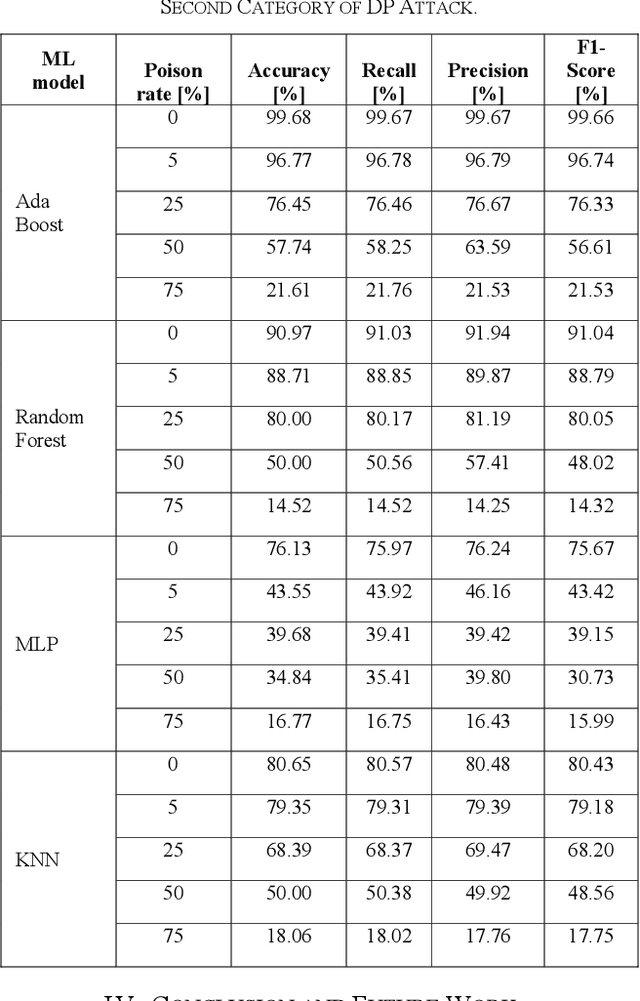

Industrial insider risk assessment using electroencephalogram (EEG) signals has attracted sustained research attention. However, EEG signal-based risk assessment systems, which evaluate human emotional states, have been shown to be vulnerable to data poisoning attacks. This paper takes the attacker's perspective and studies data poisoning attacks that flip labels during the training stage of the machine learning models on which these systems rely. It proposes two categories of label-flipping methods to attack several machine learning classifiers, namely Adaptive Boosting (AdaBoost), Multilayer Perceptron (MLP), Random Forest, and K-Nearest Neighbors (KNN), each used to classify four human emotions from EEG signals. The attacks aim to degrade the classification performance of these models. The experimental results show that the proposed data poisoning attacks are effective regardless of the model, although different models show different resilience to them.
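To make the idea of label flipping concrete, the following is a minimal sketch of a generic random label-flipping attack on a four-class training set. It is not the paper's method: the abstract does not specify its two categories of label-flipping, so the function `flip_labels`, the `flip_rate` parameter, the synthetic two-dimensional clusters standing in for EEG features, and the small hand-written KNN classifier are all assumptions made for illustration.

```python
import random


def flip_labels(labels, flip_rate, num_classes=4, seed=0):
    """Return a copy of `labels` in which a fraction `flip_rate` of the
    entries is reassigned to a different, randomly chosen class.
    (Hypothetical helper; a generic random flip, not the paper's scheme.)"""
    rng = random.Random(seed)
    poisoned = list(labels)
    n_flip = int(round(flip_rate * len(poisoned)))
    for i in rng.sample(range(len(poisoned)), n_flip):
        # Choose any class other than the current label.
        others = [c for c in range(num_classes) if c != poisoned[i]]
        poisoned[i] = rng.choice(others)
    return poisoned


def knn_predict(train_x, train_y, x, k=5):
    """Majority vote among the k nearest training points (squared Euclidean)."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], x)),
    )
    votes = [train_y[i] for i in order[:k]]
    return max(set(votes), key=votes.count)


def accuracy(train_x, train_y, test_x, test_y):
    hits = sum(knn_predict(train_x, train_y, x) == y for x, y in zip(test_x, test_y))
    return hits / len(test_y)


def make_data(n_per_class, rng):
    """Synthetic stand-in for EEG features: four well-separated 2-D clusters."""
    centers = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0), (5.0, 5.0)]
    xs, ys = [], []
    for label, (cx, cy) in enumerate(centers):
        for _ in range(n_per_class):
            xs.append((rng.gauss(cx, 0.5), rng.gauss(cy, 0.5)))
            ys.append(label)
    return xs, ys


data_rng = random.Random(42)
train_x, train_y = make_data(50, data_rng)   # 200 training samples
test_x, test_y = make_data(25, data_rng)     # 100 test samples

# The attacker corrupts only the training labels; features and test data are untouched.
poisoned_y = flip_labels(train_y, flip_rate=0.4)

clean_acc = accuracy(train_x, train_y, test_x, test_y)
poisoned_acc = accuracy(train_x, poisoned_y, test_x, test_y)
print(f"clean training labels:    {clean_acc:.2f}")
print(f"poisoned training labels: {poisoned_acc:.2f}")
```

Comparing the two printed accuracies for several values of `flip_rate`, and for different classifiers in place of the toy KNN, would give a rough picture of how resilience to poisoning can differ between models.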
