Data-driven generation of plausible tissue geometries for realistic photoacoustic image synthesis

Paper and Code

Mar 29, 2021

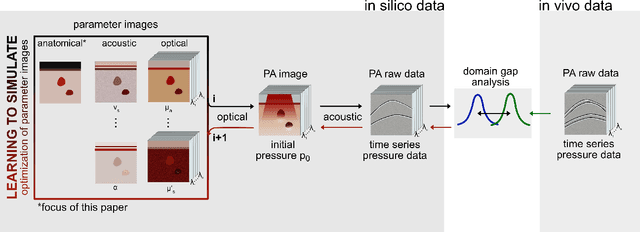

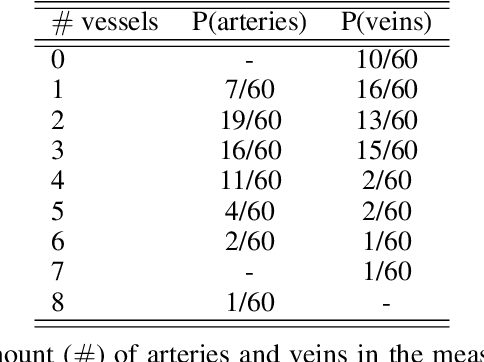

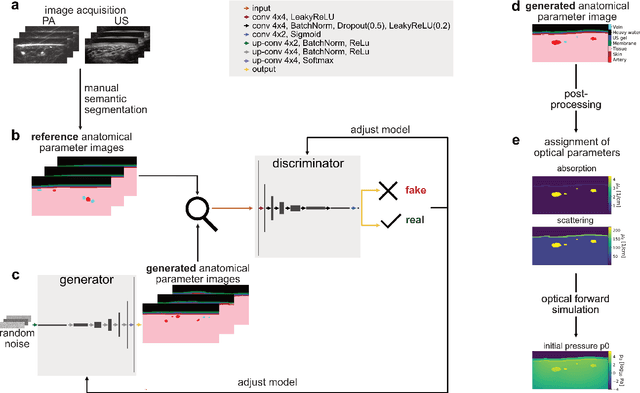

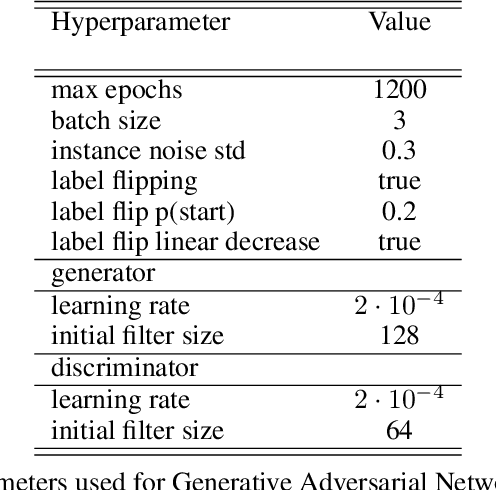

Photoacoustic tomography (PAT) has the potential to recover morphological and functional tissue properties, such as blood oxygenation, with high spatial resolution and in an interventional setting. However, decades of research on the inverse problem of recovering clinically relevant tissue properties from spectral measurements have failed to produce solutions that can quantify tissue parameters robustly in a clinical setting. Previous attempts to address the limitations of model-based approaches with machine learning were hampered by the absence of labeled reference data needed for supervised algorithm training. While this bottleneck has been tackled by simulating training data, the domain gap between real and simulated images remains a major unsolved challenge. As a first step toward closing this gap, we propose a novel approach to PAT data simulation, which we refer to as "learning to simulate". Our approach subdivides the challenge of generating plausible simulations into two disjoint problems: (1) probabilistic generation of realistic tissue morphology, represented by semantic segmentation maps, and (2) pixel-wise assignment of corresponding optical and acoustic properties. In the present work, we focus on the first challenge. Specifically, we leverage Generative Adversarial Networks (GANs) trained on semantically annotated medical imaging data to generate plausible tissue geometries. According to an initial in silico feasibility study, our approach is well suited for contributing to realistic PAT image synthesis and could thus become a fundamental step for deep learning-based quantitative PAT.
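The two-stage split described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the class labels, the property names, and every numeric value are hypothetical placeholders, and `sample_segmentation` is only a stand-in for a trained GAN generator. The sketch shows how step (2) reduces to a per-pixel lookup once step (1) has produced a semantic segmentation map.

```python
import random

# Hypothetical tissue classes; the label set used in the paper is not stated here.
BACKGROUND, TISSUE, VESSEL = 0, 1, 2

# Placeholder property values in arbitrary units, for illustration only.
# They are NOT taken from the paper or from any measurement.
PROPERTY_TABLE = {
    BACKGROUND: {"absorption": 0.0, "speed_of_sound": 1.0},
    TISSUE: {"absorption": 0.1, "speed_of_sound": 1.5},
    VESSEL: {"absorption": 2.0, "speed_of_sound": 1.6},
}


def sample_segmentation(height, width, rng):
    """Stand-in for step (1); a trained GAN generator would be called here.

    Returns a label map with a background top row, tissue below it,
    and a single randomly placed vessel pixel. Requires height >= 2.
    """
    seg = [[BACKGROUND] * width]
    seg += [[TISSUE] * width for _ in range(height - 1)]
    row = rng.randrange(1, height)  # never overwrite the background row
    col = rng.randrange(width)
    seg[row][col] = VESSEL
    return seg


def assign_properties(seg, table, name):
    """Step (2): map every pixel's class label to one physical property."""
    return [[table[label][name] for label in row] for row in seg]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    seg = sample_segmentation(4, 5, rng)
    absorption = assign_properties(seg, PROPERTY_TABLE, "absorption")
    for row in absorption:
        print(row)
```

Keeping the geometry and the property table separate mirrors the decomposition in the abstract: the same sampled geometry can be paired with different property assignments without regenerating the segmentation map.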
