DAPPER: Performance Estimation of Domain Adaptation in Mobile Sensing

Paper and Code

Nov 22, 2021

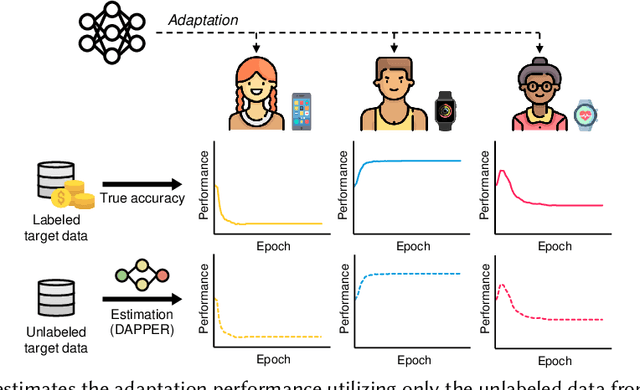

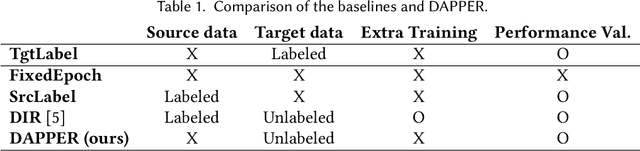

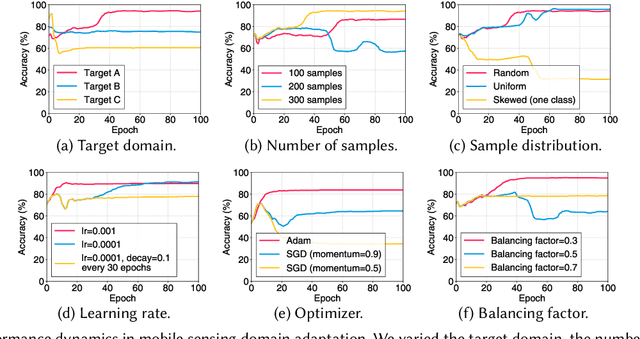

Many applications have emerged that use the sensors in mobile devices and apply machine learning to provide novel services. However, factors such as different users, devices, environments, and hyperparameters affect the performance of these applications, making domain shift (i.e., the distribution shift between a target user and the source training dataset) an important problem. Although recent domain adaptation techniques attempt to solve this problem, the complex interplay between these factors often limits their effectiveness. We argue that accurately estimating performance in untrained domains could significantly reduce performance uncertainty. We present DAPPER (Domain AdaPtation Performance EstimatoR), which estimates the adaptation performance in a target domain using only unlabeled target data. Our intuition is that the outputs of a model on the target data provide clues to the model's actual performance in the target domain. DAPPER requires neither expensive labeling nor additional training after deployment. Our evaluation on four real-world sensing datasets against four baselines shows that DAPPER outperforms the baselines by 17% on average in estimation accuracy. Moreover, our on-device experiment shows that DAPPER incurs up to 216× less computation overhead than the baselines.
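The intuition that a model's outputs on unlabeled target data hint at its accuracy can be illustrated with a very simple proxy. The sketch below is not DAPPER's estimator, which the abstract does not specify; it swaps in a plain average of the maximum softmax probability, which approximates accuracy only under the assumption that the model is reasonably calibrated. The function names and the example logits are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw logits to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def estimate_accuracy(batch_logits):
    """Label-free accuracy proxy: mean of the top softmax probability per sample.

    Assumption (not from the paper): the model is roughly calibrated,
    so average confidence tracks average correctness.
    """
    if not batch_logits:
        raise ValueError("need at least one unlabeled sample")
    confidences = [max(softmax(row)) for row in batch_logits]
    return sum(confidences) / len(confidences)


# Hypothetical logits for three unlabeled target samples, three classes each.
target_logits = [
    [4.0, 0.5, 0.1],  # confident prediction
    [0.2, 0.3, 0.1],  # near-uniform, uncertain prediction
    [1.0, 3.0, 0.5],  # moderately confident prediction
]

estimate = estimate_accuracy(target_logits)
print(f"estimated target accuracy: {estimate:.2f}")
```

A proxy like this needs no labels and no extra training, only forward passes on target data, which is the property the abstract emphasizes. It can be badly misleading when the model is overconfident under domain shift.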
