Cross-Domain Reasoning via Template Filling


Oct 31, 2021

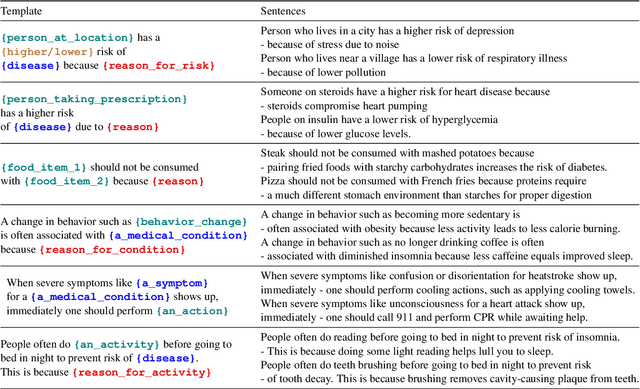

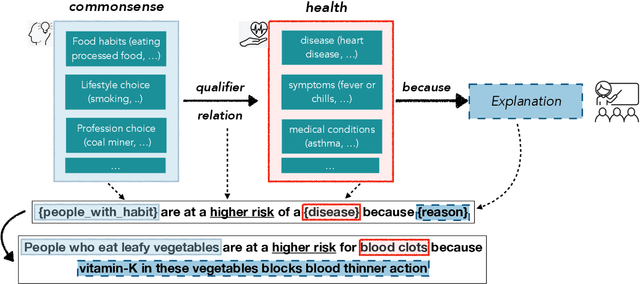

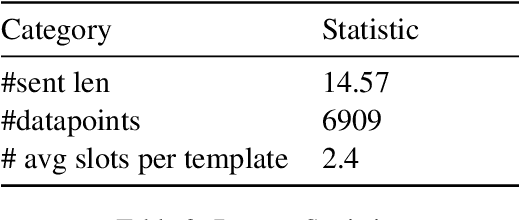

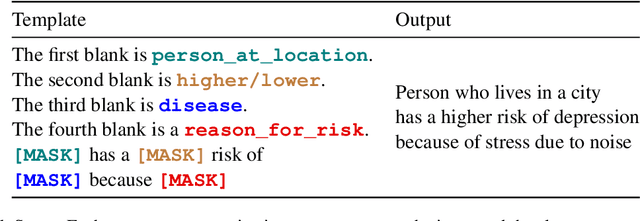

In this paper, we explore the ability of sequence-to-sequence models to perform cross-domain reasoning. To this end, we present a prompt-template-filling approach that enables sequence-to-sequence models to perform cross-domain reasoning. We also present a case study with the commonsense domain and the health and well-being domain, in which we study how prompt-template filling enables pretrained sequence-to-sequence models to reason across domains. Our experiments with several pretrained encoder-decoder models show that cross-domain reasoning remains challenging for current models. We also present an in-depth error analysis and discuss avenues for future research on reasoning across domains.
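The abstract does not spell out the template format. As a minimal sketch of what "template filling" means mechanically, the snippet below assumes a hypothetical prompt template with one slot per domain (commonsense, health) plus a question slot. Filling the slots yields the source string that would be fed to an encoder-decoder model. The slot names, prefixes, and wording are illustrative assumptions, not the paper's actual prompts.

```python
from string import Template

# Hypothetical cross-domain prompt template (an assumption for illustration,
# not the paper's format): one slot per domain, plus the question to answer.
PROMPT = Template(
    "commonsense: $commonsense_fact health: $health_fact question: $question"
)


def fill_prompt(commonsense_fact: str, health_fact: str, question: str) -> str:
    """Fill every template slot to build a single encoder input string.

    Template.substitute raises KeyError if a slot is left unfilled, which
    catches malformed templates early.
    """
    return PROMPT.substitute(
        commonsense_fact=commonsense_fact,
        health_fact=health_fact,
        question=question,
    )


if __name__ == "__main__":
    source = fill_prompt(
        "Ice is cold.",
        "Cold compresses can reduce swelling.",
        "Why put ice on a sprained ankle?",
    )
    # The filled string would then be tokenized and passed to a pretrained
    # sequence-to-sequence model, which generates the answer as text.
    print(source)
```

Keeping the template as a single string with named slots makes it easy to swap in different domain pairs or prompt wordings without changing the model-side code.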
