Controller Synthesis for Omega-Regular and Steady-State Specifications

Paper and Code

Jun 05, 2021

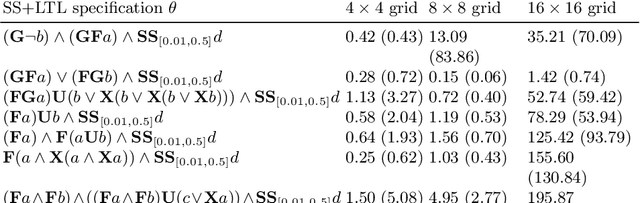

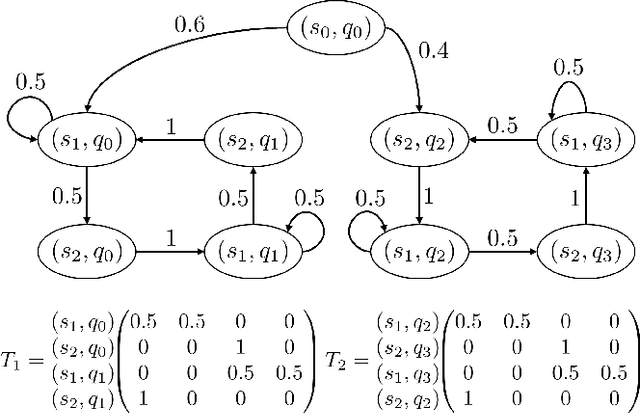

Given a Markov decision process (MDP) and a linear-time ($\omega$-regular or LTL) specification, the controller synthesis problem aims to compute the optimal policy that satisfies the specification. More recently, problems that reason over the asymptotic behavior of systems have been proposed through the lens of steady-state planning. This entails finding a control policy for an MDP such that the Markov chain induced by the solution policy satisfies a given set of constraints on its steady-state distribution. This paper studies a generalization of the controller synthesis problem for a linear-time specification under steady-state constraints on the asymptotic behavior. We present an algorithm to find a deterministic policy satisfying $\omega$-regular and steady-state constraints by characterizing the solutions as an integer linear program, and experimentally evaluate our approach.
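The steady-state side of the problem can be made concrete on a toy example. The sketch below is not the paper's integer linear program, and it ignores the $\omega$-regular part entirely. It enumerates every deterministic policy of a tiny MDP, computes the stationary distribution of the induced Markov chain with exact rational arithmetic, and returns the first policy whose long-run probability mass on each labeled state set lies within given bounds. The MDP, its action names, the constraint format `(states, lo, hi)`, and the helpers `stationary` and `synthesize` are all hypothetical. Policies whose induced chain has more than one recurrent class (so no unique steady state) are skipped.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy MDP: MDP[state][action] maps successor -> probability.
MDP = {
    0: {"stay": {0: Fraction(1)},
        "go": {0: Fraction(1, 2), 1: Fraction(1, 2)}},
    1: {"stay": {1: Fraction(1)},
        "back": {0: Fraction(1)},
        "mix": {0: Fraction(1, 2), 1: Fraction(1, 2)}},
}


def stationary(chain):
    """Unique stationary distribution of a Markov chain on states 0..n-1,
    or None if it is not unique (more than one recurrent class)."""
    n = len(chain)
    # Balance equations sum_i pi_i * P(i, j) - pi_j = 0 for j = 0..n-2 ...
    A = [[Fraction(chain[i].get(j, 0)) - (1 if i == j else 0) for i in range(n)]
         for j in range(n - 1)]
    # ... plus the normalization sum_i pi_i = 1 in place of the last one.
    A.append([Fraction(1)] * n)
    b = [Fraction(0)] * (n - 1) + [Fraction(1)]
    # Gauss-Jordan elimination with exact rational arithmetic.
    for col in range(n):
        pivot = next((r for r in range(col, n) if A[r][col] != 0), None)
        if pivot is None:
            return None  # singular system: no unique steady state
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col] / A[col][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
                b[r] -= f * b[col]
    return [b[i] / A[i][i] for i in range(n)]


def synthesize(mdp, constraints):
    """Brute force: first deterministic policy whose induced chain has a
    unique steady state pi with lo <= sum(pi[s] for s in L) <= hi for
    every (L, lo, hi) in constraints; None if no policy qualifies."""
    states = sorted(mdp)
    for choice in product(*(sorted(mdp[s]) for s in states)):
        policy = dict(zip(states, choice))
        chain = {s: mdp[s][policy[s]] for s in states}
        pi = stationary(chain)
        if pi is None:
            continue
        if all(lo <= sum(pi[s] for s in L) <= hi for L, lo, hi in constraints):
            return policy, pi
    return None


# Spend between 1/2 and 3/4 of the time in state 1 in the long run.
print(synthesize(MDP, [({1}, Fraction(1, 2), Fraction(3, 4))]))
# -> ({0: 'go', 1: 'mix'}, [Fraction(1, 2), Fraction(1, 2)])
```

Enumerating policies grows exponentially with the number of states, so this only illustrates the feasibility condition; it is not a substitute for a solver-based encoding such as the integer linear program the abstract describes.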
