Controllable Person Image Synthesis with Spatially-Adaptive Warped Normalization

Paper and Code

Jun 03, 2021

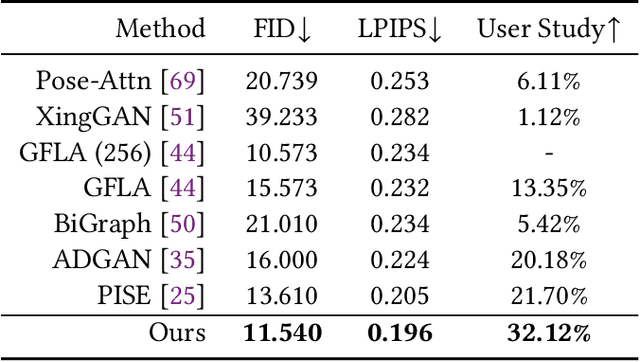

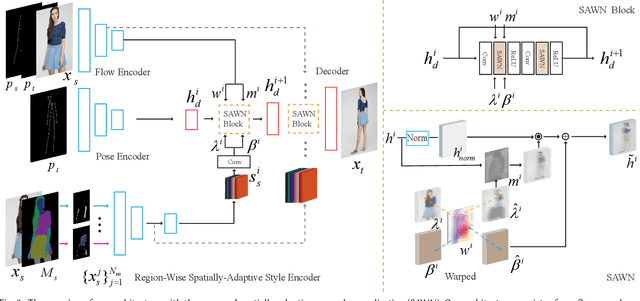

Controllable person image generation aims to produce realistic human images with desired attributes (e.g., a given pose, clothing texture, or hairstyle). However, the large spatial misalignment between the source and target images makes standard image-to-image translation architectures unsuitable for this task. Most state-of-the-art architectures skip the alignment step during generation, which causes many artifacts, especially for person images with complex textures. To solve this problem, we introduce a novel Spatially-Adaptive Warped Normalization (SAWN), which uses a learned flow field to warp the modulation parameters. This allows us to efficiently align spatially-adaptive person styles with pose features. Moreover, we propose a novel self-training part replacement strategy to refine the pretrained model for the texture-transfer task, significantly improving the quality of the generated clothing and the preservation of irrelevant regions. Our experimental results on the widely used DeepFashion dataset demonstrate a significant improvement of the proposed method over state-of-the-art methods on both pose-transfer and texture-transfer tasks. The source code is available at https://github.com/zhangqianhui/Sawn.
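The idea of warping modulation parameters with a flow field can be illustrated with a minimal NumPy sketch. This is not the released SAWN implementation: the function names `bilinear_warp` and `warped_norm`, the per-channel instance normalization, the `(1 + gamma)` modulation form, and the pixel-offset flow convention are all assumptions chosen for illustration, and in the actual method the flow field and modulation maps would be predicted by learned networks rather than passed in by hand.

```python
import numpy as np

def bilinear_warp(param, flow):
    """Warp a (C, H, W) parameter map with a (2, H, W) flow field.

    Assumed convention: flow[0] is the x offset and flow[1] the y offset,
    both in pixels; output[:, y, x] samples param at (y + dy, x + dx).
    """
    _, h, w = param.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Sampling coordinates, clamped to the image border.
    x = np.clip(xs + flow[0], 0, w - 1)
    y = np.clip(ys + flow[1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0
    wy = y - y0
    # Bilinear interpolation between the four neighbouring pixels.
    top = param[:, y0, x0] * (1 - wx) + param[:, y0, x1] * wx
    bottom = param[:, y1, x0] * (1 - wx) + param[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def warped_norm(feat, gamma, beta, flow, eps=1e-5):
    """Normalize pose features, then modulate them with flow-warped style maps.

    feat, gamma, beta: (C, H, W) arrays; flow: (2, H, W) array.
    """
    # Per-channel normalization over the spatial dimensions (an assumption).
    mean = feat.mean(axis=(1, 2), keepdims=True)
    var = feat.var(axis=(1, 2), keepdims=True)
    normed = (feat - mean) / np.sqrt(var + eps)
    # Align the source-derived modulation maps to the target pose.
    gamma_w = bilinear_warp(gamma, flow)
    beta_w = bilinear_warp(beta, flow)
    return normed * (1 + gamma_w) + beta_w

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.normal(size=(8, 16, 16))   # hypothetical pose features
    gamma = rng.normal(size=(8, 16, 16))  # hypothetical style scale map
    beta = rng.normal(size=(8, 16, 16))   # hypothetical style shift map
    flow = rng.uniform(-2, 2, size=(2, 16, 16))
    print(warped_norm(feat, gamma, beta, flow).shape)
```

With a zero flow field the warp reduces to the identity, so the layer behaves like an ordinary spatially-adaptive normalization; a non-zero flow moves the style statistics to where the target pose needs them before modulation is applied.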
