Continually Updating Generative Retrieval on Dynamic Corpora

May 27, 2023

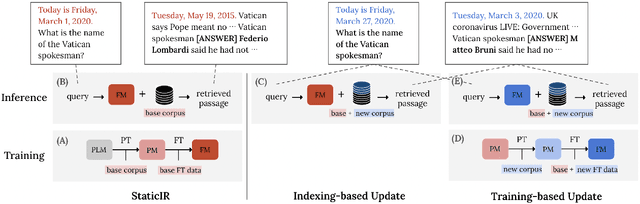

Generative retrieval has recently attracted considerable attention from the research community for its simplicity, high performance, and ability to fully leverage the power of deep autoregressive models. However, prior work on generative retrieval has mostly been evaluated on static benchmarks, whereas realistic retrieval applications often involve dynamic environments in which knowledge is temporal and accumulates over time. In this paper, we introduce STREAMINGIR, a new benchmark derived from StreamingQA that simulates realistic retrieval use cases and is dedicated to quantifying how well retrieval methods generalize to dynamically changing corpora. On this benchmark, we conduct an in-depth comparative evaluation of bi-encoder and generative retrieval in terms of both performance and efficiency under varying degrees of supervision. Our results suggest that generative retrieval shows (1) degraded performance when only supervised data is used for fine-tuning, (2) superior performance to bi-encoders when only unsupervised data is available, and (3) lower performance than bi-encoders when both unsupervised and supervised data are used, due to catastrophic forgetting. Nevertheless, we show that parameter-efficient measures can effectively mitigate this issue and yield performance and efficiency competitive with the bi-encoder baseline. Our results open up new potential for generative retrieval in practical dynamic environments. Our work will be open-sourced.
