Complex-Valued Neural Networks for Privacy Protection

Paper and Code

Jan 28, 2019

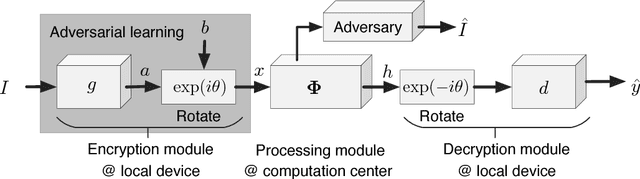

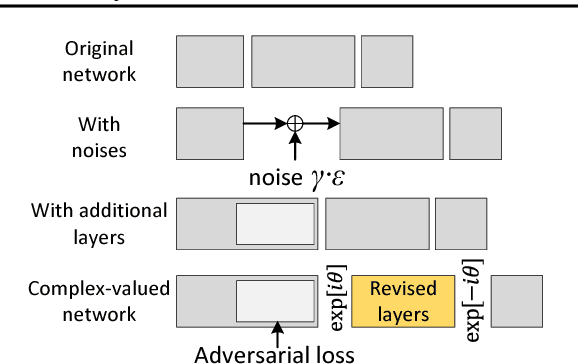

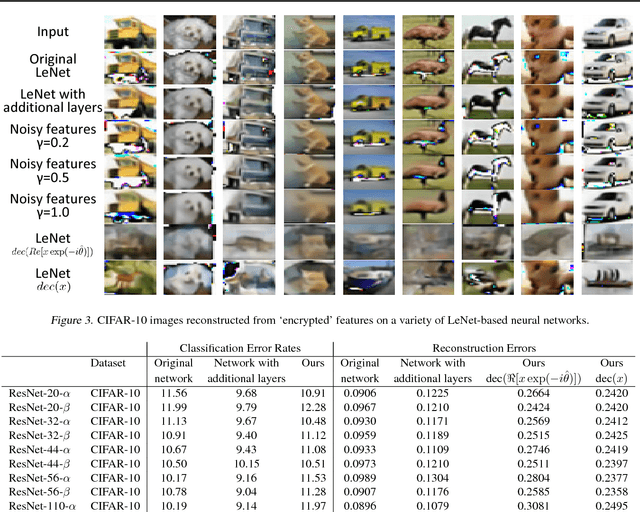

This paper proposes a generic method for revising traditional neural networks to protect privacy. Our method is designed to prevent inversion attacks, i.e., to stop an adversary from recovering private information from the intermediate-layer features of a neural network. It transforms the real-valued features of an intermediate layer into complex-valued features, in which private information is hidden in a random phase of the transformed features. To prevent the adversary from recovering the phase, we adopt an adversarial-learning algorithm to generate the complex-valued feature. Crucially, the transformed feature can be processed directly by the deep neural network, but without knowing the true phase, an adversary can recover neither the input information nor the prediction result. Preliminary experiments with various neural networks (including LeNet, VGG, and residual networks) on different datasets show that our method can successfully defend against feature inversion attacks while preserving learning accuracy.
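The phase-hiding idea can be illustrated with a small sketch. The abstract does not spell out the encoding, so everything here is an assumption made for illustration: the real feature `a` is paired with a decoy imaginary part `b`, the pair is rotated by a secret phase `theta`, and the processing layer is a bias-free linear map with real weights (which commutes with a global phase rotation). The function names `encode`, `linear`, and `decode` are hypothetical, and the adversarial-learning step is not modeled.

```python
import cmath
import math
import random


def encode(a, b, theta):
    """Hide real features `a` (with decoy imaginary parts `b`) behind a secret phase."""
    rot = cmath.exp(1j * theta)
    return [rot * complex(x, y) for x, y in zip(a, b)]


def linear(weights, x):
    """Bias-free linear layer with real weights (assumed layer type).

    Because the weights are real and there is no bias, applying this layer
    to a rotated vector gives the rotated output of the unrotated vector.
    """
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def decode(z, theta):
    """Undo the secret rotation and keep the real part."""
    rot = cmath.exp(-1j * theta)
    return [(rot * v).real for v in z]


rng = random.Random(0)
a = [0.5, -1.2, 2.0]                      # private real-valued features
b = [rng.gauss(0.0, 1.0) for _ in a]      # decoy features (assumption)
theta = rng.uniform(0.0, 2.0 * math.pi)   # secret phase, known only to the owner
W = [[1.0, 0.0, -1.0], [0.5, 2.0, 0.25]]  # illustrative real weights

plain = linear(W, a)                      # what an unprotected network computes
hidden = linear(W, encode(a, b, theta))   # what the processing side sees
recovered = decode(hidden, theta)         # owner recovers the result with theta
```

With the correct `theta`, `recovered` matches `plain` up to floating-point error, while the processing side only ever handles the rotated complex values. Nonlinear layers would need variants that preserve this rotation property, which the sketch does not attempt.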
