Comparison of Multiple Features and Modeling Methods for Text-dependent Speaker Verification

Paper and Code

Sep 09, 2017

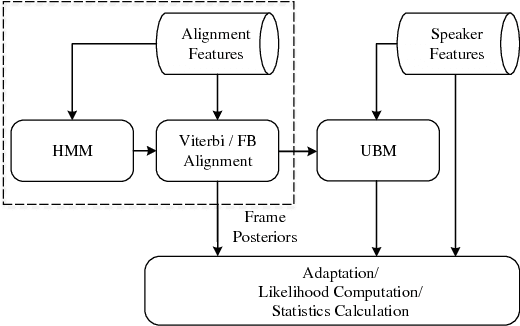

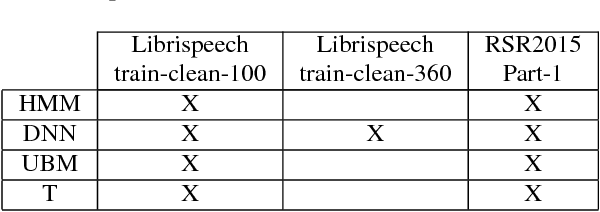

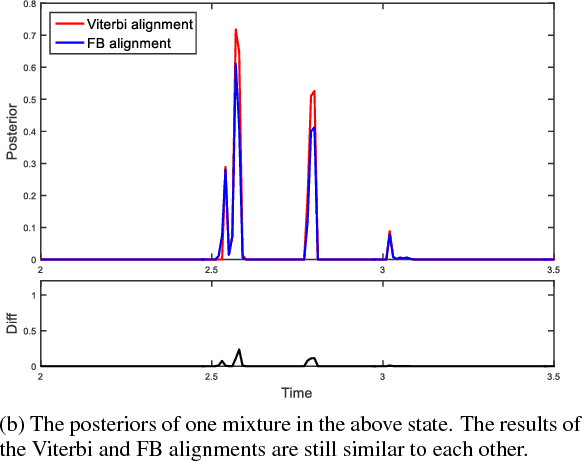

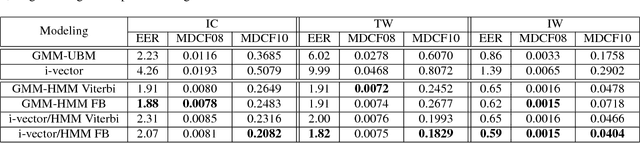

Text-dependent speaker verification is becoming popular in the speaker recognition society. However, the conventional i-vector framework which has been successful for speaker identification and other similar tasks works relatively poorly in this task. Researchers have proposed several new methods to improve performance, but it is still unclear that which model is the best choice, especially when the pass-phrases are prompted during enrollment and test. In this paper, we introduce four modeling methods and compare their performance on the newly published RedDots dataset. To further explore the influence of different frame alignments, Viterbi and forward-backward algorithms are both used in the HMM-based models. Several bottleneck features are also investigated. Our experiments show that, by explicitly modeling the lexical content, the HMM-based modeling achieves good results in the fixed-phrase condition. In the prompted-phrase condition, GMM-HMM and i-vector/HMM are not as successful. In both conditions, the forward-backward algorithm brings more benefits to the i-vector/HMM system. Additionally, we also find that even though bottleneck features perform well for text-independent speaker verification, they do not outperform MFCCs on the most challenging Imposter-Correct trials on RedDots.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge