Combining learned skills and reinforcement learning for robotic manipulations


Aug 02, 2019

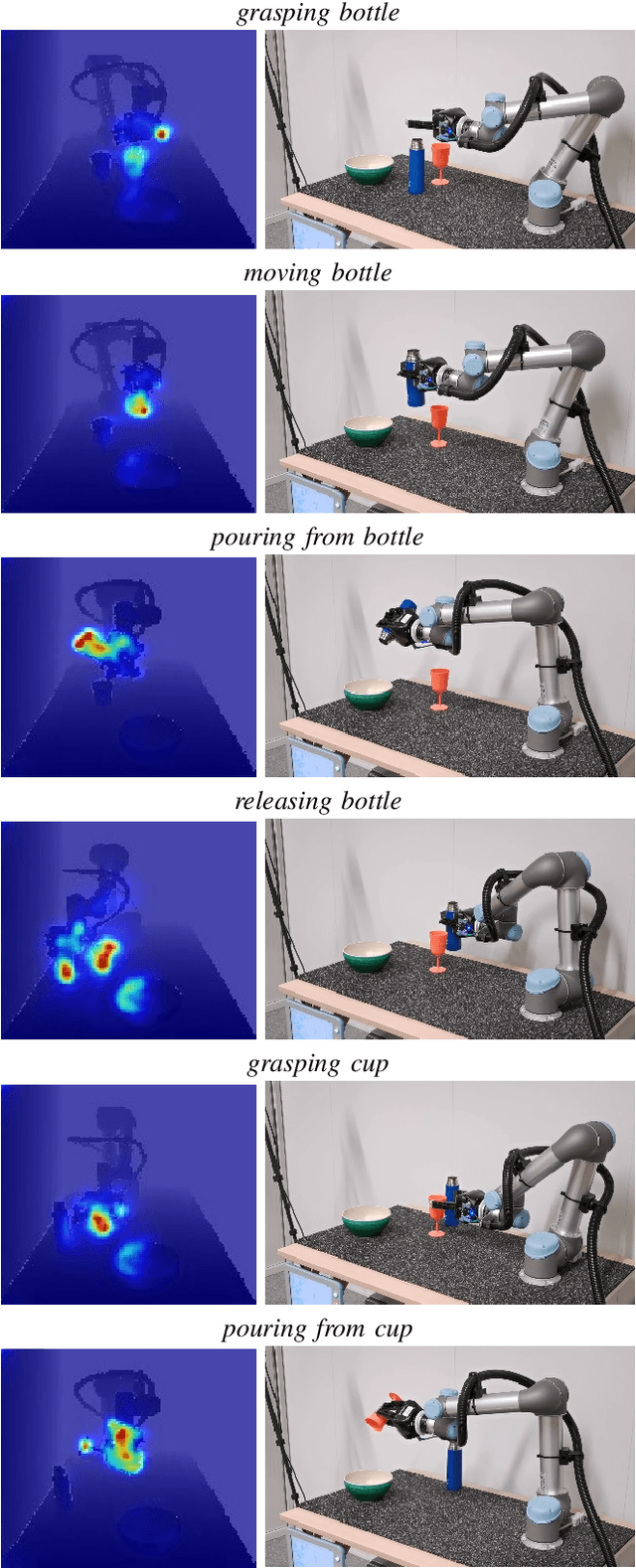

Manipulation tasks such as preparing a meal or assembling furniture remain highly challenging for robotics and vision. The supervised approach of imitation learning can handle short tasks but suffers from compounding errors and needs many demonstrations for longer and more complex tasks. Reinforcement learning (RL) can find solutions beyond demonstrations but requires tedious, task-specific reward engineering for multi-step problems. In this work we address the difficulties of both methods and explore their combination. To this end, we propose RL policies operating on pre-trained skills that can learn composite manipulations using no intermediate rewards and no demonstrations of full tasks. We also propose an efficient way to train basic skills from a few synthetic demonstrated trajectories by exploring recent CNN architectures and data augmentation. We show successful learning of policies for composite manipulation tasks such as making a simple breakfast. Notably, our method achieves high success rates on a real robot while using only synthetic training data.
