COLT: Towards Completeness-Oriented Tool Retrieval for Large Language Models

May 25, 2024

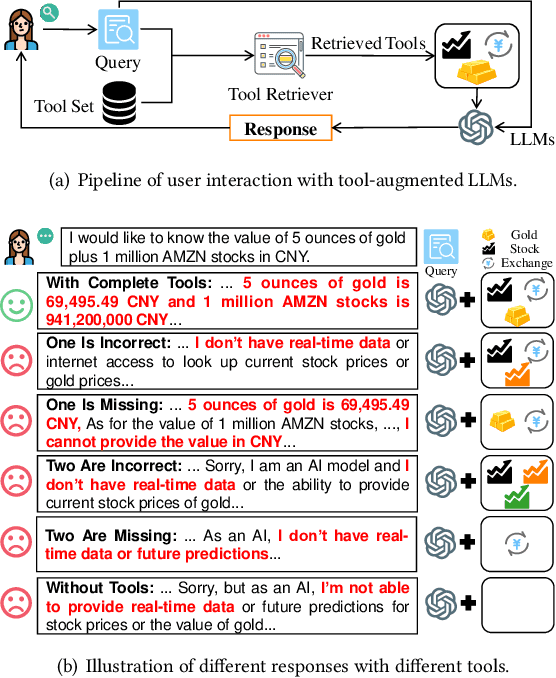

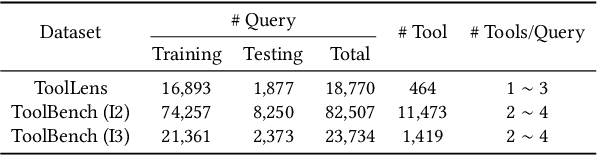

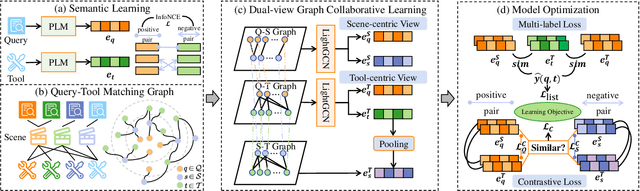

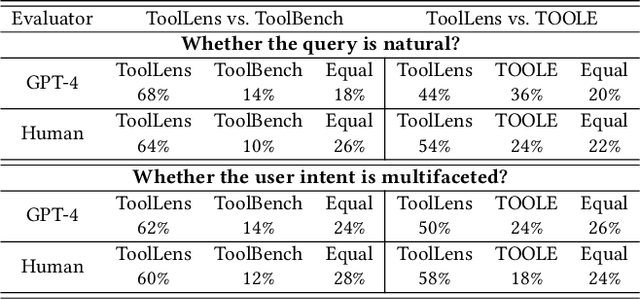

Recently, the integration of external tools with Large Language Models (LLMs) has emerged as a promising approach to overcome the inherent constraints of their pre-training data. However, real-world applications often involve a diverse range of tools, making it infeasible to incorporate all tools directly into LLMs due to constraints on input length and response time. Therefore, to fully exploit the potential of tool-augmented LLMs, it is crucial to develop an effective tool retrieval system. Existing tool retrieval methods mainly rely on semantic matching between user queries and tool descriptions, which often results in the selection of redundant tools. As a result, these methods fail to provide a complete set of diverse tools necessary for addressing the multifaceted problems encountered by LLMs. In this paper, we propose a novel model-agnostic COllaborative Learning-based Tool Retrieval approach, COLT, which captures not only the semantic similarities between user queries and tool descriptions but also the collaborative information of tools. Specifically, in the semantic learning stage, we first fine-tune PLM-based retrieval models to capture the semantic relationships between queries and tools. Subsequently, in the collaborative learning stage, we construct three bipartite graphs among queries, scenes, and tools and introduce a dual-view graph collaborative learning framework to capture the intricate collaborative relationships among tools. Extensive experiments on both the open benchmark and the newly introduced ToolLens dataset show that COLT achieves superior performance. Notably, BERT-mini (11M) with our proposed framework outperforms BERT-large (340M), which has 30 times more parameters. Additionally, we plan to publicly release the ToolLens dataset to support further research in tool retrieval.
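
To make the graph construction step more concrete, the following is a minimal Python sketch of how three bipartite graphs among queries, scenes, and tools might be represented as edge sets. It is an illustration only, not the authors' implementation: the toy annotations, the tool and scene names, and the function `build_bipartite_graphs` are all hypothetical, and the sketch assumes each query is annotated with the scenes it involves and the tools each scene requires. The dual-view collaborative learning that operates on these graphs is not shown.

```python
# Hypothetical toy annotations (not from the paper or the ToolLens dataset):
# each query maps to the scenes it involves, and each scene to the tools it needs.
query_annotations = {
    "q1": {"s_weather": ["weather_api"], "s_flight": ["flight_search"]},
    "q2": {"s_weather": ["weather_api"], "s_hotel": ["hotel_search"]},
}


def build_bipartite_graphs(annotations):
    """Return edge sets for query-scene, query-tool, and scene-tool graphs."""
    query_scene = set()
    query_tool = set()
    scene_tool = set()
    for query, scenes in annotations.items():
        for scene, tools in scenes.items():
            query_scene.add((query, scene))
            for tool in tools:
                # A query is linked to every tool used by any of its scenes.
                query_tool.add((query, tool))
                scene_tool.add((scene, tool))
    return query_scene, query_tool, scene_tool


query_scene, query_tool, scene_tool = build_bipartite_graphs(query_annotations)
```

In this toy example, `weather_api` is shared by both queries through the same scene. That kind of co-occurrence is what a collaborative signal could draw on when retrieving a complete tool set rather than several semantically similar but redundant tools.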
