Cocktail Edge Caching: Ride Dynamic Trends of Content Popularity with Ensemble Learning

Paper and Code

Jan 14, 2021

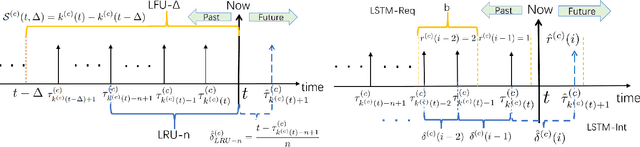

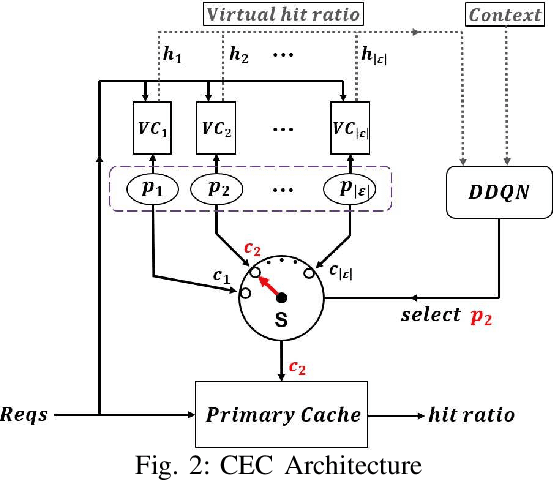

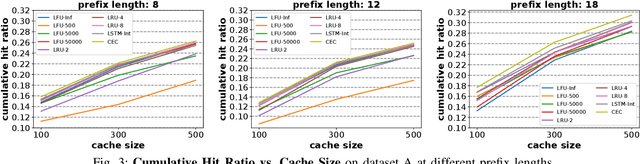

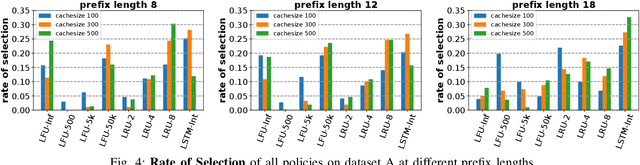

Edge caching will play a critical role in facilitating the emerging content-rich applications. However, it faces many new challenges, in particular, the highly dynamic content popularity and the heterogeneous caching configurations. In this paper, we propose Cocktail Edge Caching, that tackles the dynamic popularity and heterogeneity through ensemble learning. Instead of trying to find a single dominating caching policy for all the caching scenarios, we employ an ensemble of constituent caching policies and adaptively select the best-performing policy to control the cache. Towards this goal, we first show through formal analysis and experiments that different variations of the LFU and LRU policies have complementary performance in different caching scenarios. We further develop a novel caching algorithm that enhances LFU/LRU with deep recurrent neural network (LSTM) based time-series analysis. Finally, we develop a deep reinforcement learning agent that adaptively combines base caching policies according to their virtual hit ratios on parallel virtual caches. Through extensive experiments driven by real content requests from two large video streaming platforms, we demonstrate that CEC not only consistently outperforms all single policies, but also improves the robustness of them. CEC can be well generalized to different caching scenarios with low computation overheads for deployment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge