Cloth Manipulation Using Random-Forest-Based Imitation Learning

Jan 16, 2019

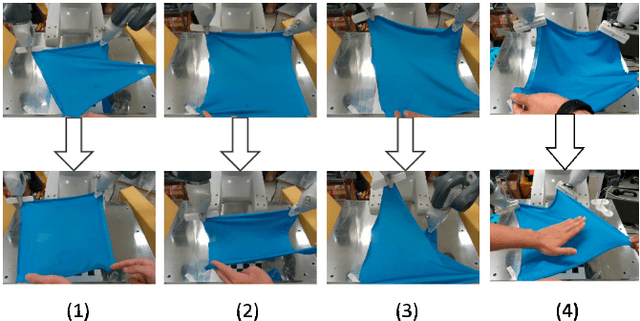

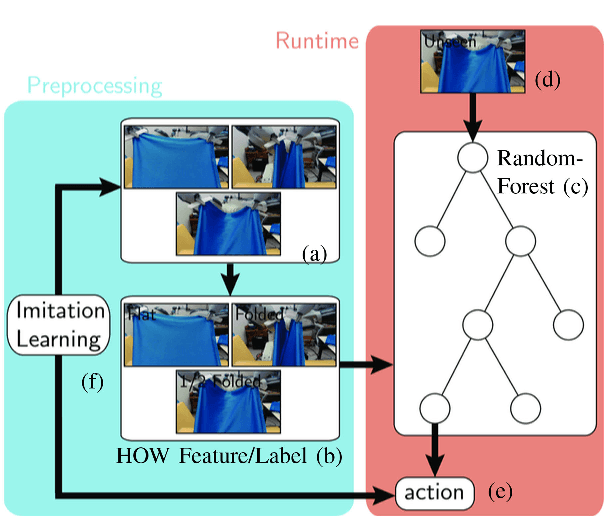

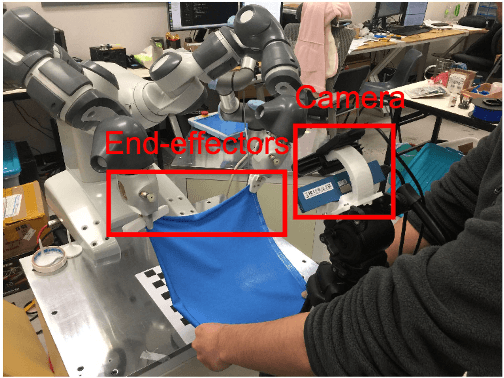

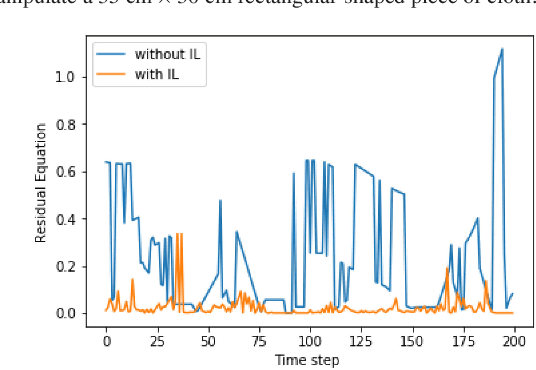

We present a novel approach for robust manipulation of high-DOF deformable objects such as cloth. Our approach uses a random-forest-based controller that maps the observed visual features of the cloth to an optimal control action of the manipulator. The topological structure of this controller is determined automatically from training data consisting of visual features and optimal control actions. This enables us to integrate the overall process of training data classification and controller optimization into an imitation learning (IL) approach. Our approach enables learning a robust control policy for cloth manipulation with guarantees on convergence. We have evaluated our approach on different multi-task cloth manipulation benchmarks such as flattening, folding, and twisting. In practice, our approach works well with different deformable-object features, whether learned for the specific task or obtained through deep learning. Moreover, our controller outperforms a simple or piecewise linear controller in terms of robustness to noise. In addition, our approach is easy to implement and does not require much parameter tuning.
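To make the idea of a forest that maps features to control actions more concrete, here is a minimal sketch in plain Python. It is not the authors' algorithm: the toy 2-D "feature" state, the proportional `expert_action`, the randomized split search, and the DAgger-style data aggregation loop in `train` are all assumptions chosen for illustration. Each leaf simply stores the mean expert action of the samples that reach it, and the forest averages the leaf actions of its trees.

```python
import random

def mean_action(actions):
    # Component-wise mean of a non-empty list of action vectors.
    n = len(actions)
    return [sum(a[i] for a in actions) / n for i in range(len(actions[0]))]

def sse(actions):
    # Sum of squared deviations of the actions from their mean.
    m = mean_action(actions)
    return sum((a[i] - m[i]) ** 2 for a in actions for i in range(len(m)))

def build_tree(X, Y, depth, rng, n_candidates=8):
    # Tree shape is driven by the data: split only where it reduces action error.
    if depth == 0 or len(X) < 4:
        return ("leaf", mean_action(Y))
    best = None
    for _ in range(n_candidates):
        j = rng.randrange(len(X[0]))   # random feature index
        t = rng.choice(X)[j]           # random threshold taken from the data
        left = [k for k in range(len(X)) if X[k][j] <= t]
        right = [k for k in range(len(X)) if X[k][j] > t]
        if not left or not right:
            continue
        cost = sse([Y[k] for k in left]) + sse([Y[k] for k in right])
        if best is None or cost < best[0]:
            best = (cost, j, t, left, right)
    if best is None:
        return ("leaf", mean_action(Y))
    _, j, t, left, right = best
    return ("node", j, t,
            build_tree([X[k] for k in left], [Y[k] for k in left], depth - 1, rng),
            build_tree([X[k] for k in right], [Y[k] for k in right], depth - 1, rng))

def tree_predict(tree, x):
    while tree[0] == "node":
        _, j, t, left, right = tree
        tree = left if x[j] <= t else right
    return tree[1]

def fit_forest(X, Y, n_trees, depth, rng):
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]   # bootstrap resample
        forest.append(build_tree([X[i] for i in idx], [Y[i] for i in idx], depth, rng))
    return forest

def forest_predict(forest, x):
    # Controller output: average of the per-tree leaf actions.
    return mean_action([tree_predict(t, x) for t in forest])

def expert_action(x):
    # Hypothetical expert: proportional control driving the features to zero.
    return [-0.5 * v for v in x]

def rollout(policy, rng, steps=10):
    # Toy dynamics (an assumption): the action is added directly to the features.
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    visited = []
    for _ in range(steps):
        visited.append(list(x))
        x = [xi + ai for xi, ai in zip(x, policy(x))]
    return visited

def train(iterations=5, seed=0):
    rng = random.Random(seed)
    X, Y = [], []
    policy = expert_action              # first pass follows the expert
    forest = None
    for _ in range(iterations):
        for _ in range(20):
            for x in rollout(policy, rng):
                X.append(x)
                Y.append(expert_action(x))   # expert labels visited states
        forest = fit_forest(X, Y, n_trees=10, depth=6, rng=rng)
        policy = lambda x, f=forest: forest_predict(f, x)
    return forest

if __name__ == "__main__":
    forest = train()
    print(forest_predict(forest, [0.9, -0.9]))
```

In this sketch the aggregation loop labels the states visited by the current learned policy with expert actions and refits the forest, which is one common way to set up imitation learning; the paper's own controller optimization and convergence guarantees are not reproduced here.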
