Bridging the Language Gap: Knowledge Injected Multilingual Question Answering

Paper and Code

Apr 06, 2023

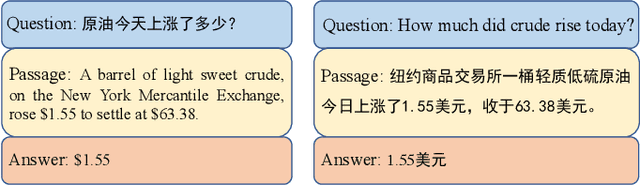

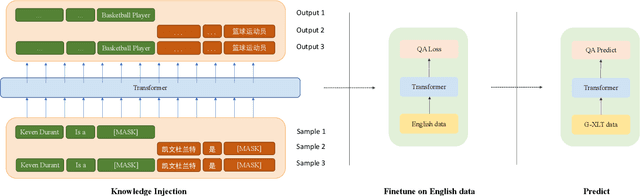

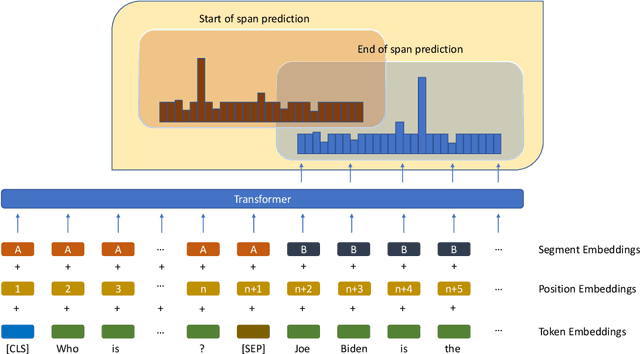

Question Answering (QA) is the task of automatically answering questions posed by humans in natural languages. There are different settings to answer a question, such as abstractive, extractive, boolean, and multiple-choice QA. As a popular topic in natural language processing tasks, extractive question answering task (extractive QA) has gained extensive attention in the past few years. With the continuous evolvement of the world, generalized cross-lingual transfer (G-XLT), where question and answer context are in different languages, poses some unique challenges over cross-lingual transfer (XLT), where question and answer context are in the same language. With the boost of corresponding development of related benchmarks, many works have been done to improve the performance of various language QA tasks. However, only a few works are dedicated to the G-XLT task. In this work, we propose a generalized cross-lingual transfer framework to enhance the model's ability to understand different languages. Specifically, we first assemble triples from different languages to form multilingual knowledge. Since the lack of knowledge between different languages greatly limits models' reasoning ability, we further design a knowledge injection strategy via leveraging link prediction techniques to enrich the model storage of multilingual knowledge. In this way, we can profoundly exploit rich semantic knowledge. Experiment results on real-world datasets MLQA demonstrate that the proposed method can improve the performance by a large margin, outperforming the baseline method by 13.18%/12.00% F1/EM on average.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge