Boosting In-Context Learning with Factual Knowledge

Paper and Code

Sep 26, 2023

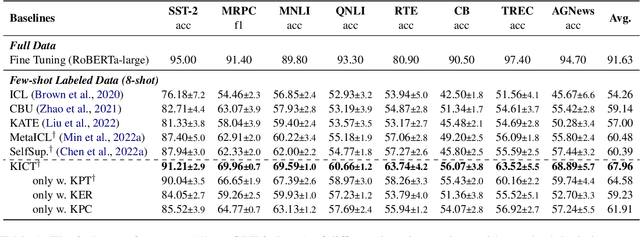

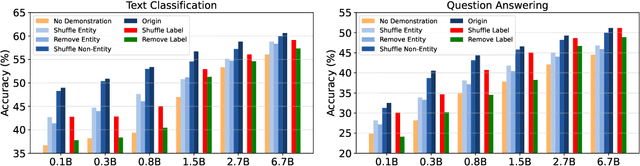

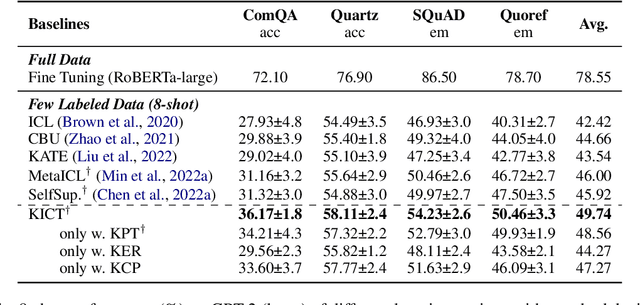

In-context learning (ICL) with large language models (LLMs) aims to solve previously unseen tasks by conditioning on a few training examples, eliminating the need for parameter updates while achieving competitive performance. In this paper, we demonstrate that factual knowledge is imperative for ICL performance in three core facets: the inherent knowledge learned by LLMs, the factual knowledge derived from the selected in-context examples, and the knowledge biases of LLMs in output generation. To unleash the power of LLMs in few-shot learning scenarios, we introduce a novel Knowledgeable In-Context Tuning (KICT) framework that further improves ICL performance by 1) injecting factual knowledge into LLMs during continual self-supervised pre-training, 2) judiciously selecting examples with high knowledge relevance, and 3) calibrating the prediction results based on prior knowledge. We evaluate the proposed approaches on auto-regressive LLMs (e.g., GPT-style models) over multiple text classification and question answering tasks. Experimental results demonstrate that KICT substantially outperforms strong baselines, improving accuracy by more than 13% on text classification and more than 7% on question answering.
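
To make the second and third steps concrete, here is a minimal Python sketch. It is not the KICT implementation: the abstract does not specify how knowledge relevance or calibration is computed, so the sketch assumes Jaccard overlap between entity sets as a stand-in relevance score and a simple divide-by-prior renormalization as a stand-in calibration. The function names, the `entities` field, and the toy inputs are all hypothetical.

```python
def knowledge_relevance(query_entities, example_entities):
    """Jaccard overlap between two entity sets (an assumed relevance score)."""
    q, e = set(query_entities), set(example_entities)
    if not q and not e:
        return 0.0
    return len(q & e) / len(q | e)


def select_examples(query_entities, candidates, k):
    """Return the k candidates whose entity sets overlap most with the query."""
    ranked = sorted(
        candidates,
        key=lambda c: knowledge_relevance(query_entities, c["entities"]),
        reverse=True,
    )
    return ranked[:k]


def calibrate(label_probs, prior_probs):
    """Divide each label probability by its prior, then renormalize (assumed scheme)."""
    adjusted = {label: p / prior_probs[label] for label, p in label_probs.items()}
    total = sum(adjusted.values())
    return {label: value / total for label, value in adjusted.items()}


# Toy data, invented for illustration only.
candidates = [
    {"id": "a", "entities": {"Paris", "France"}},
    {"id": "b", "entities": {"Berlin"}},
    {"id": "c", "entities": {"Paris"}},
]
selected = select_examples({"Paris", "France"}, candidates, k=2)
calibrated = calibrate({"pos": 0.6, "neg": 0.4}, {"pos": 0.75, "neg": 0.25})
```

In this toy setup, a label that the model favors a priori (`pos`) is down-weighted after calibration, which is the general idea behind correcting a knowledge bias in the output distribution.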
