BiTraP: Bi-directional Pedestrian Trajectory Prediction with Multi-modal Goal Estimation


Jul 29, 2020

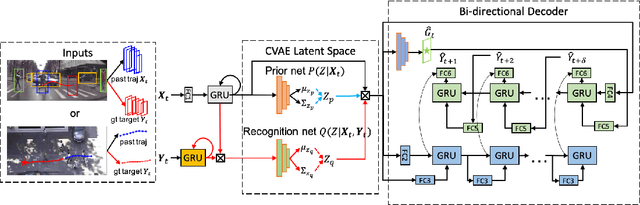

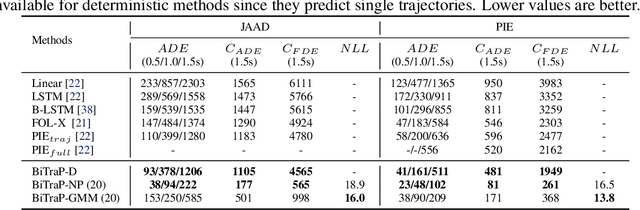

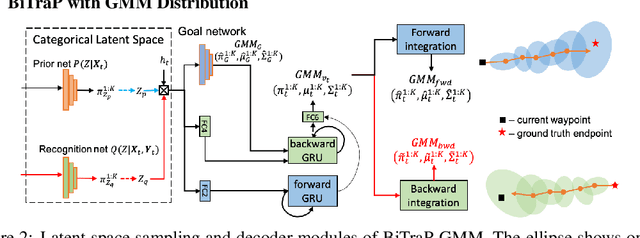

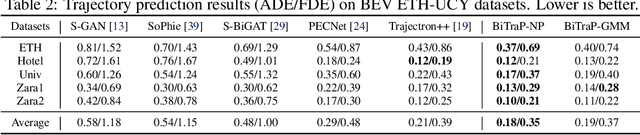

Pedestrian trajectory prediction is an essential task in robotic applications such as autonomous driving and robot navigation. State-of-the-art trajectory predictors use a conditional variational autoencoder (CVAE) with recurrent neural networks (RNNs) to encode observed trajectories and decode multi-modal future trajectories. This process can suffer from accumulated errors over long prediction horizons (≥ 2 seconds). This paper presents BiTraP, a goal-conditioned bi-directional multi-modal trajectory prediction method based on the CVAE. BiTraP estimates the goal (end-point) of trajectories and introduces a novel bi-directional decoder to improve longer-term trajectory prediction accuracy. Extensive experiments show that BiTraP generalizes to both first-person view (FPV) and bird's-eye view (BEV) scenarios and outperforms state-of-the-art results by roughly 10-50%. We also show that different choices of non-parametric versus parametric target models in the CVAE directly influence the predicted multi-modal trajectory distributions. These results provide guidance on trajectory predictor design for robotic applications such as collision avoidance and navigation systems.
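As a rough intuition for why conditioning on an estimated goal can limit accumulated error, the toy sketch below blends a forward constant-velocity rollout (which drifts when the velocity estimate is slightly off) with a backward pass anchored at a candidate goal. This is not BiTraP's learned CVAE or RNN decoder: the function names, the constant-velocity model, and the linear blending weights are all assumptions made purely for illustration.

```python
import numpy as np

def forward_rollout(start, velocity, horizon):
    """Roll forward from the last observed position at constant velocity."""
    steps = np.arange(1, horizon + 1)[:, None]
    return start + steps * velocity

def backward_rollout(start, goal, horizon):
    """Walk back from a candidate goal (the position at the final step)."""
    step = (goal - start) / horizon
    remaining = np.arange(horizon - 1, -1, -1)[:, None]  # steps left to goal
    return goal - remaining * step

def bidirectional_predict(start, velocity, goal, horizon):
    """Blend both passes: trust the forward pass early, the goal late."""
    fwd = forward_rollout(start, velocity, horizon)
    bwd = backward_rollout(start, goal, horizon)
    w = np.arange(1, horizon + 1)[:, None] / horizon  # weight reaches 1 at the goal
    return (1.0 - w) * fwd + w * bwd

def predict_multimodal(start, velocity, goals, horizon):
    """One trajectory per candidate goal, shape (num_goals, horizon, 2)."""
    return np.stack([bidirectional_predict(start, velocity, g, horizon)
                     for g in goals])

# Hypothetical inputs: a slightly noisy velocity estimate and two candidate goals.
start = np.array([0.0, 0.0])
velocity = np.array([1.0, 0.1])
goals = np.array([[4.0, 0.0], [4.0, 1.0]])
trajectories = predict_multimodal(start, velocity, goals, horizon=4)
print(trajectories.shape)
```

In this sketch the forward-only rollout carries the velocity error all the way to the last step, while each blended trajectory ends exactly at its candidate goal. Supplying several goals yields several trajectories, a crude stand-in for multi-modal prediction.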
