Bayesian Optimization with Missing Inputs

Paper and Code

Jun 19, 2020

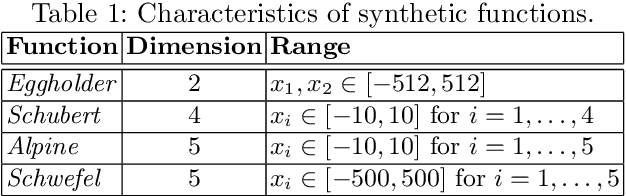

Bayesian optimization (BO) is an efficient method for optimizing expensive black-box functions. In real-world applications, BO often faces a major problem of missing values in inputs. The missing inputs can happen in two cases. First, the historical data for training BO often contain missing values. Second, when performing the function evaluation (e.g. computing alloy strength in a heat treatment process), errors may occur (e.g. a thermostat stops working) leading to an erroneous situation where the function is computed at a random unknown value instead of the suggested value. To deal with this problem, a common approach just simply skips data points where missing values happen. Clearly, this naive method cannot utilize data efficiently and often leads to poor performance. In this paper, we propose a novel BO method to handle missing inputs. We first find a probability distribution of each missing value so that we can impute the missing value by drawing a sample from its distribution. We then develop a new acquisition function based on the well-known Upper Confidence Bound (UCB) acquisition function, which considers the uncertainty of imputed values when suggesting the next point for function evaluation. We conduct comprehensive experiments on both synthetic and real-world applications to show the usefulness of our method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge