AutoIP: A United Framework to Integrate Physics into Gaussian Processes

Paper and Code

Feb 24, 2022

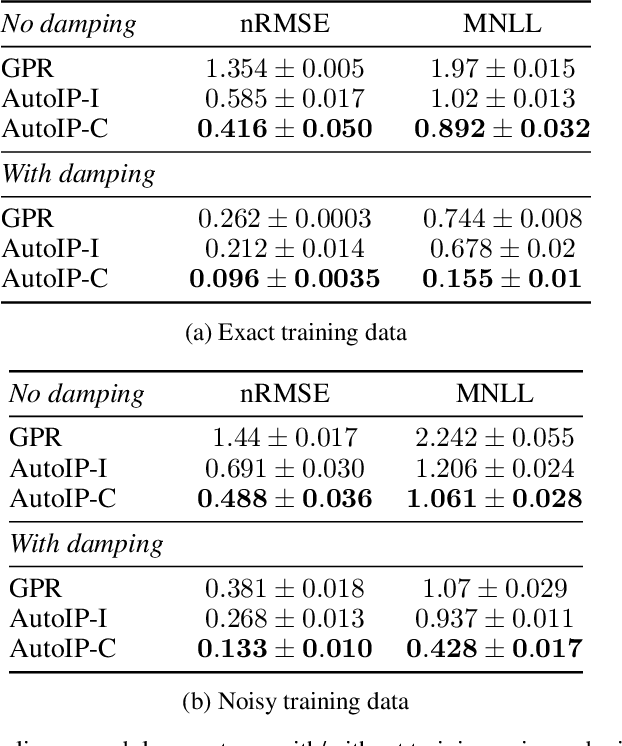

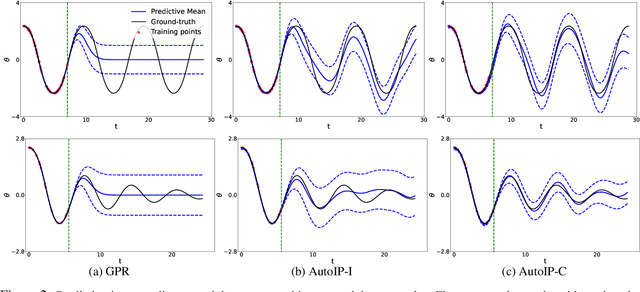

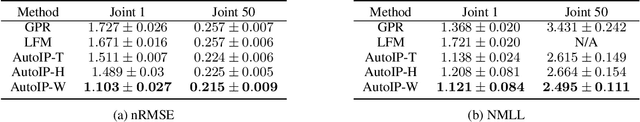

Physics modeling is critical for modern science and engineering applications. From data science perspective, physics knowledge -- often expressed as differential equations -- is valuable in that it is highly complementary to data, and can potentially help overcome data sparsity, noise, inaccuracy, etc. In this work, we propose a simple yet powerful framework that can integrate all kinds of differential equations into Gaussian processes (GPs) to enhance prediction accuracy and uncertainty quantification. These equations can be linear, nonlinear, temporal, time-spatial, complete, incomplete with unknown source terms, etc. Specifically, based on kernel differentiation, we construct a GP prior to jointly sample the values of the target function, equation-related derivatives, and latent source functions from a multivariate Gaussian distribution. The sampled values are fed to two likelihoods -- one is to fit the observations and the other to conform to the equation. We use the whitening trick to evade the strong dependency between the sampled function values and kernel parameters, and develop a stochastic variational learning algorithm. Our method shows improvement upon vanilla GPs in both simulation and several real-world applications, even using rough, incomplete equations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge