Attribute Guided Sparse Tensor-Based Model for Person Re-Identification

Paper and Code

Jul 29, 2021

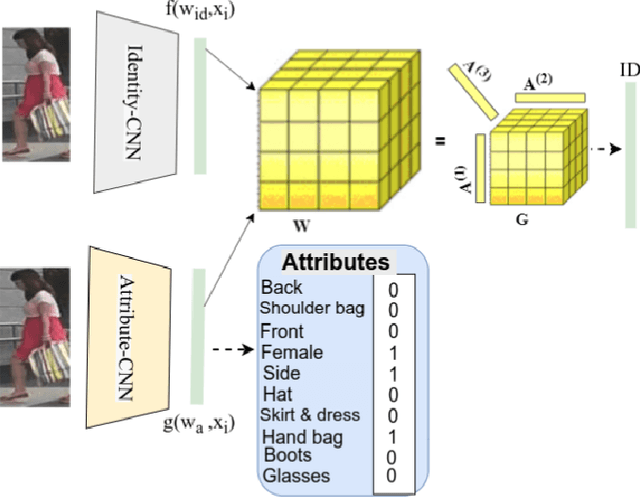

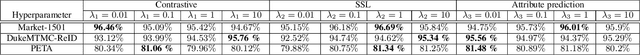

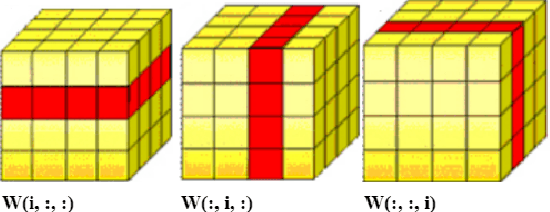

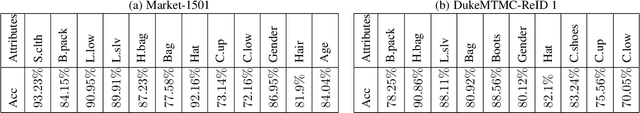

Visual perception of a person is easily influenced by many factors such as camera parameters, pose and viewpoint variations. These variations make person Re-Identification (ReID) a challenging problem. Nevertheless, human attributes usually stand as robust visual properties to such variations. In this paper, we propose a new method to leverage features from human attributes for person ReID. Our model uses a tensor to non-linearly fuse identity and attribute features, and then forces the parameters of the tensor in the loss function to generate discriminative fused features for ReID. Since tensor-based methods usually contain a large number of parameters, training all of these parameters becomes very slow, and the chance of overfitting increases as well. To address this issue, we propose two new techniques based on Structural Sparsity Learning (SSL) and Tensor Decomposition (TD) methods to create an accurate and stable learning problem. We conducted experiments on several standard pedestrian datasets, and experimental results indicate that our tensor-based approach significantly improves person ReID baselines and also outperforms state of the art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge