Attentive Semantic Alignment with Offset-Aware Correlation Kernels

Paper and Code

Oct 26, 2018

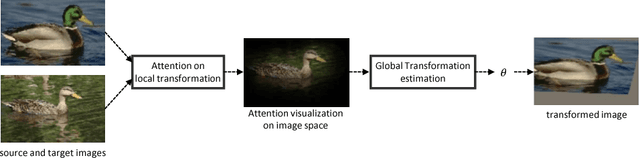

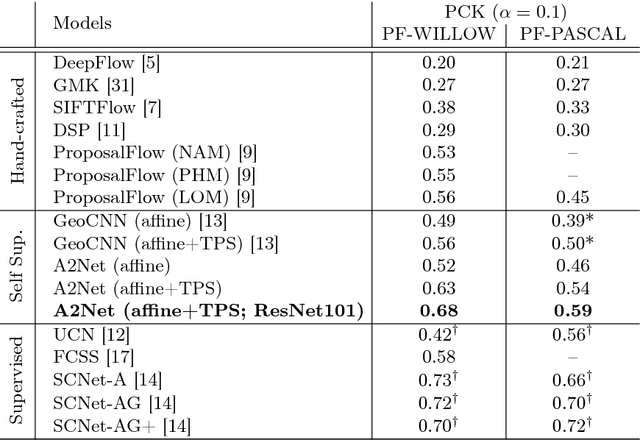

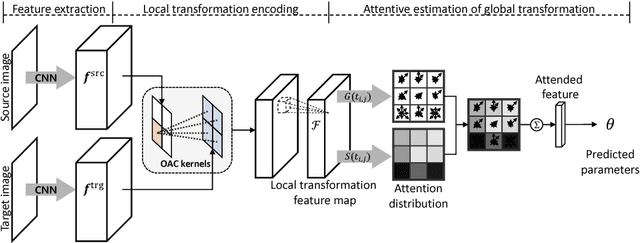

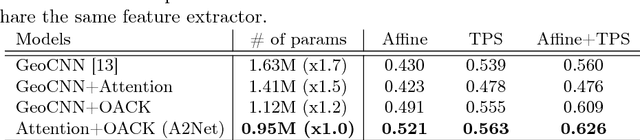

Semantic correspondence is the problem of establishing correspondences across images depicting different instances of the same object or scene class. One recent approach to this problem is to estimate the parameters of a global transformation model that densely aligns one image to the other. Since the entire correlation map between all feature pairs across the two images is typically used to predict such a global transformation, noisy features from differing backgrounds, clutter, and occlusion distract the predictor from estimating the alignment correctly. This is especially challenging in semantic correspondence, where large image variations are often involved. In this paper, we introduce an attentive semantic alignment method that focuses on reliable correlations and filters out distractors. For effective attention, we also propose an offset-aware correlation kernel that learns to capture translation-invariant local transformations when computing correlation values over spatial locations. Experiments demonstrate the effectiveness of the attentive model and the offset-aware kernel, and the proposed model combining both techniques achieves state-of-the-art performance.
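To make the two ingredients named in the abstract more concrete, here is a minimal NumPy sketch of a dense correlation map between two feature maps, followed by a softmax attention step that down-weights unreliable locations. It is an illustration under stated assumptions, not the paper's implementation: the function names, the cosine-similarity correlation, and the attention scores passed in by the caller are all hypothetical, and the learned offset-aware kernel is not reproduced here.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors along `axis` to unit length (eps avoids division by zero)."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def correlation_map(feat_a, feat_b):
    """Cosine similarity between every feature pair across two images.

    feat_a: (H, W, C) features of the source image.
    feat_b: (H2, W2, C) features of the target image.
    Returns an array of shape (H, W, H2 * W2): for each source location,
    its correlation with every target location.
    """
    h, w, c = feat_a.shape
    a = l2_normalize(feat_a.reshape(h * w, c))
    b = l2_normalize(feat_b.reshape(-1, c))
    return (a @ b.T).reshape(h, w, -1)


def attentive_pool(corr, scores):
    """Aggregate correlation vectors with softmax attention over locations.

    corr:   (H, W, D) correlation map.
    scores: (H, W) unnormalized attention scores. In a trained model these
            would be learned; here they are an assumption supplied by the caller.
    Returns (pooled, weights) with shapes (D,) and (H, W).
    """
    h, w, d = corr.shape
    s = scores.reshape(-1)
    e = np.exp(s - s.max())  # subtract the max for numerical stability
    weights = e / e.sum()
    pooled = weights @ corr.reshape(h * w, d)
    return pooled, weights.reshape(h, w)


# Example usage with random features standing in for CNN activations.
rng = np.random.default_rng(0)
feat_a = rng.standard_normal((4, 4, 16))
feat_b = rng.standard_normal((4, 4, 16))
corr = correlation_map(feat_a, feat_b)
# Hypothetical stand-in for learned attention: the best match score per location.
pooled, weights = attentive_pool(corr, corr.max(axis=-1))
```

In this sketch, locations whose best match is weak receive small weights, so they contribute little to the pooled vector that a transformation regressor would consume. That is only one plausible reading of "focusing on reliable correlations"; the method described above learns its attention rather than deriving it from a fixed heuristic.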
