All in One and One for All: A Simple yet Effective Method towards Cross-domain Graph Pretraining

Feb 15, 2024

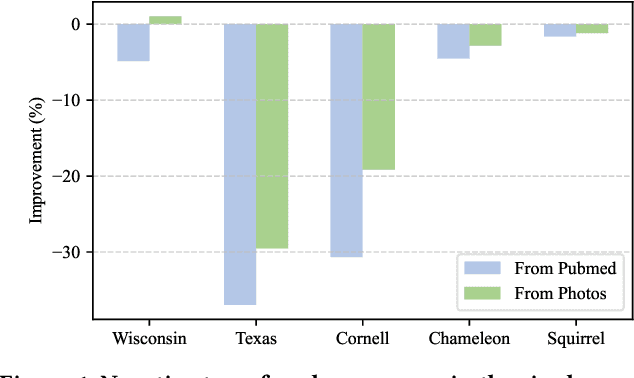

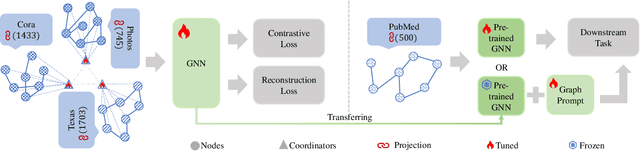

Large Language Models (LLMs) have revolutionized the fields of computer vision (CV) and natural language processing (NLP). One of the most notable advancements of LLMs is that a single model is trained on vast and diverse datasets spanning multiple domains, a paradigm we term "All in One". This methodology gives LLMs strong generalization capabilities and a broad understanding of varied data distributions. Leveraging these capabilities, a single LLM demonstrates remarkable versatility across a variety of domains, a paradigm we term "One for All". However, applying this idea to the graph field remains a formidable challenge, as cross-domain pretraining often results in negative transfer. This issue is particularly important in few-shot learning scenarios, where the scarcity of training data necessitates the incorporation of external knowledge sources. In response to this challenge, we propose Graph COordinators for PrEtraining (GCOPE), a novel approach that harnesses the underlying commonalities across diverse graph datasets to enhance few-shot learning. Our methodology involves a unification framework that combines disparate graph datasets during the pretraining phase to distill and transfer meaningful knowledge to target tasks. Extensive experiments across multiple graph datasets demonstrate the superior efficacy of our approach. By successfully leveraging the synergistic potential of multiple graph datasets for pretraining, our work stands as a pioneering contribution to the realm of graph foundation models.
