AI-Enhanced Intensive Care Unit: Revolutionizing Patient Care with Pervasive Sensing

Mar 11, 2023

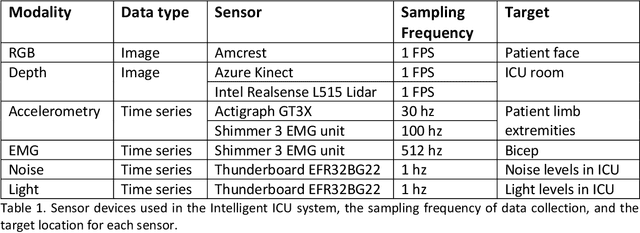

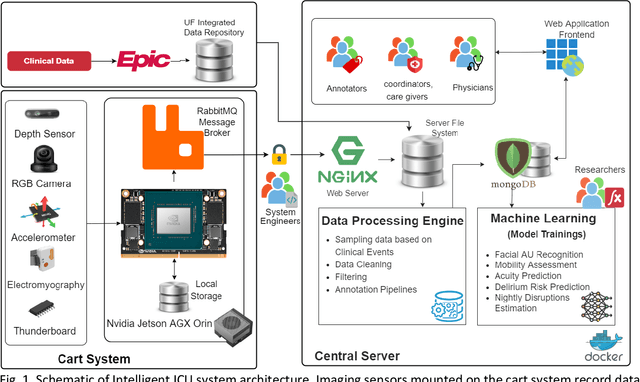

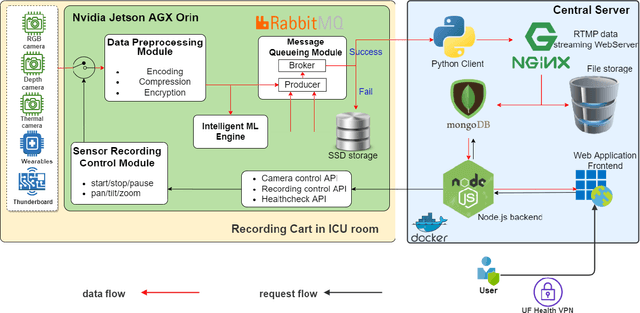

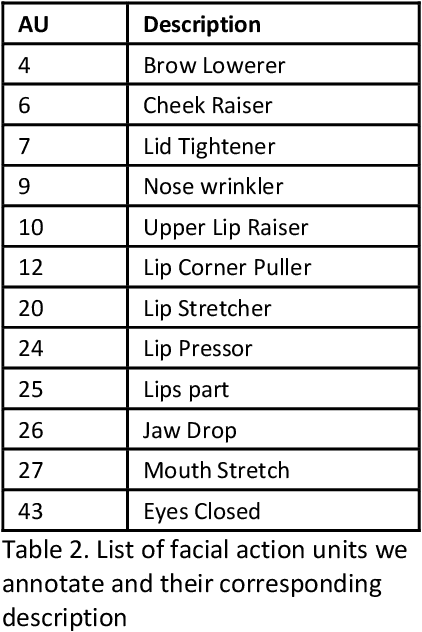

The intensive care unit (ICU) is a specialized hospital space where critically ill patients receive intensive care and monitoring. Comprehensive monitoring is imperative in assessing patients' conditions, in particular acuity, and ultimately the quality of care. However, the extent of patient monitoring in the ICU is limited due to time constraints and the workload on healthcare providers. Currently, visual assessments for acuity, including fine details such as facial expressions, posture, and mobility, are sporadically captured, or not captured at all. These manual observations are subjective and prone to documentation errors, and they add to care providers' workload. Artificial Intelligence (AI) enabled systems have the potential to augment patient visual monitoring and assessment due to their exceptional learning capabilities. Such systems require robust annotated data to train. To this end, we have developed a pervasive sensing and data processing system which collects data from multiple modalities (depth images, color RGB images, accelerometry, electromyography, sound pressure, and light levels) in the ICU for developing intelligent monitoring systems for continuous and granular acuity, delirium risk, pain, and mobility assessment. This paper presents the Intelligent Intensive Care Unit (I2CU) system architecture we developed for real-time patient monitoring and visual assessment.
