Adversarial Text-to-Image Synthesis: A Review


Jan 25, 2021

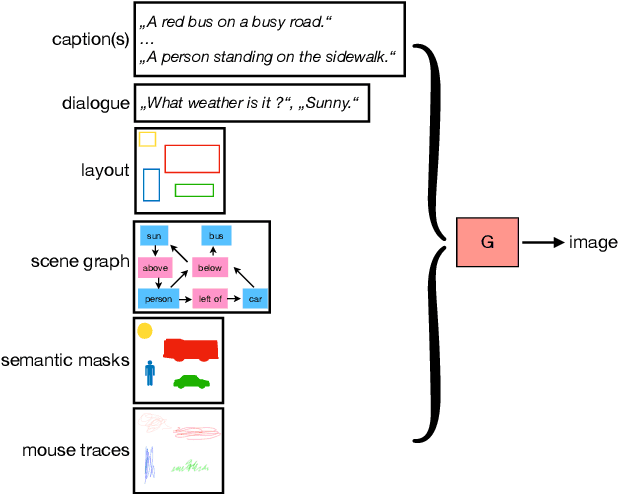

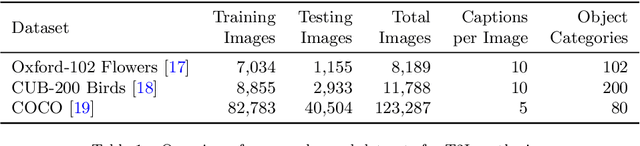

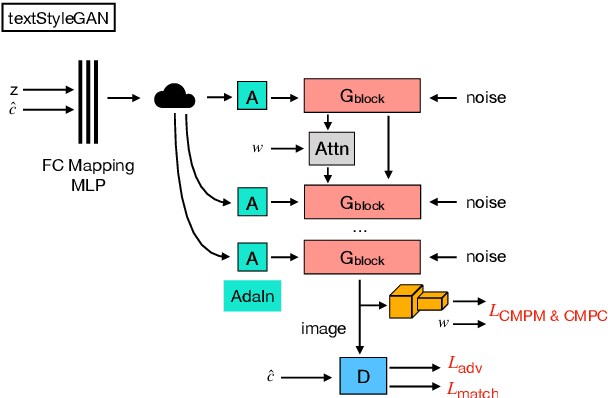

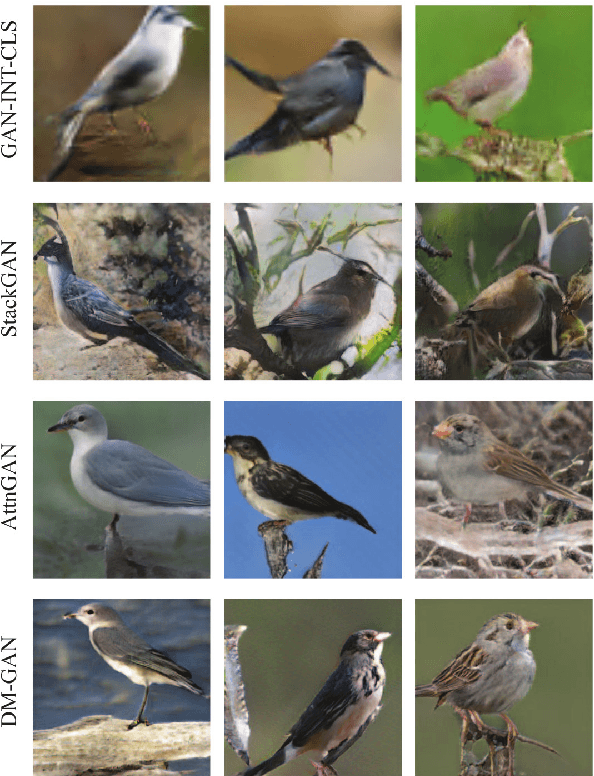

With the advent of generative adversarial networks, synthesizing images from textual descriptions has become an active research area. It is a flexible and intuitive approach to conditional image generation, and recent years have brought significant progress in visual realism, diversity, and semantic alignment. However, the field still faces several challenges that require further research, such as generating high-resolution images with multiple objects and developing suitable, reliable evaluation metrics that correlate with human judgement. In this review, we contextualize the state of the art of adversarial text-to-image synthesis models, trace their development since their inception five years ago, and propose a taxonomy based on the level of supervision. We critically examine current strategies for evaluating text-to-image synthesis models, highlight their shortcomings, and identify new areas of research, ranging from the development of better datasets and evaluation metrics to possible improvements in architectural design and model training. This review complements previous surveys on generative adversarial networks with a focus on text-to-image synthesis, which we believe will help researchers further advance the field.
