Adversarial Imitation Attack

Paper and Code

Mar 31, 2020

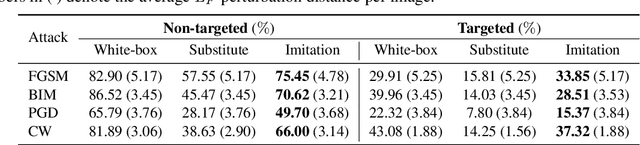

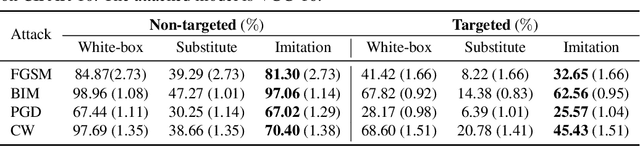

Deep learning models are known to be vulnerable to adversarial examples. A practical adversarial attack should require as little as possible knowledge of attacked models. Current substitute attacks need pre-trained models to generate adversarial examples and their attack success rates heavily rely on the transferability of adversarial examples. Current score-based and decision-based attacks require lots of queries for the attacked models. In this study, we propose a novel adversarial imitation attack. First, it produces a replica of the attacked model by a two-player game like the generative adversarial networks (GANs). The objective of the generative model is to generate examples that lead the imitation model returning different outputs with the attacked model. The objective of the imitation model is to output the same labels with the attacked model under the same inputs. Then, the adversarial examples generated by the imitation model are utilized to fool the attacked model. Compared with the current substitute attacks, imitation attacks can use less training data to produce a replica of the attacked model and improve the transferability of adversarial examples. Experiments demonstrate that our imitation attack requires less training data than the black-box substitute attacks, but achieves an attack success rate close to the white-box attack on unseen data with no query.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge