Adversarial Defense via Data Dependent Activation Function and Total Variation Minimization

Sep 23, 2018

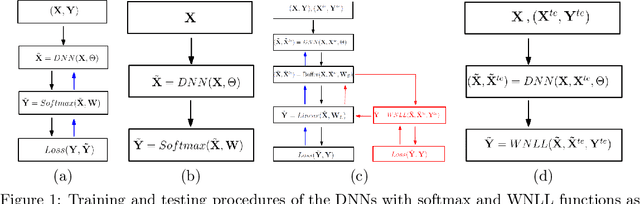

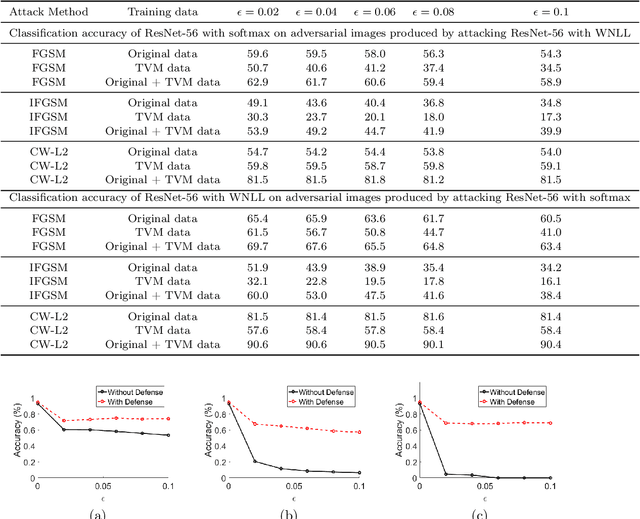

We improve the robustness of deep neural nets to adversarial attacks by using an interpolating function as the output activation. This data-dependent activation function substantially improves both classification accuracy and stability under adversarial perturbations. Combined with total variation minimization of adversarial images and augmented training, under the strongest attack we achieve accuracy improvements of up to 20.6$\%$, 50.7$\%$, and 68.7$\%$ against the fast gradient sign method, iterative fast gradient sign method, and Carlini-Wagner $L_2$ attacks, respectively. Our defense strategy is additive to many existing methods. We give an intuitive explanation of the defense by analyzing the geometry of the feature space. For reproducibility, the code is available at: https://github.com/BaoWangMath/DNN-DataDependentActivation.
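To make the total variation minimization step more concrete, here is a minimal sketch of TV-based image smoothing in Python with NumPy. It is an illustration only: the abstract does not specify the solver or parameters, so the objective used here (a smoothed Rudin-Osher-Fatemi model minimized by plain gradient descent), the function names `total_variation` and `tv_denoise`, and the values of `lam`, `step`, `eps`, and `n_iter` are all assumptions and may differ from what the linked repository does.

```python
import numpy as np


def total_variation(u, eps=0.0):
    """Isotropic total variation of a 2-D image using forward differences."""
    dx = np.diff(u, axis=1, append=u[:, -1:])  # horizontal differences, last column is 0
    dy = np.diff(u, axis=0, append=u[-1:, :])  # vertical differences, last row is 0
    return float(np.sqrt(dx**2 + dy**2 + eps).sum())


def tv_denoise(f, lam=0.1, step=0.1, eps=1e-2, n_iter=100):
    """Gradient descent on 0.5 * ||u - f||^2 + lam * TV_eps(u).

    Hypothetical parameters (not taken from the paper):
      lam    weight of the TV penalty
      step   gradient-descent step size
      eps    smoothing constant that keeps the TV term differentiable
      n_iter number of descent iterations
    """
    f = np.asarray(f, dtype=float)
    u = f.copy()
    for _ in range(n_iter):
        dx = np.diff(u, axis=1, append=u[:, -1:])
        dy = np.diff(u, axis=0, append=u[-1:, :])
        mag = np.sqrt(dx**2 + dy**2 + eps)
        px, py = dx / mag, dy / mag
        # Divergence of (px, py): backward differences, the negative adjoint
        # of the forward-difference operator used above.
        div = (px - np.pad(px, ((0, 0), (1, 0)))[:, :-1]
               + py - np.pad(py, ((1, 0), (0, 0)))[:-1, :])
        # Gradient of the objective is (u - f) - lam * div.
        u -= step * ((u - f) - lam * div)
    return u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.zeros((16, 16))
    clean[4:12, 4:12] = 1.0
    noisy = clean + 0.1 * rng.standard_normal(clean.shape)
    smoothed = tv_denoise(noisy)
    print("TV before:", total_variation(noisy))
    print("TV after: ", total_variation(smoothed))
```

In a defense pipeline of the kind the abstract describes, a smoothing step like this would be applied to a possibly adversarial input before classification, with the aim of removing small high-frequency perturbations while preserving edges. Larger `lam` smooths more aggressively but also removes more image detail.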
