ABIDES-Gym: Gym Environments for Multi-Agent Discrete Event Simulation and Application to Financial Markets

Paper and Code

Oct 27, 2021

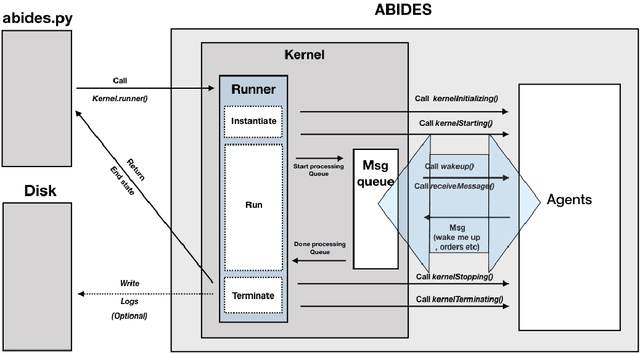

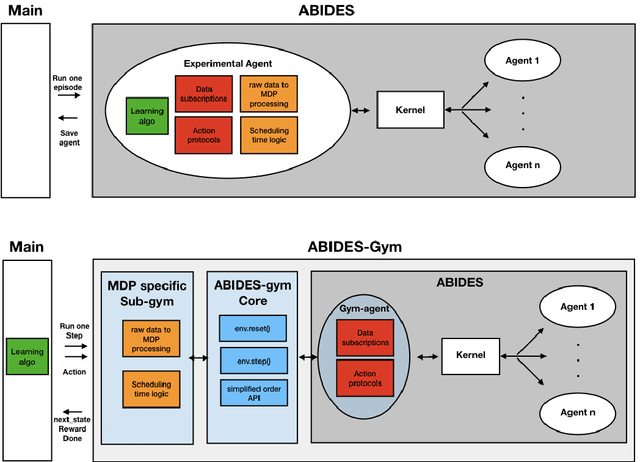

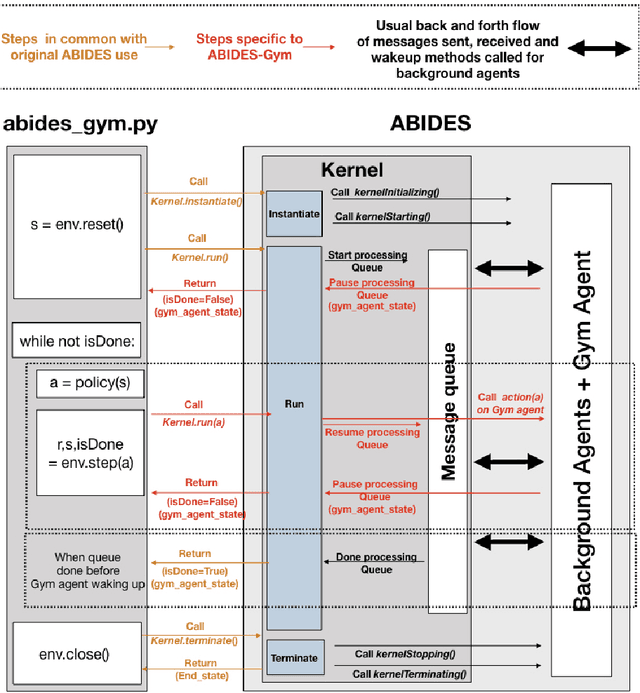

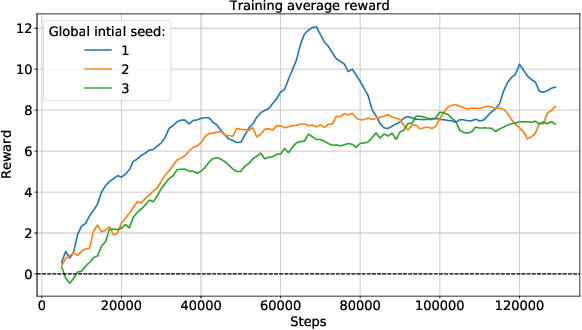

Model-free Reinforcement Learning (RL) requires the ability to sample trajectories by taking actions in the original problem environment or a simulated version of it. Breakthroughs in RL have been largely facilitated by dedicated open-source simulators with easy-to-use frameworks, such as OpenAI Gym and its Atari environments. In this paper we propose applying the OpenAI Gym framework to Discrete Event Multi-Agent Simulation (DEMAS), which advances on discrete event time. We introduce a general technique for wrapping a DEMAS simulator in the Gym framework, describe the technique in detail, and implement it using the simulator ABIDES as a base. We then use ABIDES-Markets, the markets extension of ABIDES, to develop two benchmark financial-market OpenAI Gym environments for training daily investor and execution agents. These two environments pose classic financial problems in which the market responds in a complex, interactive way to the experimental agent's actions.
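The wrapping idea in the abstract can be illustrated with a toy sketch. This is not the ABIDES or ABIDES-Gym API: `ToyEventSimulator`, `ToyMarketEnv`, `run_until`, the price model, and the impact constant are all hypothetical names and assumptions made for illustration. The sketch only shows the general pattern, assuming the classic Gym convention of `reset()` returning an observation and `step(action)` returning `(observation, reward, done, info)`: each `step` applies the agent's action, lets the event queue run until the agent's next wake-up time, and then reports the new state.

```python
import heapq
import random


class ToyEventSimulator:
    """Hypothetical discrete event kernel (not ABIDES): a priority queue of
    timestamped events in which background 'noise' traders move a price."""

    def __init__(self, seed=0):
        self.seed = seed
        self.reset()

    def reset(self):
        self.rng = random.Random(self.seed)  # reseed so episodes are reproducible
        self.now = 0.0
        self.price = 100.0
        self.queue = []
        self._counter = 0  # tie-breaker for events with equal timestamps
        self._schedule(self.rng.expovariate(1.0), "noise")

    def _schedule(self, time, kind):
        heapq.heappush(self.queue, (time, self._counter, kind))
        self._counter += 1

    def run_until(self, wake_time):
        """Process every queued event with a timestamp <= wake_time."""
        while self.queue and self.queue[0][0] <= wake_time:
            self.now, _, kind = heapq.heappop(self.queue)
            if kind == "noise":
                self.price += self.rng.choice([-0.01, 0.01])
                self._schedule(self.now + self.rng.expovariate(1.0), "noise")
        self.now = wake_time


class ToyMarketEnv:
    """Gym-style wrapper (reset/step) around the toy simulator."""

    ACTIONS = {0: -1, 1: 0, 2: 1}  # sell one unit, hold, buy one unit

    def __init__(self, seed=0, step_interval=1.0, horizon=50.0):
        self.sim = ToyEventSimulator(seed)
        self.step_interval = step_interval
        self.horizon = horizon
        self.position = 0
        self.cash = 0.0

    def _observe(self):
        return (self.sim.now, self.sim.price, self.position)

    def reset(self):
        self.sim.reset()
        self.position = 0
        self.cash = 0.0
        return self._observe()

    def step(self, action):
        trade = self.ACTIONS[action]
        value_before = self.cash + self.position * self.sim.price
        # Apply the action at the current price.
        self.position += trade
        self.cash -= trade * self.sim.price
        # Assumed toy price impact, so the market reacts to the agent.
        self.sim.price += 0.005 * trade
        # Hand control back to the simulator until the agent's next wake-up.
        self.sim.run_until(self.sim.now + self.step_interval)
        value_after = self.cash + self.position * self.sim.price
        reward = value_after - value_before  # change in marked-to-market value
        done = self.sim.now >= self.horizon
        return self._observe(), reward, done, {}


def run_episode(env, policy):
    """Roll out one episode; policy maps an observation to an action id."""
    obs = env.reset()
    total_reward, steps, done = 0.0, 0, False
    while not done:
        obs, reward, done, _ = env.step(policy(obs))
        total_reward += reward
        steps += 1
    return total_reward, steps, obs
```

For example, `run_episode(ToyMarketEnv(seed=1), lambda obs: 1)` rolls out a hold-only policy. The design choice worth noting is that the simulator, not the learning loop, owns the clock: the environment exposes fixed wake-up intervals to the agent while any number of background events may fire in between.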
