A Unified RGB-T Saliency Detection Benchmark: Dataset, Baselines, Analysis and A Novel Approach


Jan 11, 2017

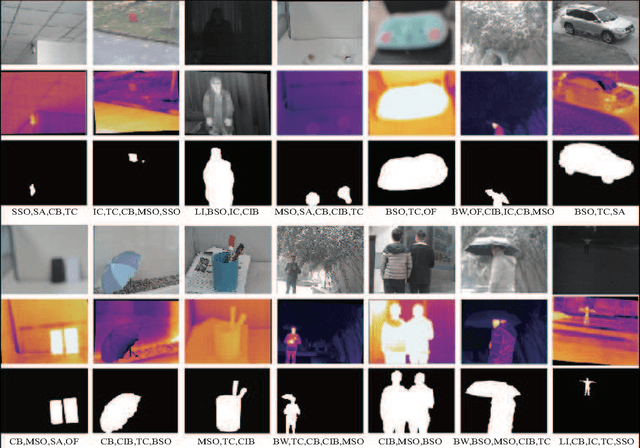

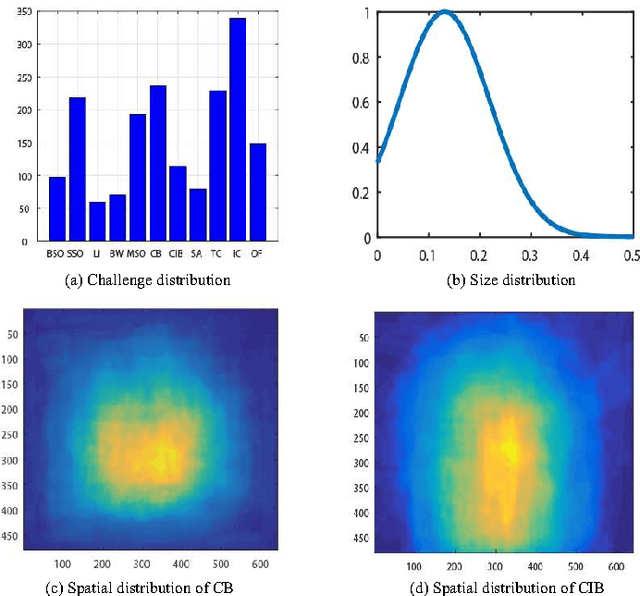

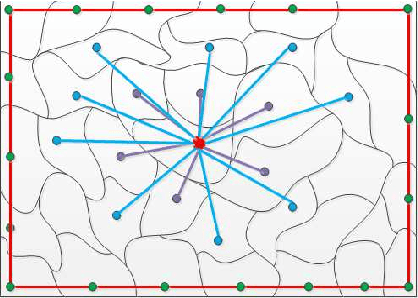

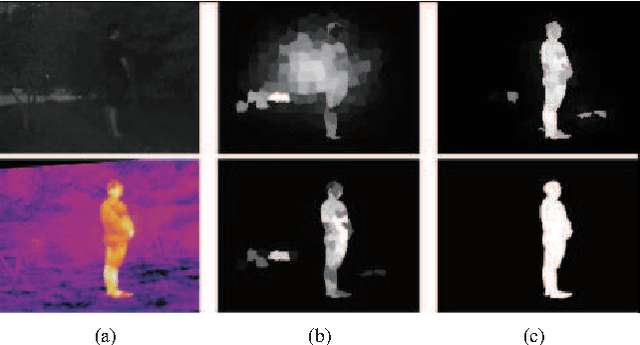

Despite significant progress, image saliency detection remains challenging in complex scenes and environments. Integrating multiple different but complementary cues, such as RGB and thermal (RGB-T) data, may be an effective way to boost saliency detection performance. Research in this direction, however, is limited by the lack of a comprehensive benchmark. This work contributes such an RGB-T image dataset, which includes 821 spatially aligned RGB-T image pairs and their ground truth annotations for saliency detection. The image pairs are highly diverse, recorded under different scenes and environmental conditions, and we annotate 11 challenges on these image pairs to enable challenge-sensitive analysis of different saliency detection algorithms. We also implement 3 kinds of baseline methods with different modality inputs to provide a comprehensive comparison platform. With this benchmark, we propose a novel approach, multi-task manifold ranking with cross-modality consistency, for RGB-T saliency detection. In particular, we introduce a weight for each modality to describe its reliability, and integrate these weights into the graph-based manifold ranking algorithm to achieve adaptive fusion of the different source data. Moreover, we incorporate cross-modality consistency constraints to integrate the different modalities collaboratively. For the optimization, we design an efficient algorithm that iteratively solves several subproblems with closed-form solutions. Extensive experiments against other baseline methods on the newly created benchmark demonstrate the effectiveness of the proposed approach, and we also provide basic insights and potential future research directions for RGB-T saliency detection.
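To make the idea of weighting modalities inside graph-based manifold ranking more concrete, the following is a minimal sketch in plain Python. It is not the approach proposed in this work: it uses the classic manifold ranking iteration f ← αSf + (1 − α)y on a single fused graph, the modality weights are fixed by hand rather than learned, and the cross-modality consistency constraints are omitted entirely. The function names (`fuse_affinities`, `normalize_affinity`, `manifold_rank`), the toy 4-node graphs, and the values of `alpha` and the weights are all illustrative assumptions.

```python
import math


def fuse_affinities(affinities, weights):
    """Weighted average of per-modality affinity matrices.

    Assumption: the weights are fixed inputs here, whereas the abstract
    describes modality weights that are part of the optimization.
    """
    n = len(affinities[0])
    total = float(sum(weights))
    fused = [[0.0] * n for _ in range(n)]
    for W, r in zip(affinities, weights):
        for i in range(n):
            for j in range(n):
                fused[i][j] += (r / total) * W[i][j]
    return fused


def normalize_affinity(W):
    """Symmetric normalization S = D^{-1/2} W D^{-1/2}."""
    n = len(W)
    degrees = [sum(row) for row in W]
    inv_sqrt = [1.0 / math.sqrt(d) if d > 0 else 0.0 for d in degrees]
    return [[inv_sqrt[i] * W[i][j] * inv_sqrt[j] for j in range(n)]
            for i in range(n)]


def manifold_rank(W, y, alpha=0.9, iters=500, tol=1e-10):
    """Classic manifold ranking by fixed-point iteration.

    Repeats f <- alpha * S f + (1 - alpha) * y until the largest change
    in any score drops below tol or the iteration budget is used up.
    """
    S = normalize_affinity(W)
    n = len(y)
    f = [float(v) for v in y]
    for _ in range(iters):
        new_f = [alpha * sum(S[i][j] * f[j] for j in range(n))
                 + (1.0 - alpha) * y[i] for i in range(n)]
        if max(abs(a - b) for a, b in zip(new_f, f)) < tol:
            return new_f
        f = new_f
    return f


# Toy example: 4 graph nodes (e.g. superpixels) connected in a chain,
# with one hypothetical affinity matrix per modality.
W_rgb = [[0.0, 1.0, 0.0, 0.0],
         [1.0, 0.0, 0.2, 0.0],
         [0.0, 0.2, 0.0, 1.0],
         [0.0, 0.0, 1.0, 0.0]]
W_thermal = [[0.0, 0.5, 0.0, 0.0],
             [0.5, 0.0, 0.8, 0.0],
             [0.0, 0.8, 0.0, 0.5],
             [0.0, 0.0, 0.5, 0.0]]

fused = fuse_affinities([W_rgb, W_thermal], weights=[0.7, 0.3])
query = [1.0, 0.0, 0.0, 0.0]  # node 0 is the only query (seed) node
scores = manifold_rank(fused, query)
print(scores)
```

Raising the weight of one modality makes its affinities dominate the fused graph, which is the intuition behind describing modality reliability with a weight. In this sketch the weights are chosen once up front and never updated.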
