A Unified Multi-Task Semantic Communication System for Multimodal Data


Sep 16, 2022

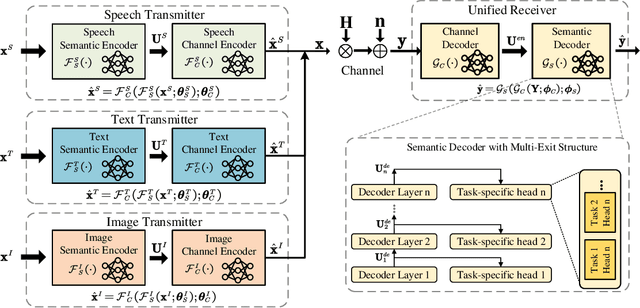

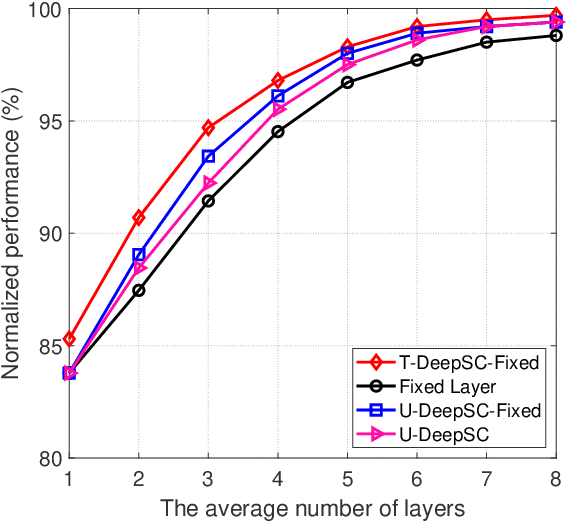

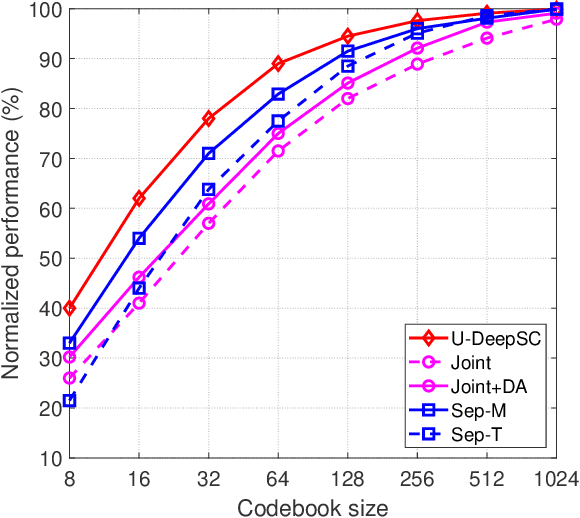

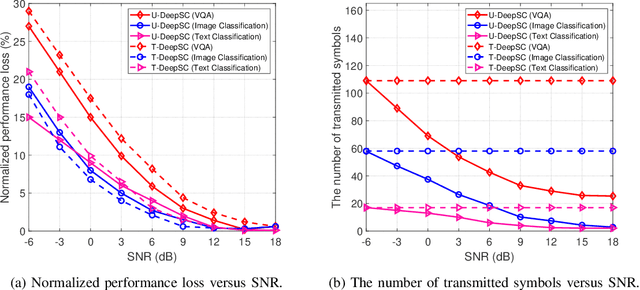

Task-oriented semantic communication has achieved significant performance gains. However, the model must be updated whenever the task changes, or multiple models must be stored to serve different tasks. To address this issue, we develop a unified deep learning enabled semantic communication system (U-DeepSC), in which a single end-to-end framework serves many different tasks across multiple modalities. Because difficulty varies across tasks, different tasks require different numbers of neural network layers. We therefore develop a multi-exit architecture in U-DeepSC that provides early-exit results for relatively simple tasks. To reduce the transmission overhead, we design a unified codebook for feature representation that serves multiple tasks, so that only the codebook indices of the task-specific features are transmitted. Moreover, because the number of required features varies from task to task, we propose a dimension-wise dynamic scheme that adjusts the number of transmitted indices for each task. This dynamic scheme also adapts the number of transmitted features to the channel conditions to optimize transmission efficiency. Simulation results show that the proposed U-DeepSC achieves performance comparable to that of task-oriented semantic communication systems designed for a specific task, with a significant reduction in both transmission overhead and model size.
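To make the codebook idea concrete, the following is a minimal sketch of index-based transmission, not the U-DeepSC implementation. It assumes a small hand-built codebook (the paper's codebook is learned), nearest-neighbor matching under squared Euclidean distance, and a hypothetical `num_indices` parameter that simply keeps the first few feature vectors as a stand-in for the dimension-wise dynamic scheme, whose actual selection rule is not given in the abstract. All function names are illustrative.

```python
def nearest_index(vec, codebook):
    """Return the index of the codeword closest to vec (squared Euclidean distance)."""
    best, best_dist = 0, float("inf")
    for i, word in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(vec, word))
        if dist < best_dist:
            best, best_dist = i, dist
    return best


def encode(features, codebook, num_indices):
    """Transmitter side: map the first num_indices feature vectors to codebook indices.

    Assumption: truncation stands in for the task- and channel-dependent
    selection described in the abstract.
    """
    return [nearest_index(f, codebook) for f in features[:num_indices]]


def decode(indices, codebook):
    """Receiver side: look the indices up in the shared codebook."""
    return [list(codebook[i]) for i in indices]


# Hypothetical shared codebook: 16 distinct 4-dimensional codewords.
codebook = [[float(i), float(i % 4), float(i // 4), 0.0] for i in range(16)]

# Feature vectors that sit close to codewords 3, 7, 7 and 12.
features = [[c + 0.1 for c in codebook[k]] for k in (3, 7, 7, 12)]

# A simpler task sends fewer indices than a harder one.
indices_simple = encode(features, codebook, num_indices=2)
indices_hard = encode(features, codebook, num_indices=4)
recovered = decode(indices_hard, codebook)

print(indices_simple, indices_hard)
```

Only the integer indices cross the channel, so the payload per feature is roughly log2 of the codebook size in bits (4 bits here) rather than a full floating-point vector. The receiver recovers the quantized features, not the exact originals.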
