A Two-stream End-to-End Deep Learning Network for Recognizing Atypical Visual Attention in Autism Spectrum Disorder

Paper and Code

Nov 26, 2019

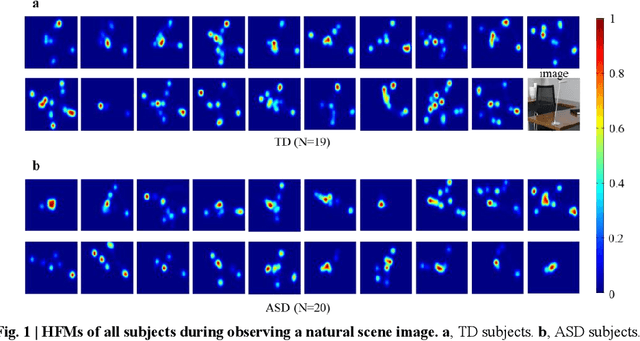

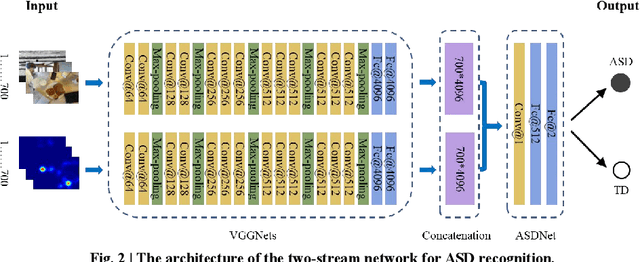

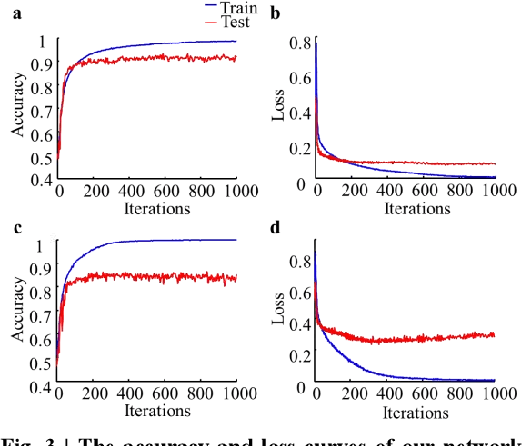

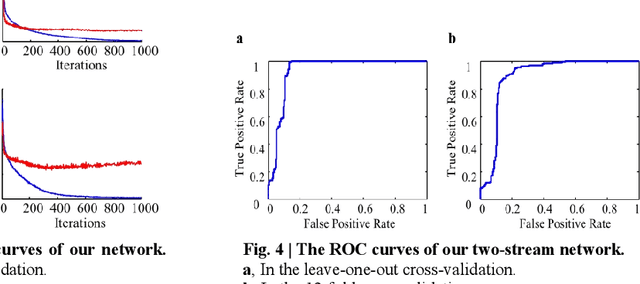

Eye movements have been widely investigated to study atypical visual attention in Autism Spectrum Disorder (ASD). Most of these studies have focused on a limited set of eye movement features, using statistical comparisons between ASD and Typically Developing (TD) groups, which makes it difficult to accurately separate ASD from TD at the individual level. Deep learning has been highly successful in overcoming this issue by automatically extracting features important for classification through a data-driven learning process. However, there is still no end-to-end deep learning framework for recognizing abnormal attention in ASD. In this study, we developed a novel two-stream deep learning network for this recognition based on 700 images and the corresponding eye movement patterns of ASD and TD, and obtained an accuracy of 0.95, which was higher than the previous state-of-the-art. We next characterized the contributions to the classification at the single-image level and the non-linear integration of this single-image-level information during the classification. Moreover, we identified a group of pixel-level visual features within these images that had greater impacts on the classification. Together, this two-stream deep learning network provides a novel and powerful tool to recognize and understand abnormal visual attention in ASD.
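To make the two-stream idea concrete, the following is a minimal sketch of a generic late-fusion classifier: one stream encodes features of the viewed image, the other encodes features of the eye movement pattern, and a shared head maps the concatenated representation to a probability. It is an illustration only and not the architecture of the paper, which the abstract does not specify. Every name here (`dense`, `init_layer`, `make_params`, `two_stream_forward`, `accuracy`), the layer sizes, and the random untrained weights are assumptions.

```python
import math
import random


def dense(x, weights, bias):
    """Fully connected layer followed by a ReLU non-linearity."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases (hypothetical, untrained)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def make_params(n_img, n_gaze, n_hidden, seed=0):
    """Build parameters for both streams and the fusion head."""
    rng = random.Random(seed)
    head_w = [rng.uniform(-0.5, 0.5) for _ in range(2 * n_hidden)]
    return {
        "image": init_layer(n_img, n_hidden, rng),
        "gaze": init_layer(n_gaze, n_hidden, rng),
        "head": (head_w, 0.0),
    }


def two_stream_forward(image_feats, gaze_feats, params):
    """Encode each input in its own stream, concatenate, and classify."""
    h_img = dense(image_feats, *params["image"])   # image stream
    h_gaze = dense(gaze_feats, *params["gaze"])    # eye movement stream
    fused = h_img + h_gaze                         # list concatenation (late fusion)
    head_w, head_b = params["head"]
    logit = sum(w * h for w, h in zip(head_w, fused)) + head_b
    return 1.0 / (1.0 + math.exp(-logit))          # sigmoid: assumed P(ASD)


def accuracy(probs, labels, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the label."""
    correct = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
    return correct / len(labels)


params = make_params(n_img=4, n_gaze=3, n_hidden=5)
prob = two_stream_forward([0.2, 0.1, 0.7, 0.4], [0.5, 0.3, 0.9], params)
```

An end-to-end network would learn both streams and the head jointly from raw images and fixation data; the sketch only shows the forward pass and how an individual-level accuracy such as the reported 0.95 is computed from thresholded predictions.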
