A New Benchmark for Evaluation of Cross-Domain Few-Shot Learning

Paper and Code

Dec 16, 2019

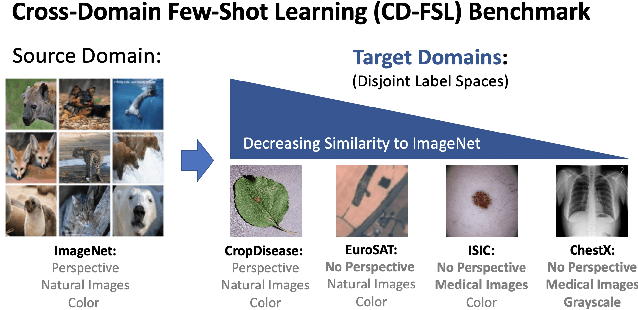

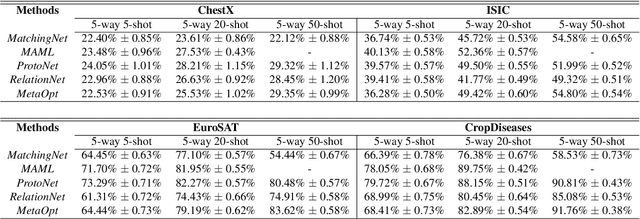

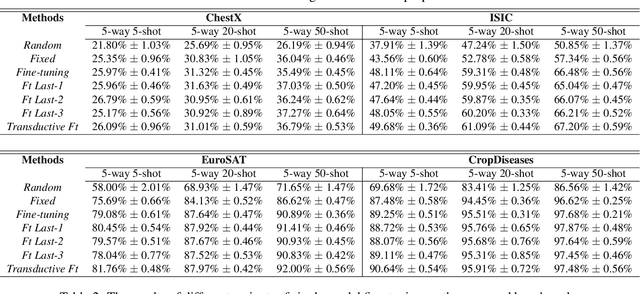

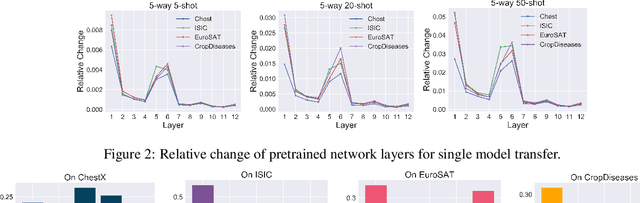

Recent progress on few-shot learning has largely relied on annotated data for meta-learning, sampled from the same domain as the novel classes. However, in many applications, collecting data for meta-learning is infeasible or impossible. This leads to the cross-domain few-shot learning problem, where a large domain shift exists between base and novel classes. Although some preliminary investigation of few-shot methods under domain shift exists, a standard benchmark for cross-domain few-shot learning has not yet been established. In this paper, we propose the cross-domain few-shot learning (CD-FSL) benchmark, consisting of images from diverse domains with varying similarity to ImageNet, including crop disease images, satellite images, and medical images. Extensive experiments on the proposed benchmark compare an array of state-of-the-art meta-learning and transfer learning approaches, including various forms of single-model fine-tuning and ensemble learning. The results demonstrate that current meta-learning methods underperform simple fine-tuning by 12.8% in average accuracy. The accuracy of all methods tends to correlate with dataset similarity to ImageNet. In addition, the relative performance gain with an increasing number of shots is greater for transfer methods than for meta-learning. Finally, we demonstrate that transferring from multiple pretrained models achieves the best performance, with accuracy improvements of 14.9% and 1.9% over the best meta-learning and single-model fine-tuning approaches, respectively. In summary, the proposed benchmark serves as a challenging platform to guide future research on cross-domain few-shot learning due to its spectrum of diversity and coverage.
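Few-shot methods such as those compared in the abstract are typically evaluated on sampled episodes: a handful of labeled support images per novel class, plus held-out query images from the same classes. The following is a minimal sketch of that sampling step only, not the benchmark's own code. The function name `sample_episode`, its parameters, and the default values for the number of classes, shots, and queries are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way=5, k_shot=5, n_query=15, seed=None):
    """Sample one few-shot episode from a list of class labels.

    Hypothetical helper for illustration. Returns (support, query), each a
    list of (dataset_index, episode_label) pairs, where episode_label is in
    range(n_way). Support and query indices never overlap.
    """
    rng = random.Random(seed)

    # Group dataset indices by their class label.
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    # Only classes with enough examples for both support and query qualify.
    eligible = sorted(c for c, idxs in by_class.items()
                      if len(idxs) >= k_shot + n_query)
    if len(eligible) < n_way:
        raise ValueError("not enough classes with sufficient examples")

    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for episode_label, c in enumerate(classes):
        picked = rng.sample(by_class[c], k_shot + n_query)
        support += [(i, episode_label) for i in picked[:k_shot]]
        query += [(i, episode_label) for i in picked[k_shot:]]
    return support, query
```

A method under test would be adapted on the support pairs (for example by fine-tuning a classifier) and scored on the query pairs, with accuracy averaged over many such episodes.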
