A Multi-Hypothesis Approach to Pose Ambiguity in Object-Based SLAM


Aug 03, 2021

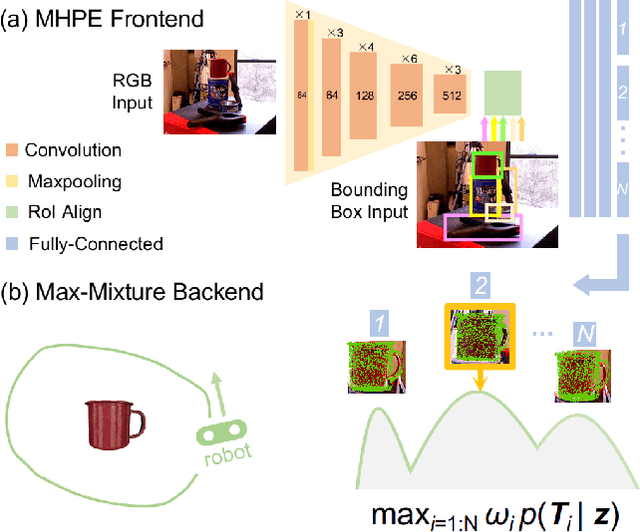

In object-based Simultaneous Localization and Mapping (SLAM), 6D object poses offer a compact representation of landmark geometry that is useful for downstream planning and manipulation tasks. However, measurement ambiguity arises because objects may exhibit complete or partial shape symmetries (e.g., due to occlusion), making it difficult or impossible to generate a single consistent object pose estimate. One way to counteract this ambiguity is to generate multiple pose candidates. In this paper, we develop a novel approach that enables an object-based SLAM system to reason about multiple pose hypotheses for an object and to synthesize this locally ambiguous information into a globally consistent formulation for robot and landmark pose estimation. In particular, we (1) present a learned pose estimation network that provides multiple hypotheses about the 6D pose of an object, and (2) treat the output of this network as components of a mixture model in order to incorporate pose predictions into a SLAM system, which, over successive observations, recovers a globally consistent set of robot and object (landmark) pose estimates. We evaluate our approach on the popular YCB-Video Dataset and on a simulated video featuring YCB objects. Experiments demonstrate that our approach improves the robustness of object-based SLAM in the face of object pose ambiguity.
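To make the mixture-model idea concrete, the following is a minimal toy sketch, not the paper's implementation. It assumes a one-dimensional problem (a single object yaw angle instead of a full 6D pose), a known robot trajectory, Gaussian mixture components with a shared standard deviation `sigma`, and a brute-force grid search standing in for the SLAM back end. All function names, weights, and measurement values are hypothetical and chosen only for illustration.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def mixture_log_likelihood(yaw, hypotheses, weights, sigma):
    """Log of an (unnormalized) Gaussian mixture evaluated at `yaw`.

    Each hypothesis is one candidate yaw; each weight is its mixture weight.
    """
    total = 0.0
    for mu, w in zip(hypotheses, weights):
        err = wrap(yaw - mu)
        total += w * math.exp(-0.5 * (err / sigma) ** 2)
    # Small constant guards against log(0) if every component underflows.
    return math.log(total + 1e-300)


def fuse_observations(observations, sigma=0.1, steps=3600):
    """Grid search for the yaw that maximizes the summed log-likelihood.

    Assumption: a grid search replaces the nonlinear optimizer that a real
    SLAM system would use over robot and landmark poses jointly.
    """
    best_yaw, best_score = 0.0, -math.inf
    for i in range(steps):
        yaw = -math.pi + 2.0 * math.pi * i / steps
        score = sum(
            mixture_log_likelihood(yaw, hyps, wts, sigma)
            for hyps, wts in observations
        )
        if score > best_score:
            best_yaw, best_score = yaw, score
    return best_yaw


# Hypothetical measurements: (yaw hypotheses, mixture weights) per frame.
observations = [
    ([0.50, 0.50 + math.pi], [0.5, 0.5]),  # symmetric view: two equally likely yaws
    ([0.52, 0.52 - math.pi], [0.5, 0.5]),  # still ambiguous
    ([0.48, 2.00], [0.7, 0.3]),            # a different view yields a different alternative
]

estimate = fuse_observations(observations)
print(f"fused yaw estimate: {estimate:.3f} rad")
```

In this toy setup, no single frame identifies the yaw, but only one hypothesis is supported by every frame, so the summed log-likelihood peaks near it. This illustrates, under the stated assumptions, how locally ambiguous predictions can still yield a globally consistent estimate over successive observations.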
