A geometry-aware deep network for depth estimation in monocular endoscopy


Apr 20, 2023

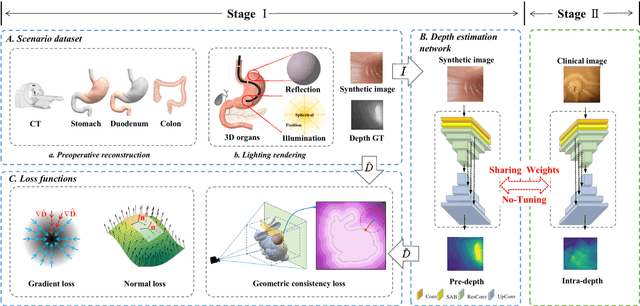

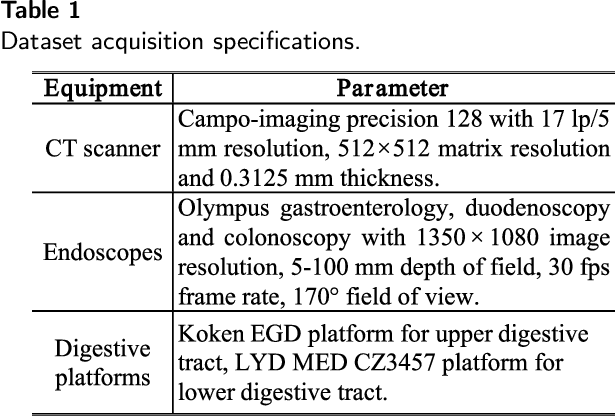

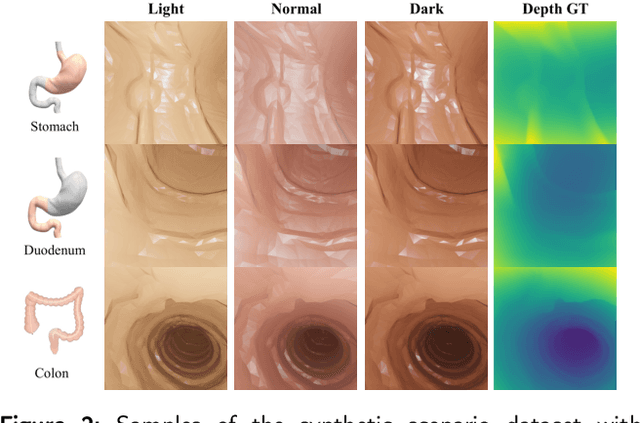

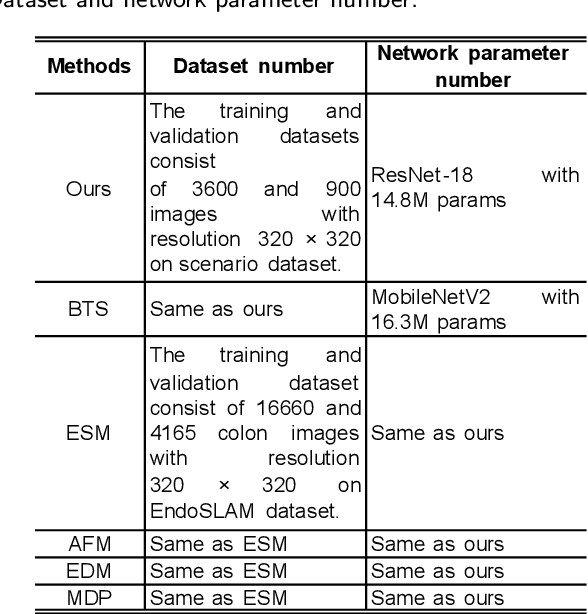

Monocular depth estimation is critical for endoscopists to perform spatial perception and 3D navigation of surgical sites. However, most existing methods ignore geometric structural consistency, which inevitably degrades performance and distorts the 3D reconstruction. To address this issue, we introduce a gradient loss that penalizes ambiguous edge fluctuations around stepped edge structures and a normal loss that explicitly expresses sensitivity to the small structures that occur frequently, and we propose a geometric consistency loss that spreads spatial information across the sample grids to constrain the global geometry of anatomical structures. In addition, we develop a synthetic RGB-Depth dataset that captures anatomical structures under reflections and illumination variations. The proposed method is extensively validated across different datasets and clinical images and achieves mean RMSE values of 0.066 (stomach), 0.029 (small intestine), and 0.139 (colon) on the EndoSLAM dataset. In generalization tests on the ColonDepth dataset, the proposed method achieves mean RMSE values of 12.604 (T1-L1), 9.930 (T2-L2), and 13.893 (T3-L3). The experimental results show that our method outperforms previous state-of-the-art competitors and generates more consistent depth maps and more plausible anatomical structures. The quality of intraoperative 3D structure perception from endoscopic videos achieved by the proposed method meets the accuracy requirements of video-CT registration algorithms for endoscopic navigation. The dataset and the source code will be available at https://github.com/YYM-SIA/LINGMI-MR.
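To make the loss terms named above more concrete, the following is a minimal sketch of an RMSE metric, a gradient loss, and a normal loss on small depth maps stored as nested lists. The abstract does not give the exact formulations, so everything here is an assumption for illustration: the function names, the forward-difference gradients, the L1 form of the gradient term, and the cosine form of the normal term with normals taken as (-dz/dx, -dz/dy, 1). The geometric consistency loss is left out because the abstract does not specify it in enough detail.

```python
import math


def rmse(pred, gt):
    """Root-mean-square error between two equally sized 2D depth maps."""
    sq = [(p - g) ** 2 for prow, grow in zip(pred, gt) for p, g in zip(prow, grow)]
    return math.sqrt(sum(sq) / len(sq))


def gradients(depth):
    """Forward differences (dx, dy), each of shape (H-1, W-1)."""
    h, w = len(depth), len(depth[0])
    dx = [[depth[i][j + 1] - depth[i][j] for j in range(w - 1)] for i in range(h - 1)]
    dy = [[depth[i + 1][j] - depth[i][j] for j in range(w - 1)] for i in range(h - 1)]
    return dx, dy


def gradient_loss(pred, gt):
    """Mean L1 difference of depth gradients (assumed form, not the paper's)."""
    pdx, pdy = gradients(pred)
    gdx, gdy = gradients(gt)
    total, count = 0.0, 0
    for i in range(len(pdx)):
        for j in range(len(pdx[0])):
            total += abs(pdx[i][j] - gdx[i][j]) + abs(pdy[i][j] - gdy[i][j])
            count += 1
    return total / count


def normal_loss(pred, gt):
    """Mean of (1 - cosine similarity) between per-pixel surface normals.

    Normals are approximated as (-dx, -dy, 1); this is an assumption.
    """
    pdx, pdy = gradients(pred)
    gdx, gdy = gradients(gt)
    total, count = 0.0, 0
    for i in range(len(pdx)):
        for j in range(len(pdx[0])):
            a = (-pdx[i][j], -pdy[i][j], 1.0)
            b = (-gdx[i][j], -gdy[i][j], 1.0)
            dot = sum(x * y for x, y in zip(a, b))
            norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
            total += 1.0 - dot / norm
            count += 1
    return total / count


# Toy example: a tilted plane and the same plane shifted by a constant offset.
gt = [[0.0, 1.0, 2.0], [1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]
shifted = [[v + 0.5 for v in row] for row in gt]
flat = [[0.0] * 3 for _ in range(3)]

print(rmse(shifted, gt), gradient_loss(shifted, gt), normal_loss(shifted, gt))
print(rmse(flat, gt), gradient_loss(flat, gt), normal_loss(flat, gt))
```

In this toy case the shifted plane has a nonzero RMSE but essentially zero gradient and normal terms, whereas the flat map is penalized by all three. Under the assumed definitions, this shows how the gradient and normal terms respond to errors in local shape rather than to a constant depth offset.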
