Ziyue Zou

Graph Neural Network-State Predictive Information Bottleneck (GNN-SPIB) approach for learning molecular thermodynamics and kinetics

Sep 18, 2024

Abstract: Molecular dynamics simulations offer detailed insights into atomic motions but face timescale limitations. Enhanced sampling methods have addressed these challenges but even with machine learning, they often rely on pre-selected expert-based features. In this work, we present the Graph Neural Network-State Predictive Information Bottleneck (GNN-SPIB) framework, which combines graph neural networks and the State Predictive Information Bottleneck to automatically learn low-dimensional representations directly from atomic coordinates. Tested on three benchmark systems, our approach predicts essential structural, thermodynamic and kinetic information for slow processes, demonstrating robustness across diverse systems. The method shows promise for complex systems, enabling effective enhanced sampling without requiring pre-defined reaction coordinates or input features.

Enhanced sampling of Crystal Nucleation with Graph Representation Learnt Variables

Oct 11, 2023

Abstract: In this study, we present a graph neural network-based learning approach using an autoencoder setup to derive low-dimensional variables from features observed in experimental crystal structures. These variables are then biased in enhanced sampling to observe state-to-state transitions and reliable thermodynamic weights. Our approach uses simple convolution and pooling methods. To verify the effectiveness of our protocol, we examined the nucleation of various allotropes and polymorphs of iron and glycine from their molten states. Our graph latent variables when biased in well-tempered metadynamics consistently show transitions between states and achieve accurate free energy calculations in agreement with experiments, both of which are indicators of dependable sampling. This underscores the strength and promise of our graph neural net variables for improved sampling. The protocol shown here should be applicable for other systems and with other sampling methods.

Enhanced Sampling with Machine Learning: A Review

Jun 16, 2023

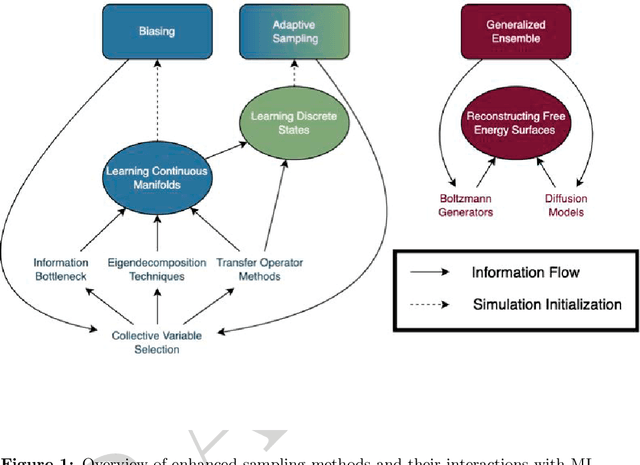

Abstract: Molecular dynamics (MD) enables the study of physical systems with excellent spatiotemporal resolution but suffers from severe time-scale limitations. To address this, enhanced sampling methods have been developed to improve exploration of configurational space. However, implementing these is challenging and requires domain expertise. In recent years, integration of machine learning (ML) techniques in different domains has shown promise, prompting their adoption in enhanced sampling as well. Although ML is often employed in various fields primarily due to its data-driven nature, its integration with enhanced sampling is more natural with many common underlying synergies. This review explores the merging of ML and enhanced MD by presenting different shared viewpoints. It offers a comprehensive overview of this rapidly evolving field, which can be difficult to stay updated on. We highlight successful strategies like dimensionality reduction, reinforcement learning, and flow-based methods. Finally, we discuss open problems at the exciting ML-enhanced MD interface.
