Zihao Ai

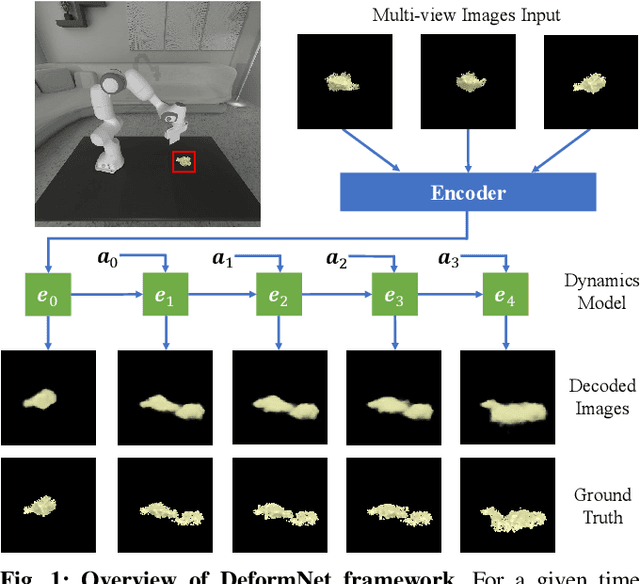

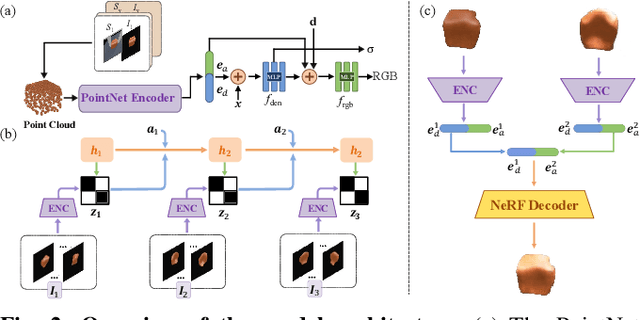

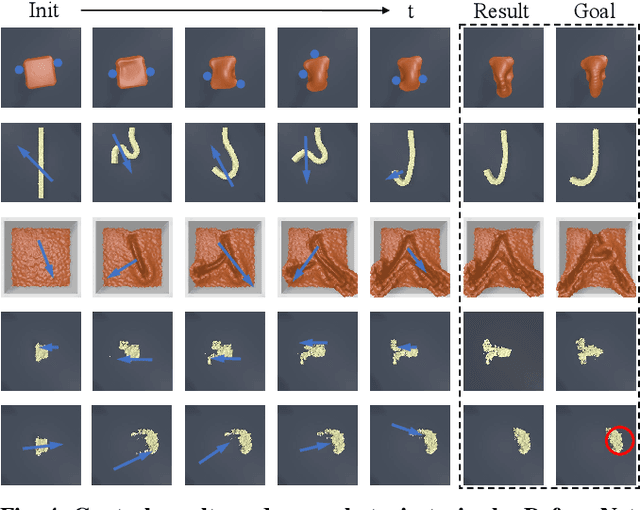

DeformNet: Latent Space Modeling and Dynamics Prediction for Deformable Object Manipulation

Feb 12, 2024

Abstract: Manipulating deformable objects is a ubiquitous task in household environments, demanding adequate representation and accurate dynamics prediction due to the objects' infinite degrees of freedom. This work proposes DeformNet, which utilizes latent space modeling with a learned 3D representation model to tackle these challenges effectively. The proposed representation model combines a PointNet encoder and a conditional neural radiance field (NeRF), facilitating a thorough acquisition of object deformations and variations in lighting conditions. To model the complex dynamics, we employ a recurrent state-space model (RSSM) that accurately predicts the transformation of the latent representation over time. Extensive simulation experiments with diverse objectives demonstrate the generalization capabilities of DeformNet for various deformable object manipulation tasks, even in the presence of previously unseen goals. Finally, we deploy DeformNet on an actual UR5 robotic arm to demonstrate its capability in real-world scenarios.
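To make the pipeline described in the abstract more concrete, the following is a minimal sketch, not the authors' implementation. It illustrates two of the named ideas under strong simplifying assumptions: a PointNet-style encoder (one shared per-point layer followed by max-pooling, which makes the latent independent of point order) and a deterministic recurrent rollout of that latent under a sequence of actions. The conditional NeRF decoder and the stochastic part of an RSSM are omitted. All names, dimensions, and the random untrained weights are hypothetical.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Random weight matrix as a list of rows (illustrative, untrained)."""
    scale = 1.0 / math.sqrt(cols)
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(W, x):
    """Matrix-vector product for list-of-rows matrices."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def encode_points(points, W):
    """PointNet-style encoder: shared per-point layer + ReLU, then max-pool over points."""
    feats = [[max(0.0, f) for f in matvec(W, p)] for p in points]
    return [max(col) for col in zip(*feats)]


def rollout(z0, actions, W_h, W_z, W_a, W_out):
    """Deterministic recurrent latent rollout (a simplified stand-in for an RSSM).

    h_t = tanh(W_h h_{t-1} + W_z z_t + W_a a_t),  z_{t+1} = W_out h_t
    """
    h = [0.0] * len(W_h)
    z = z0
    latents = []
    for a in actions:
        pre = [hh + zz + aa for hh, zz, aa in
               zip(matvec(W_h, h), matvec(W_z, z), matvec(W_a, a))]
        h = [math.tanh(p) for p in pre]
        z = matvec(W_out, h)
        latents.append(z)
    return latents


# Hypothetical sizes chosen only for illustration.
rng = random.Random(0)
LATENT, HIDDEN, ACTION = 8, 16, 4
W_enc = make_matrix(LATENT, 3, rng)
W_h = make_matrix(HIDDEN, HIDDEN, rng)
W_z = make_matrix(HIDDEN, LATENT, rng)
W_a = make_matrix(HIDDEN, ACTION, rng)
W_out = make_matrix(LATENT, HIDDEN, rng)

# A synthetic point cloud of 64 xyz points and a 5-step action sequence.
cloud = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(64)]
z0 = encode_points(cloud, W_enc)
actions = [[rng.uniform(-1, 1) for _ in range(ACTION)] for _ in range(5)]
predicted = rollout(z0, actions, W_h, W_z, W_a, W_out)
```

In a planning setting, a rollout of this kind would typically be evaluated for many candidate action sequences and the predicted latents compared against a goal latent; how DeformNet does this is not specified in the abstract.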
