Zhongxi Zheng

Handling Noisy Labels via One-Step Abductive Multi-Target Learning

Nov 25, 2020

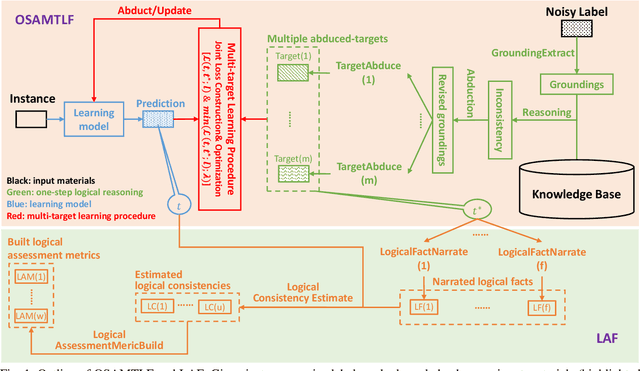

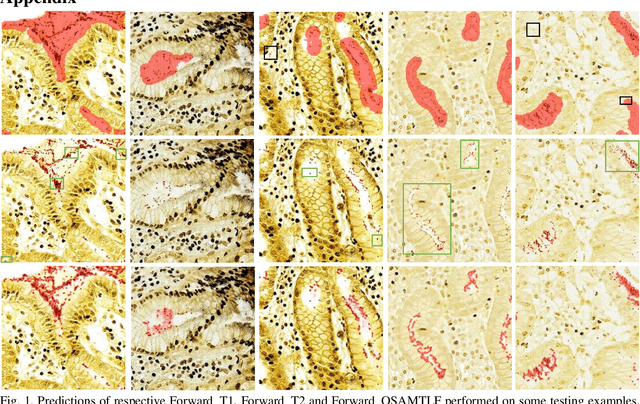

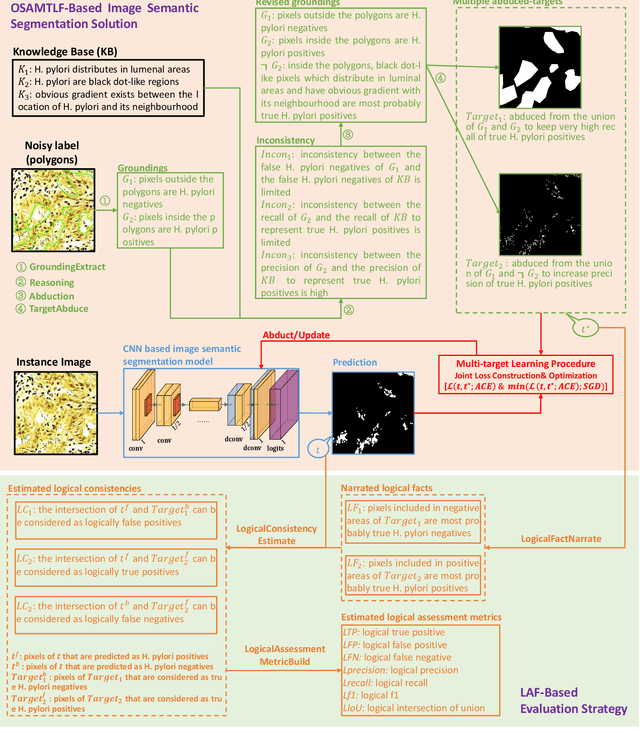

Abstract: Learning from noisy labels is an important concern because accurate ground-truth labels are unavailable in many real-world scenarios. In practice, various approaches to this problem first correct potentially noisy-labeled instances and then update the predictive model with information from those corrections. However, in specific areas, such as medical histopathology whole slide image analysis (MHWSIA), it is often difficult or even impossible for experts to manually produce noise-free ground-truth labels, which leads to labels with heavy noise. This situation raises two harder problems: 1) approaches that correct potentially noisy-labeled instances are limited by the heavy noise in the labels; and 2) the appropriate evaluation strategy for validation/testing is unclear because noise-free ground-truth labels are so difficult to collect. In this paper, we focus on alleviating these two problems. For problem 1), we present a one-step abductive multi-target learning framework (OSAMTLF) that imposes one-step logical reasoning upon machine learning via a multi-target learning procedure, abducting the predictions of the learning model to be subject to our prior knowledge. For problem 2), we propose a logical assessment formula (LAF) that evaluates the logical rationality of an approach's outputs by estimating the consistency between the predictions of the learning model and the logical facts narrated from the results of the one-step logical reasoning of OSAMTLF. Applying OSAMTLF and LAF to the Helicobacter pylori (H. pylori) segmentation task in MHWSIA, we show that OSAMTLF is able to abduct the machine learning model to achieve logically more rational predictions, which is beyond the capability of various state-of-the-art approaches for learning from noisy labels.

Moderately supervised learning: definition and framework

Aug 27, 2020

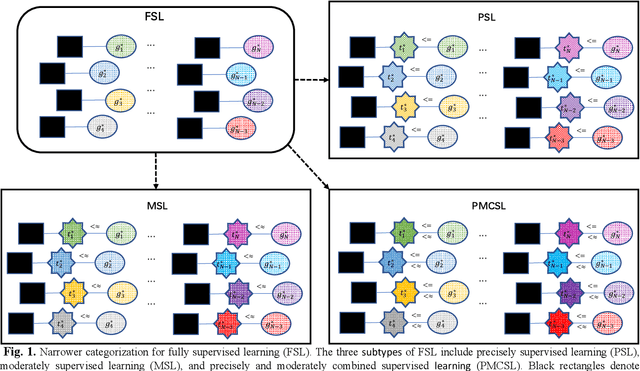

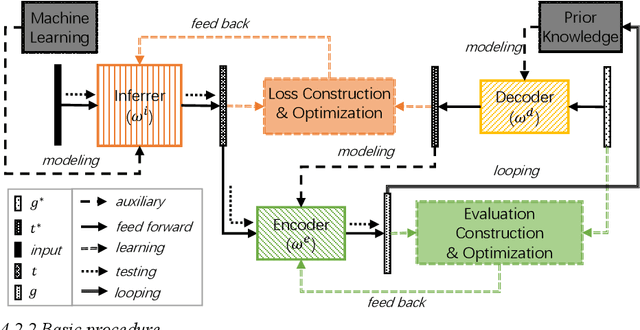

Abstract: Supervised learning (SL) has achieved remarkable success in numerous artificial intelligence applications. In the current literature, SL is roughly categorized into fully supervised learning (FSL) and weakly supervised learning (WSL) according to the properties of the ground-truth labels prepared for a training data set. However, solutions for various FSL tasks have shown that the given ground-truth labels are not always learnable, and that the target transformation from the given ground-truth labels to learnable targets can significantly affect the performance of the final FSL solutions. Because it does not consider the properties of this target transformation, the rough FSL category conceals some details that can be critical to building optimal solutions for specific FSL tasks. Thus, it is desirable to reveal these details. This article attempts to achieve this goal by expanding the categorization of FSL and investigating the subtype that plays the central role in FSL. Taking into consideration the properties of the target transformation from the given ground-truth labels to learnable targets, we first categorize FSL into three narrower subtypes. Then, we focus on the subtype moderately supervised learning (MSL). MSL concerns the situation where the given ground-truth labels are ideal but, because their annotation is simple, careful designs are required to transform them into learnable targets. From the perspectives of definition and framework, we comprehensively illustrate MSL to reveal what details are concealed by the roughness of the FSL category. Finally, discussions of the revealed details suggest that MSL should be given more attention.
