Zhiyong Tang

A novel gesture interaction control method for rehabilitation lower extremity exoskeleton

Apr 02, 2025

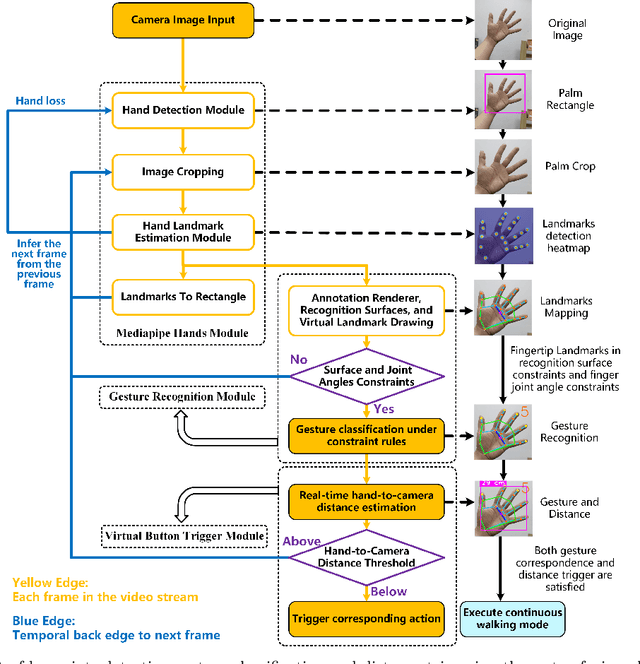

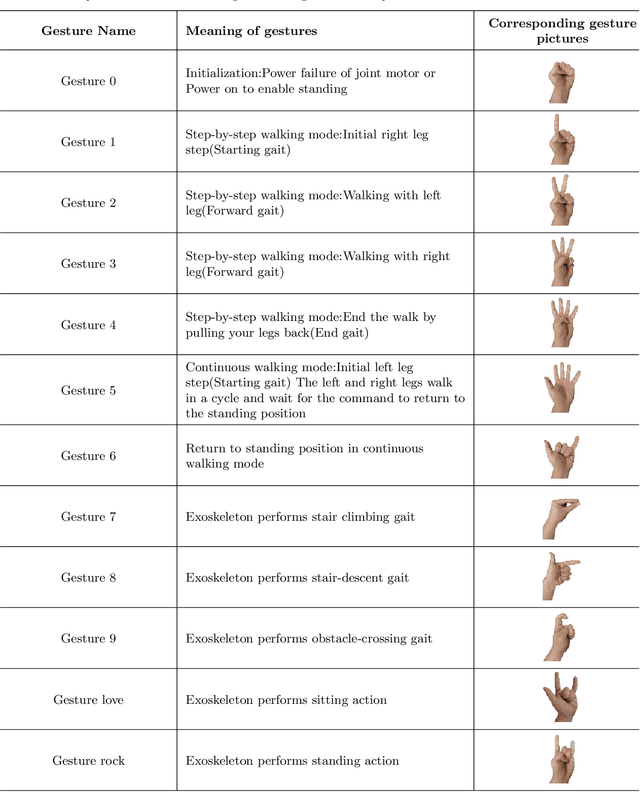

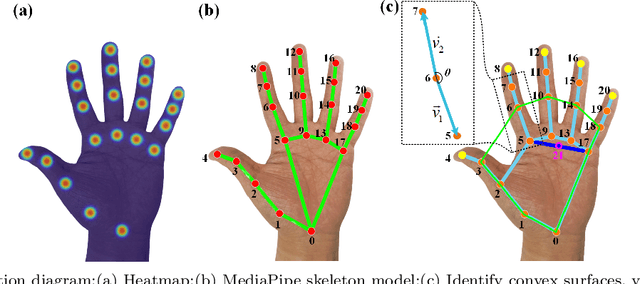

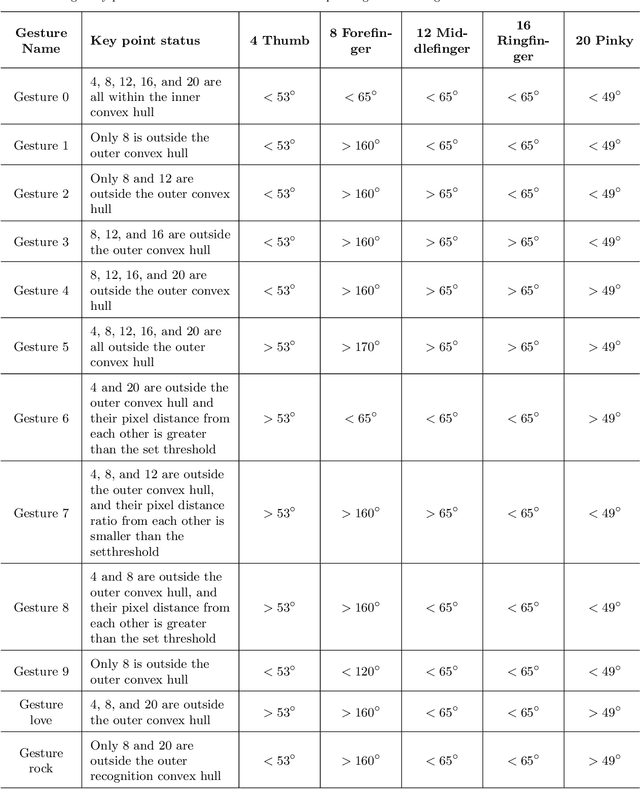

Abstract: With the rapid development of Rehabilitation Lower Extremity Robotic Exoskeletons (RLEEX) technology, significant advancements have been made in Human-Robot Interaction (HRI) methods. These include traditional physical HRI methods that are easily recognizable and various bio-electrical signal-based HRI methods that can visualize and predict actions. However, most of these HRI methods are contact-based, facing challenges such as operational complexity, sensitivity to interference, risks associated with implantable devices, and, most importantly, limitations in comfort. These challenges render the interaction less intuitive and natural, which can negatively impact patient motivation for rehabilitation. To address these issues, this paper proposes a novel non-contact gesture interaction control method for RLEEX, based on RGB monocular camera depth estimation. This method integrates three key steps: detecting keypoints, recognizing gestures, and assessing distance, thereby applying gesture information and augmented reality triggering technology to control gait movements of RLEEX. Results indicate that this approach provides a feasible solution to the problems of poor comfort, low reliability, and high latency in HRI for RLEEX platforms. Specifically, it achieves a gesture-controlled exoskeleton motion accuracy of 94.11% and an average system response time of 0.615 seconds through non-contact HRI. The proposed non-contact HRI method represents a pioneering advancement in control interactions for RLEEX, paving the way for further exploration and development in this field.
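The last two steps named in the abstract (recognizing a gesture, then assessing distance before triggering a gait command) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the gesture labels, the command mapping, the focal length, the palm width and the distance window are hypothetical, and the pinhole similar-triangles estimate stands in for the paper's depth estimation method, which the abstract does not describe.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HandObservation:
    gesture: str        # label from some gesture classifier (hypothetical)
    pixel_width: float  # apparent palm width in the image, in pixels


# Hypothetical mapping from gesture labels to gait commands.
GESTURE_TO_COMMAND = {"open_palm": "stand", "fist": "stop", "point": "walk"}


def estimate_distance_m(pixel_width: float,
                        focal_px: float = 600.0,
                        palm_width_m: float = 0.08) -> float:
    """Pinhole-camera estimate Z = f * W / w (assumed, not the paper's method)."""
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_px * palm_width_m / pixel_width


def select_command(obs: HandObservation,
                   min_m: float = 0.3,
                   max_m: float = 0.8) -> Optional[str]:
    """Return a gait command only when the hand lies inside the distance window."""
    distance = estimate_distance_m(obs.pixel_width)
    if not (min_m <= distance <= max_m):
        return None  # hand too close or too far: ignore the gesture
    return GESTURE_TO_COMMAND.get(obs.gesture)
```

Gating on distance is one plausible way to keep background hands or accidental gestures from triggering a motion; the thresholds would need tuning for a real camera.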

StairNetV3: Depth-aware Stair Modeling using Deep Learning

Aug 13, 2023

Abstract: Vision-based stair perception can help autonomous mobile robots deal with the challenge of climbing stairs, especially in unfamiliar environments. To address the problem that current monocular vision methods struggle to model stairs accurately without depth information, this paper proposes a depth-aware stair modeling method for monocular vision. Specifically, we treat the extraction of stair geometric features and the prediction of depth images as joint tasks in a convolutional neural network (CNN). With the designed information propagation architecture, depth information can effectively supervise stair geometric feature learning. In addition, to complete the stair modeling, we take the convex lines, concave lines, tread surfaces and riser surfaces as stair geometric features and apply Gaussian kernels to enable the network to predict contextual information within the stair lines. Combined with the depth information obtained by depth sensors, we propose a stair point cloud reconstruction method that can quickly obtain the point clouds belonging to the stair step surfaces. Experiments on our dataset show that our method significantly improves on the previous best monocular vision method, with an intersection over union (IOU) increase of 3.4%, and that the lightweight version is fast enough to meet the requirements of most real-time applications. Our dataset is available at https://data.mendeley.com/datasets/6kffmjt7g2/1.
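For readers unfamiliar with the reported metric, intersection over union between a predicted and a ground-truth binary mask can be computed as in the sketch below. The nested-list mask representation and the function name `iou` are assumptions chosen for illustration; the abstract does not say how the authors evaluate it in code.

```python
from typing import Sequence


def iou(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]) -> float:
    """Intersection over union of two equally sized binary masks."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so define their IoU as 1.0.
    return intersection / union if union else 1.0
```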

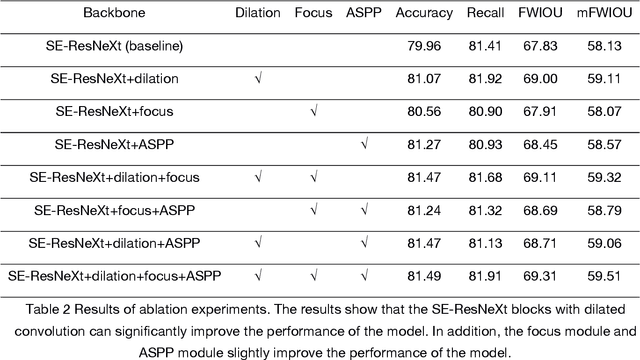

RGB-D-based Stair Detection using Deep Learning for Autonomous Stair Climbing

Dec 09, 2022

Abstract: Stairs are common building structures in urban environments, and stair detection is an important part of environment perception for autonomous mobile robots. Most existing algorithms have difficulty combining the visual information from binocular sensors effectively and ensuring reliable detection at night and when visual cues are extremely blurry. To solve these problems, we propose a neural network architecture with RGB and depth map inputs. Specifically, we design a selective module that lets the network learn the complementary relationship between the RGB map and the depth map and effectively combine the information from both in different scenes. In addition, we design a line clustering algorithm for the postprocessing of detection results, which makes full use of the detection results to obtain the geometric stair parameters. Experiments on our dataset show that our method achieves better accuracy and recall than existing state-of-the-art deep learning methods, by 5.64% and 7.97%, respectively, and that it also has extremely fast detection speed. A lightweight version can achieve 300+ frames per second at the same resolution, which can meet the needs of most real-time detection scenes.
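The idea of selectively combining RGB and depth information can be illustrated with a generic gated fusion: two scores are turned into softmax weights, and the feature vectors are blended accordingly. This is only a sketch under stated assumptions. The abstract does not specify the selective module's design, so the function names, the scalar scores and the plain-list feature vectors here are hypothetical; in a real network the scores would be learned.

```python
import math
from typing import List, Tuple


def softmax2(a: float, b: float) -> Tuple[float, float]:
    """Numerically stable softmax over two scores."""
    m = max(a, b)
    ea, eb = math.exp(a - m), math.exp(b - m)
    total = ea + eb
    return ea / total, eb / total


def fuse(rgb_feat: List[float], depth_feat: List[float],
         rgb_score: float, depth_score: float) -> List[float]:
    """Blend two feature vectors using softmax weights from their scores."""
    w_rgb, w_depth = softmax2(rgb_score, depth_score)
    return [w_rgb * r + w_depth * d for r, d in zip(rgb_feat, depth_feat)]
```

With equal scores the result is a plain average; when one score dominates (for example depth at night), the fused features follow that modality almost entirely.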

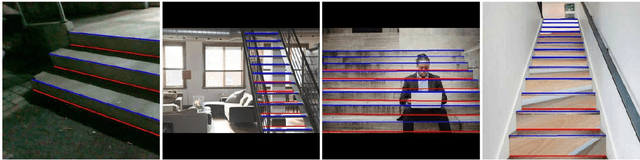

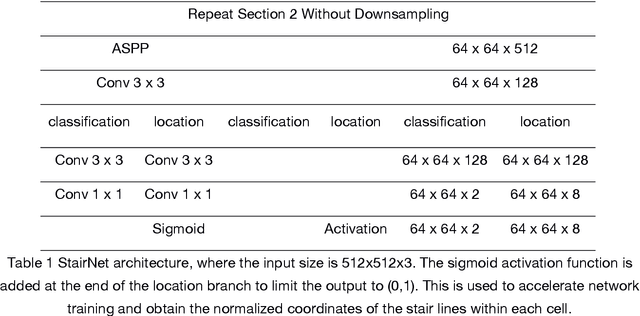

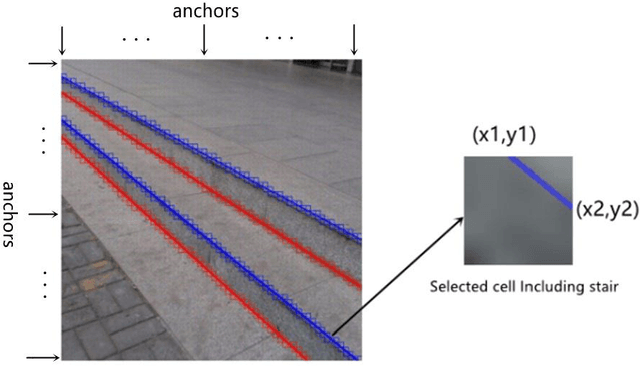

Deep Learning-Based Ultra-Fast Stair Detection

Feb 04, 2022

Abstract: Staircases are some of the most common building structures in urban environments. Stair detection is an important task for various applications, including the environmental perception of exoskeleton robots, humanoid robots, and rescue robots and the navigation of visually impaired people. Most existing stair detection algorithms have difficulty dealing with the diversity of stair structure materials, extreme light and serious occlusion. Inspired by human perception, we propose an end-to-end method based on deep learning. Specifically, we treat the process of stair line detection as a multitask problem involving coarse-grained semantic segmentation and object detection. The input images are divided into cells, and a simple neural network is used to judge whether each cell contains stair lines. For cells containing stair lines, the locations of the stair lines relative to each cell are regressed. Extensive experiments on our dataset show that our method can achieve high performance in terms of both speed and accuracy. A lightweight version can even achieve 300+ frames per second at the same resolution. Our code and dataset will soon be available on GitHub.
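The cell-based formulation above (classify each cell, then regress the line location relative to the cell) implies a decoding step that maps per-cell outputs back to image coordinates. The sketch below shows one way that could look. The output encoding is an assumption: each cell is taken to carry a probability and a vertical offset in [0, 1] measured from the cell's top edge, and `decode_cells` is a hypothetical name, not the authors' code.

```python
from typing import List, Sequence, Tuple


def decode_cells(probs: Sequence[Sequence[float]],
                 offsets: Sequence[Sequence[float]],
                 cell_h: float, cell_w: float,
                 threshold: float = 0.5) -> List[Tuple[float, float]]:
    """Convert per-cell (probability, offset) outputs to (x, y) pixel points."""
    points = []
    for row, (prob_row, offset_row) in enumerate(zip(probs, offsets)):
        for col, (prob, offset) in enumerate(zip(prob_row, offset_row)):
            if prob >= threshold:
                x = (col + 0.5) * cell_w   # horizontal centre of the cell
                y = (row + offset) * cell_h  # offset measured from the cell top
                points.append((x, y))
    return points
```

Because only a coarse grid is classified rather than every pixel, the output is small, which is consistent with the high frame rates the abstract reports.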
