Zhiuxan Liang

From Multi-agent to Multi-robot: A Scalable Training and Evaluation Platform for Multi-robot Reinforcement Learning

Jun 20, 2022

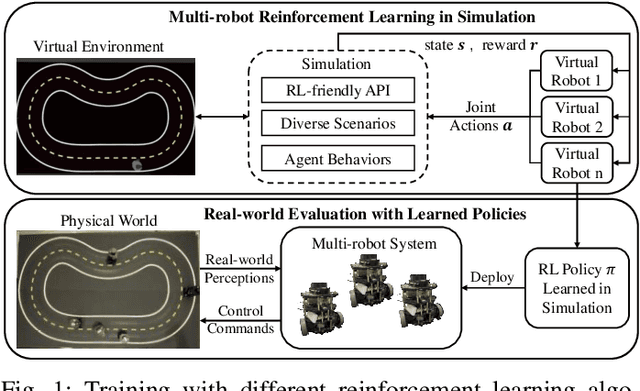

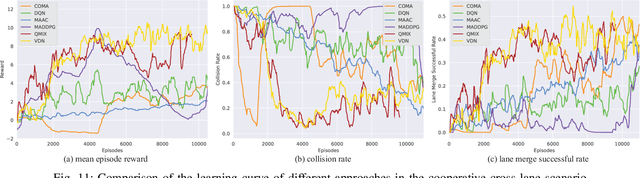

Abstract: Multi-agent reinforcement learning (MARL) has been gaining extensive attention from academia and industry in the past few decades. One of the fundamental problems in MARL is how to evaluate different approaches comprehensively. Most existing MARL methods are evaluated in either video games or simplistic simulated scenarios. It remains unknown how these methods perform in real-world scenarios, especially in multi-robot systems. To meet this need, this paper introduces SMART, a scalable emulation platform for multi-robot reinforcement learning (MRRL). Specifically, SMART consists of two components: 1) a simulation environment that provides a variety of complex interaction scenarios for training and 2) a real-world multi-robot system for realistic performance evaluation. In addition, SMART offers plug-and-play agent-environment APIs for algorithm implementation. To illustrate the practicality of our platform, we conduct a case study on a cooperative driving lane-change scenario. Building on the case study, we summarize several unique challenges of MRRL that have rarely been considered previously. Finally, we open-source the simulation environments, associated benchmark tasks, and state-of-the-art baselines to encourage and empower MRRL research.
