Zhimeng Pan

Review Regularized Neural Collaborative Filtering

Aug 20, 2020

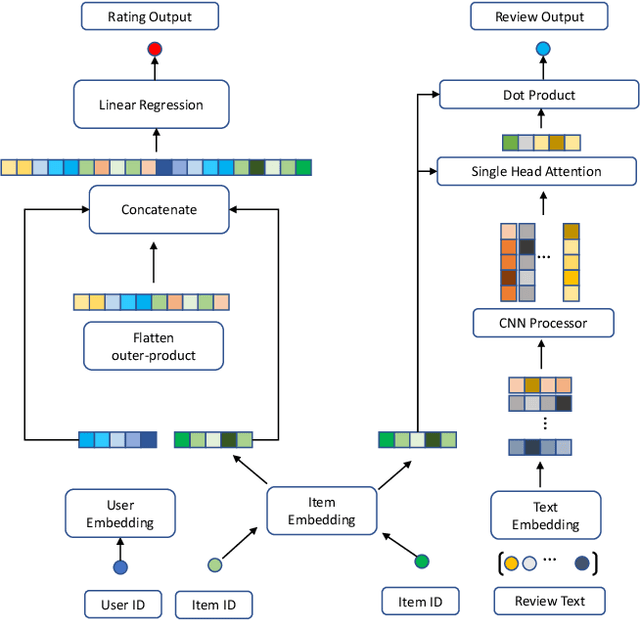

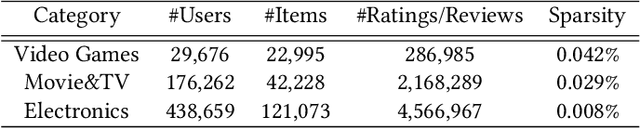

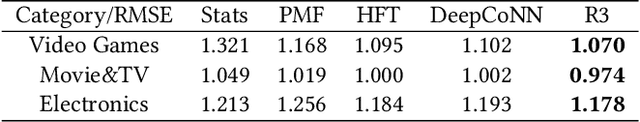

Abstract: In recent years, text-aware collaborative filtering methods have been proposed to address essential challenges in recommendation such as data sparsity, the cold-start problem, and long-tail distributions. However, many of these text-oriented methods rely heavily on the availability of text information for every user and item, an assumption that does not hold in real-world scenarios. Furthermore, network structures designed specifically for text processing are highly inefficient for online serving and are hard to integrate into current systems. In this paper, we propose a flexible neural recommendation framework named Review Regularized Recommendation, or R3 for short. It consists of a neural collaborative filtering part that focuses on prediction output, and a text processing part that serves as a regularizer. This modular design incorporates text information as a richer data source in the training phase while remaining friendly to online serving, as it needs no on-the-fly text processing at serving time. Our preliminary results show that, even with a simple text processing approach, R3 can achieve better prediction performance than state-of-the-art text-aware methods.
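The split between a prediction part and a text regularizer can be illustrated with a small sketch. The abstract does not give the form of either part, so everything here is an assumption made for illustration: a plain dot product stands in for the neural collaborative filtering model, the regularizer is a squared distance between the item embedding and a precomputed text vector, and the names `r3_style_loss`, `predict`, `text_vec`, and `reg_weight` are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length lists of floats."""
    return sum(a * b for a, b in zip(u, v))


def predict(user_vec, item_vec):
    """Serving-time score: uses embeddings only, no text processing.

    Assumption: a dot product stands in for the neural CF model.
    """
    return dot(user_vec, item_vec)


def r3_style_loss(user_vec, item_vec, rating, text_vec=None, reg_weight=0.1):
    """Training loss: squared prediction error plus an optional text penalty.

    Assumption: the regularizer pulls the item embedding toward a
    text-derived vector. It is skipped when no review text is available,
    so items without text still contribute to training.
    """
    loss = (predict(user_vec, item_vec) - rating) ** 2
    if text_vec is not None:
        loss += reg_weight * sum((a - b) ** 2 for a, b in zip(item_vec, text_vec))
    return loss
```

In this sketch the text vector appears only in the training loss, which mirrors the abstract's point that text is used during training but not at serving time.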

Streaming Probabilistic Deep Tensor Factorization

Jul 14, 2020

Abstract: Despite the success of existing tensor factorization methods, most of them conduct a multilinear decomposition, and rarely exploit powerful modeling frameworks, like deep neural networks, to capture a variety of complicated interactions in data. More importantly, for highly expressive, deep factorization, we lack an effective approach to handle streaming data, which are ubiquitous in real-world applications. To address these issues, we propose SPIDER, a Streaming ProbabilistIc Deep tEnsoR factorization method. We first use Bayesian neural networks (NNs) to construct a deep tensor factorization model. We assign a spike-and-slab prior over the NN weights to encourage sparsity and prevent overfitting. We then use Taylor expansions and moment matching to approximate the posterior of the NN output and calculate the running model evidence, based on which we develop an efficient streaming posterior inference algorithm in the assumed-density-filtering and expectation propagation framework. Our algorithm provides responsive incremental updates for the posterior of the latent factors and NN weights upon receiving new tensor entries, and meanwhile selects and inhibits redundant or useless weights. We show the advantages of our approach in four real-world applications.
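Two ingredients named in the abstract, the spike-and-slab prior and Taylor-based moment matching, can be sketched in a few lines. This is a generic illustration and not SPIDER itself. It assumes a spike fixed exactly at zero, a zero-mean Gaussian slab, a first-order expansion, and a `tanh` activation, none of which the abstract specifies. The function names are hypothetical.

```python
import math
import random


def sample_spike_and_slab(rng, inclusion_prob, slab_std):
    """Draw one weight from a spike-and-slab prior.

    Assumption: with probability `inclusion_prob` the weight comes from a
    zero-mean Gaussian slab; otherwise it is exactly zero (the spike).
    """
    if rng.random() < inclusion_prob:
        return rng.gauss(0.0, slab_std)
    return 0.0


def moment_match_tanh(mean, var):
    """Approximate the mean and variance of tanh(x) for x ~ N(mean, var).

    Uses a first-order Taylor expansion around `mean`:
    tanh(x) ~ tanh(mean) + tanh'(mean) * (x - mean).
    """
    out_mean = math.tanh(mean)
    slope = 1.0 - out_mean ** 2  # derivative of tanh evaluated at `mean`
    return out_mean, slope ** 2 * var


rng = random.Random(0)
weights = [sample_spike_and_slab(rng, inclusion_prob=0.3, slab_std=1.0) for _ in range(10)]
approx_mean, approx_var = moment_match_tanh(0.0, 0.5)
```

Matching the first two moments keeps the approximate output Gaussian, which is what lets a filtering-style update be applied one observation at a time.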
