Zhentao Huang

Textured-GS: Gaussian Splatting with Spatially Defined Color and Opacity

Jul 13, 2024

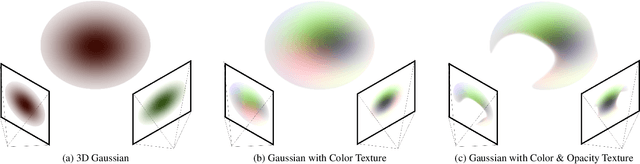

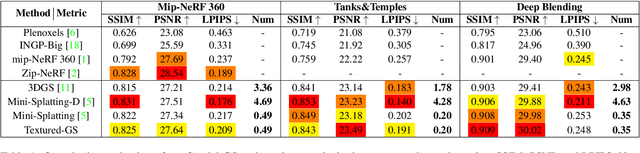

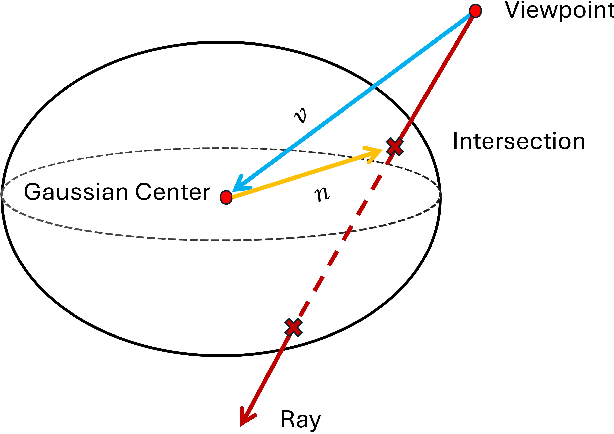

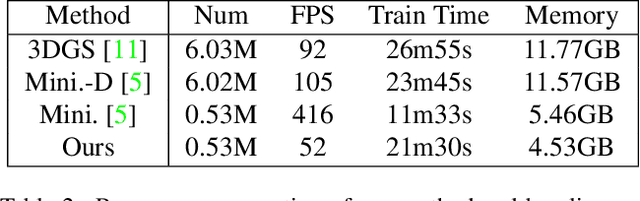

Abstract: In this paper, we introduce Textured-GS, an innovative method for rendering Gaussian splatting that incorporates spatially defined color and opacity variations using Spherical Harmonics (SH). This approach enables each Gaussian to exhibit a richer representation by accommodating varying colors and opacities across its surface, significantly enhancing rendering quality compared to traditional methods. To demonstrate the merits of our approach, we have adapted the Mini-Splatting architecture to integrate textured Gaussians without increasing the number of Gaussians. Our experiments across multiple real-world datasets show that Textured-GS consistently outperforms both the baseline Mini-Splatting and standard 3DGS in terms of visual fidelity. The results highlight the potential of Textured-GS to advance Gaussian-based rendering technologies, promising more efficient and high-quality scene reconstructions.

SealD-NeRF: Interactive Pixel-Level Editing for Dynamic Scenes by Neural Radiance Fields

Feb 21, 2024

Abstract: The widespread adoption of implicit neural representations, especially Neural Radiance Fields (NeRF), highlights a growing need for editing capabilities in implicit 3D models, essential for tasks like scene post-processing and 3D content creation. Despite previous efforts in NeRF editing, challenges remain due to limitations in editing flexibility and quality. The key issue is developing a neural representation that supports local edits for real-time updates. Current NeRF editing methods, offering pixel-level adjustments or detailed geometry and color modifications, are mostly limited to static scenes. This paper introduces SealD-NeRF, an extension of Seal-3D for pixel-level editing in dynamic settings, specifically targeting the D-NeRF network. It allows for consistent edits across sequences by mapping editing actions to a specific timeframe, freezing the deformation network responsible for dynamic scene representation, and using a teacher-student approach to integrate changes.

Visibility-Aware Pixelwise View Selection for Multi-View Stereo Matching

Feb 14, 2023

Abstract: The performance of PatchMatch-based multi-view stereo algorithms depends heavily on the source views selected for computing matching costs. Instead of modeling the visibility of different views, most existing approaches handle occlusions in an ad-hoc manner. To address this issue, we propose a novel visibility-guided pixelwise view selection scheme in this paper. It progressively refines the set of source views to be used for each pixel in the reference view based on visibility information provided by already validated solutions. In addition, the Artificial Multi-Bee Colony (AMBC) algorithm is employed to search for optimal solutions for different pixels in parallel. Inter-colony communication is performed both within the same image and among different images. Fitness rewards are added to validated and propagated solutions, effectively enforcing the smoothness of neighboring pixels and allowing better handling of textureless areas. Experimental results on the DTU dataset show our method achieves state-of-the-art performance among non-learning-based methods and retrieves more details in occluded and low-textured regions.

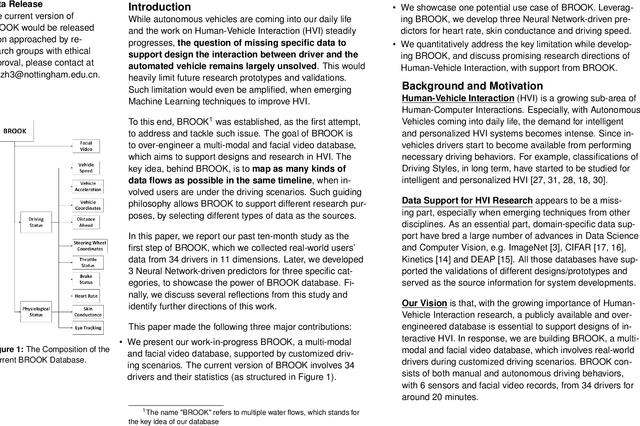

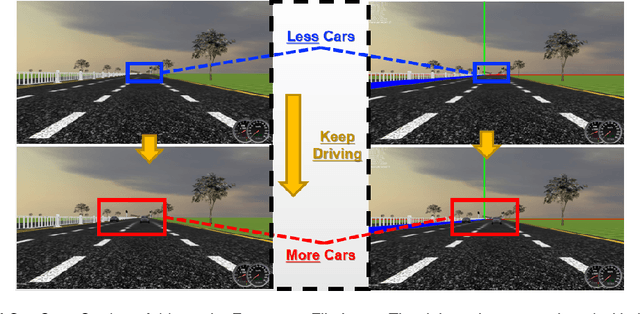

Building BROOK: A Multi-modal and Facial Video Database for Human-Vehicle Interaction Research

May 19, 2020

Abstract: With the growing popularity of Autonomous Vehicles, more opportunities have emerged in the context of Human-Vehicle Interactions. However, the lack of comprehensive and concrete database support for this specific use case limits relevant studies across the whole design space. In this paper, we present our work-in-progress BROOK, a public multi-modal database with facial video records, which could be used to characterize drivers' affective states and driving styles. We first explain in detail how we over-engineer such a database, and what we have gained through a ten-month study. Then we showcase a Neural Network-based predictor, leveraging BROOK, which supports multi-modal prediction (including physiological data of heart rate and skin conductance and driving status data of speed) through facial videos. Finally, we discuss related issues when building such a database and our future directions in the context of BROOK. We believe BROOK is an essential building block for future Human-Vehicle Interaction Research.
