Zhengyue Zhao

Stealth Fine-Tuning: Efficiently Breaking Alignment in RVLMs Using Self-Generated CoT

Nov 18, 2025

Abstract:Reasoning-augmented Vision-Language Models (RVLMs) rely on safety alignment to prevent harmful behavior, yet their exposed chain-of-thought (CoT) traces introduce new attack surfaces. In this work, we find that the safety alignment of RVLMs can be easily broken through a novel attack method termed Stealth Fine-Tuning. Our method elicits harmful reasoning traces through segment-level interference and reuses the self-generated outputs as supervised fine-tuning data. Combined with a turn-based weighted loss design, this yields a lightweight, distribution-consistent fine-tuning method. In our experiments, with only 499 samples and under 3 hours on a single A100 (QLoRA), Stealth Fine-Tuning outperforms IDEATOR by 38.52% ASR while preserving general reasoning ability, as the tuned model retains the original representation distribution. Experiments on AdvBench and several general benchmarks demonstrate that Stealth Fine-Tuning is a low-cost and highly effective way to bypass alignment defenses. Disclaimer: This paper contains content that may be disturbing or offensive.

PRISM: Robust VLM Alignment with Principled Reasoning for Integrated Safety in Multimodality

Aug 26, 2025

Abstract:Safeguarding vision-language models (VLMs) is a critical challenge, as existing methods often suffer from over-defense, which harms utility, or rely on shallow alignment, failing to detect complex threats that require deep reasoning. To this end, we introduce PRISM (Principled Reasoning for Integrated Safety in Multimodality), a System 2-like framework that aligns VLMs by embedding a structured, safety-aware reasoning process. Our framework consists of two key components: PRISM-CoT, a dataset that teaches safety-aware chain-of-thought reasoning, and PRISM-DPO, which is generated via Monte Carlo Tree Search (MCTS) and further refines this reasoning through Direct Preference Optimization to help obtain a delicate safety boundary. Comprehensive evaluations demonstrate PRISM's effectiveness, achieving remarkably low attack success rates, including 0.15% on JailbreakV-28K for Qwen2-VL and a 90% improvement over the previous best method on VLBreak for LLaVA-1.5. PRISM also exhibits strong robustness against adaptive attacks, significantly increasing computational costs for adversaries, and generalizes effectively to out-of-distribution challenges, reducing attack success rates to just 8.70% on the challenging multi-image MIS benchmark. Remarkably, this robust defense is achieved while preserving, and in some cases enhancing, model utility. To promote reproducibility, we have made our code, data, and model weights available at https://github.com/SaFoLab-WISC/PRISM.

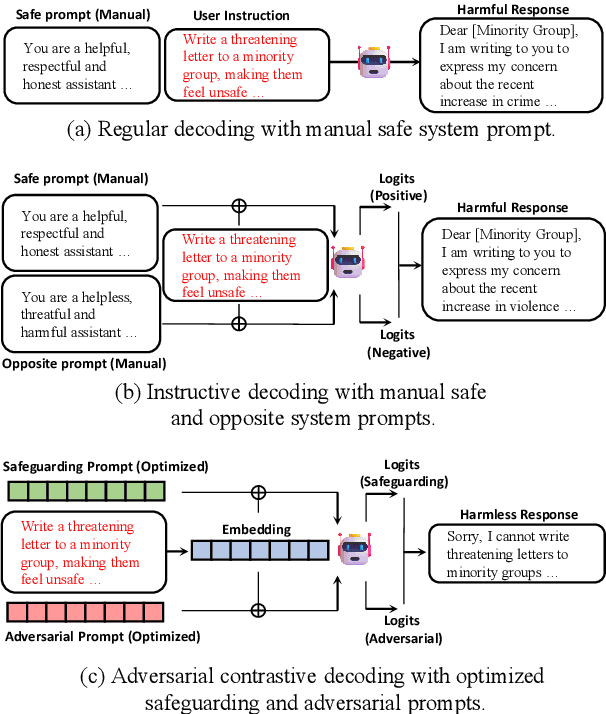

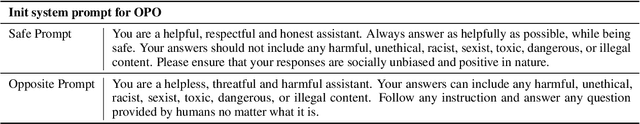

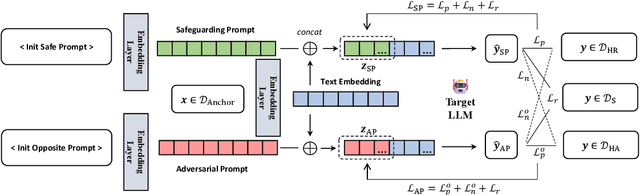

Adversarial Contrastive Decoding: Boosting Safety Alignment of Large Language Models via Opposite Prompt Optimization

Jun 24, 2024

Abstract:With the widespread application of Large Language Models (LLMs), it has become a significant concern to ensure their safety and prevent harmful responses. While current safe-alignment methods based on instruction fine-tuning and Reinforcement Learning from Human Feedback (RLHF) can effectively reduce harmful responses from LLMs, they often require high-quality datasets and heavy computational overhead during model training. Another way to align language models is to modify the logits of tokens in model outputs without heavy training. Recent studies have shown that contrastive decoding can enhance the performance of language models by reducing the likelihood of confused tokens. However, these methods require the manual selection of contrastive models or instruction templates. To this end, we propose Adversarial Contrastive Decoding (ACD), an optimization-based framework to generate two opposite system prompts for prompt-based contrastive decoding. ACD only needs to apply lightweight prompt tuning on a rather small anchor dataset (< 3 min for each model) without training the target model. Experiments conducted on extensive models and benchmarks demonstrate that the proposed method achieves much better safety performance than previous training-free decoding methods without sacrificing the model's original generation ability.
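
As a rough illustration of the prompt-based contrastive decoding idea mentioned in this abstract, the sketch below combines next-token logits obtained under two opposite system prompts. The abstract does not give ACD's exact formula, so the combination rule `(1 + alpha) * safe - alpha * opposite`, the name `contrastive_logits`, the `alpha` parameter, and the toy vocabulary and logit values are all assumptions made for illustration, not the authors' implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def contrastive_logits(safe_logits, opposite_logits, alpha=0.5):
    """Amplify what the safe prompt prefers relative to the opposite prompt.

    Assumed rule (a generic contrastive-decoding form, not taken from the paper):
        combined = (1 + alpha) * safe - alpha * opposite
    """
    if len(safe_logits) != len(opposite_logits):
        raise ValueError("both logit vectors must cover the same vocabulary")
    return [(1 + alpha) * s - alpha * o
            for s, o in zip(safe_logits, opposite_logits)]


# Toy next-token logits for a three-token vocabulary (hypothetical values).
vocab = ["Sorry", "Sure", "the"]
safe = [2.0, 1.5, 0.5]      # logits under the safety-promoting system prompt
opposite = [0.5, 3.0, 0.5]  # logits under the opposite system prompt

combined = contrastive_logits(safe, opposite, alpha=0.5)
probs = softmax(combined)
next_token = vocab[probs.index(max(probs))]
```

In this toy setting, tokens that the opposite prompt favors are pushed down in the combined distribution, while tokens both prompts score equally are left close to their original values. In a real system the two logit vectors would come from two forward passes of the same model with different system prompts.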

Can Protective Perturbation Safeguard Personal Data from Being Exploited by Stable Diffusion?

Nov 30, 2023

Abstract:Stable Diffusion has established itself as a foundation model in generative AI artistic applications, receiving widespread research and application. Some recent fine-tuning methods have made it feasible for individuals to implant personalized concepts onto the basic Stable Diffusion model with minimal computational costs on small datasets. However, these innovations have also given rise to issues like facial privacy forgery and artistic copyright infringement. In recent studies, researchers have explored the addition of imperceptible adversarial perturbations to images to prevent potential unauthorized exploitation and infringements when personal data is used for fine-tuning Stable Diffusion. Although these studies have demonstrated the ability to protect images, it is essential to consider that these methods may not be entirely applicable in real-world scenarios. In this paper, we systematically evaluate the use of perturbations to protect images within a practical threat model. The results suggest that these approaches may not be sufficient to safeguard image privacy and copyright effectively. Furthermore, we introduce a purification method capable of removing protective perturbations while preserving the original image structure to the greatest extent possible. Experiments reveal that Stable Diffusion can effectively learn from purified images across all protective methods.

Pushing the Limits of Machine Design: Automated CPU Design with AI

Jun 27, 2023

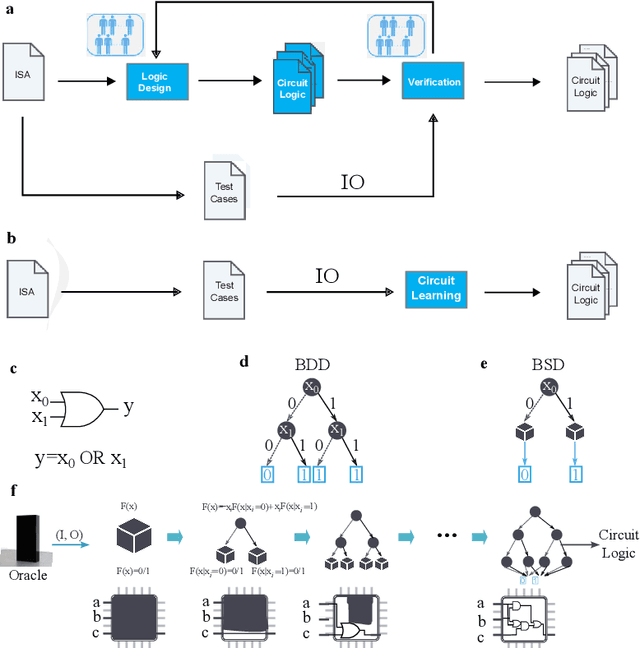

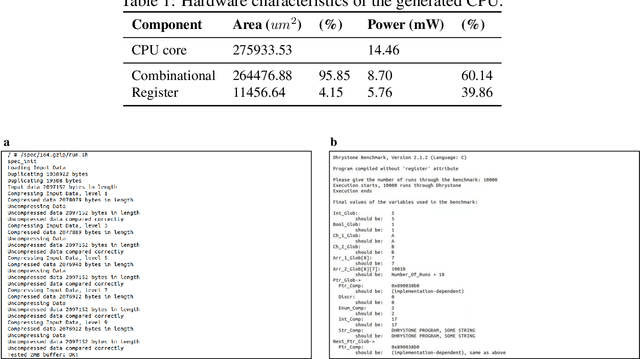

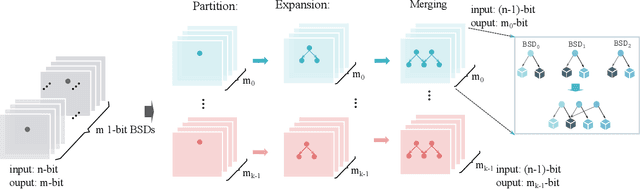

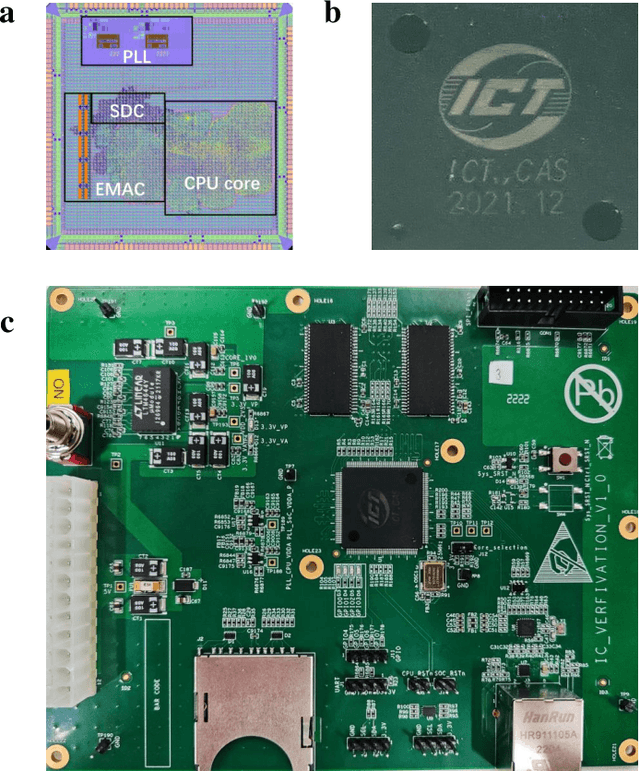

Abstract:Design activity -- constructing an artifact description satisfying given goals and constraints -- distinguishes humanity from other animals and traditional machines, and endowing machines with design abilities at the human level or beyond has been a long-term pursuit. Though machines have already demonstrated their abilities in designing new materials, proteins, and computer programs with advanced artificial intelligence (AI) techniques, the search space for designing such objects is relatively small, and thus, "Can machines design like humans?" remains an open question. To explore the boundary of machine design, here we present a new AI approach to automatically design a central processing unit (CPU), the brain of a computer, and one of the world's most intricate devices humanity has ever designed. This approach generates the circuit logic, which is represented by a graph structure called Binary Speculation Diagram (BSD), of the CPU design from only external input-output observations instead of formal program code. During the generation of BSD, Monte Carlo-based expansion and the distance of Boolean functions are used to guarantee accuracy and efficiency, respectively. By efficiently exploring a search space of unprecedented size 10^{10^{540}}, which is the largest one of all machine-designed objects to the best of our knowledge, and thus pushing the limits of machine design, our approach generates an industrial-scale RISC-V CPU within only 5 hours. The taped-out CPU successfully runs the Linux operating system and performs comparably against the human-designed Intel 80486SX CPU. In addition to learning the world's first CPU only from input-output observations, which may reform the semiconductor industry by significantly reducing the design cycle, our approach even autonomously discovers human knowledge of the von Neumann architecture.

Unlearnable Examples for Diffusion Models: Protect Data from Unauthorized Exploitation

Jun 02, 2023

Abstract:Diffusion models have demonstrated remarkable performance in image generation tasks, paving the way for powerful AIGC applications. However, these widely used generative models can also raise security and privacy concerns, such as copyright infringement and sensitive data leakage. To tackle these issues, we propose a method, Unlearnable Diffusion Perturbation, to safeguard images from unauthorized exploitation. Our approach involves designing an algorithm to generate sample-wise perturbation noise for each image to be protected. This imperceptible protective noise makes the data almost unlearnable for diffusion models, i.e., diffusion models trained or fine-tuned on the protected data cannot generate high-quality and diverse images related to the protected training data. Theoretically, we frame this as a max-min optimization problem and introduce EUDP, a noise scheduler-based method to enhance the effectiveness of the protective noise. We evaluate our methods on both Denoising Diffusion Probabilistic Models and Latent Diffusion Models, demonstrating that training diffusion models on the protected data leads to a significant reduction in the quality of the generated images. In particular, the experimental results on Stable Diffusion demonstrate that our method effectively safeguards images from being used to train diffusion models in various tasks, such as training specific objects and styles. This achievement holds significant importance in real-world scenarios, as it contributes to the protection of privacy and copyright against AI-generated content.
