Zhengran Ji

Pref-GUIDE: Continual Policy Learning from Real-Time Human Feedback via Preference-Based Learning

Aug 10, 2025

Abstract:Training reinforcement learning agents with human feedback is crucial when task objectives are difficult to specify through dense reward functions. While prior methods rely on offline trajectory comparisons to elicit human preferences, such data is unavailable in online learning scenarios where agents must adapt on the fly. Recent approaches address this by collecting real-time scalar feedback to guide agent behavior and train reward models for continued learning after human feedback becomes unavailable. However, scalar feedback is often noisy and inconsistent, limiting the accuracy and generalization of learned rewards. We propose Pref-GUIDE, a framework that transforms real-time scalar feedback into preference-based data to improve reward model learning for continual policy training. Pref-GUIDE Individual mitigates temporal inconsistency by comparing agent behaviors within short windows and filtering ambiguous feedback. Pref-GUIDE Voting further enhances robustness by aggregating reward models across a population of users to form consensus preferences. Across three challenging environments, Pref-GUIDE significantly outperforms scalar-feedback baselines, with the voting variant exceeding even expert-designed dense rewards. By reframing scalar feedback as structured preferences with population feedback, Pref-GUIDE offers a scalable and principled approach for harnessing human input in online reinforcement learning.
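The windowed comparison described in the abstract above can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `scalar_to_preferences`, the `window` and `margin` parameters, and the choice to drop ambiguous pairs entirely are all assumptions made for illustration.

```python
from typing import List, Tuple


def scalar_to_preferences(
    feedback: List[float],
    window: int = 5,    # hypothetical: max index distance (exclusive) between compared steps
    margin: float = 0.1,  # hypothetical: differences at or below this count as ambiguous
) -> List[Tuple[int, int, float]]:
    """Turn a stream of scalar feedback into pairwise preference labels.

    Returns tuples (i, j, label) with i < j, where label is 1.0 if step i
    received higher feedback than step j and 0.0 otherwise. Pairs whose
    feedback differs by no more than `margin` are dropped as ambiguous.
    """
    pairs = []
    n = len(feedback)
    for i in range(n):
        # Compare only against steps that fall inside the short window after i.
        for j in range(i + 1, min(i + window, n)):
            diff = feedback[i] - feedback[j]
            if abs(diff) <= margin:
                continue  # ambiguous pair: filtered out (an assumption)
            pairs.append((i, j, 1.0 if diff > 0 else 0.0))
    return pairs


pairs = scalar_to_preferences([0.0, 0.5, 0.52, -1.0], window=2, margin=0.1)
print(pairs)
```

Restricting comparisons to nearby steps means each pair only relies on the annotator being consistent over a short time span, which is the kind of temporal inconsistency the abstract says the Individual variant targets.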

GUIDE: Real-Time Human-Shaped Agents

Oct 19, 2024

Abstract:The recent rapid advancement of machine learning has been driven by increasingly powerful models with the growing availability of training data and computational resources. However, real-time decision-making tasks with limited time and sparse learning signals remain challenging. One way of improving the learning speed and performance of these agents is to leverage human guidance. In this work, we introduce GUIDE, a framework for real-time human-guided reinforcement learning that enables continuous human feedback and grounds such feedback into dense rewards to accelerate policy learning. Additionally, our method features a simulated feedback module that learns and replicates human feedback patterns in an online fashion, effectively reducing the need for human input while allowing continual training. We demonstrate the performance of our framework on challenging tasks with sparse rewards and visual observations. Our human study involving 50 subjects offers strong quantitative and qualitative evidence of the effectiveness of our approach. With only 10 minutes of human feedback, our algorithm achieves up to a 30% increase in success rate compared to its RL baseline.

Enabling Multi-Robot Collaboration from Single-Human Guidance

Sep 30, 2024

Abstract:Learning collaborative behaviors is essential for multi-agent systems. Traditionally, multi-agent reinforcement learning solves this implicitly through a joint reward and centralized observations, assuming collaborative behavior will emerge. Other studies propose to learn from demonstrations of a group of collaborative experts. Instead, we propose an efficient and explicit way of learning collaborative behaviors in multi-agent systems by leveraging expertise from only a single human. Our insight is that humans can naturally take on various roles in a team. We show that agents can effectively learn to collaborate by allowing a human operator to dynamically switch between controlling agents for a short period and incorporating a human-like theory-of-mind model of teammates. Our experiments showed that our method improves the success rate of a challenging collaborative hide-and-seek task by up to 58% with only 40 minutes of human guidance. We further demonstrate our findings transfer to the real world by conducting multi-robot experiments.

CREW: Facilitating Human-AI Teaming Research

Jul 31, 2024

Abstract:With the increasing deployment of artificial intelligence (AI) technologies, the potential of humans working with AI agents has been growing rapidly. Human-AI teaming is an important paradigm for studying how humans and AI agents work together. The unique aspect of Human-AI teaming research is the need to jointly study humans and AI agents, demanding multidisciplinary research efforts from machine learning to human-computer interaction, robotics, cognitive science, neuroscience, psychology, social science, and complex systems. However, existing platforms for Human-AI teaming research are limited, often supporting oversimplified scenarios and a single task, or specifically focusing on either human-teaming research or multi-agent AI algorithms. We introduce CREW, a platform to facilitate Human-AI teaming research and engage collaborations from multiple scientific disciplines, with a strong emphasis on human involvement. It includes pre-built tasks for cognitive studies and Human-AI teaming, and its modular design allows further tasks to be added. Following conventional cognitive neuroscience research, CREW also supports multimodal human physiological signal recording for behavior analysis. Moreover, CREW benchmarks real-time human-guided reinforcement learning agents using state-of-the-art algorithms and well-tuned baselines. With CREW, we were able to conduct 50 human subject studies within a week to verify the effectiveness of our benchmark.

Electron energy loss spectroscopy database synthesis and automation of core-loss edge recognition by deep-learning neural networks

Sep 26, 2022

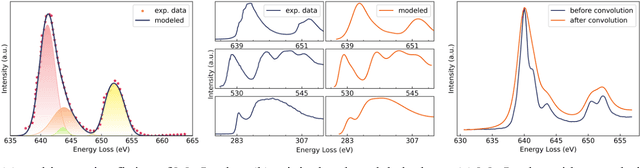

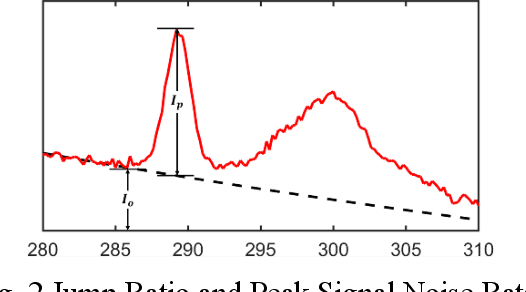

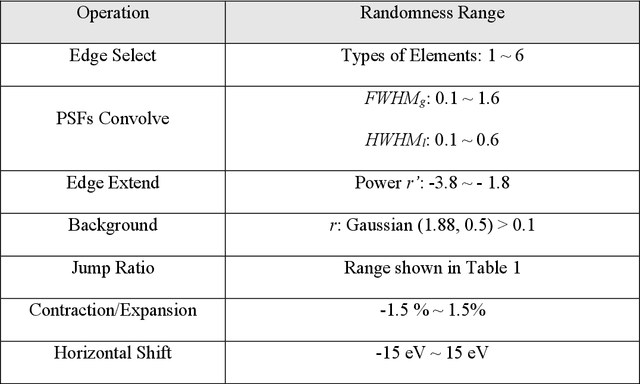

Abstract:The ionization edges encoded in electron energy loss spectroscopy (EELS) spectra enable advanced material analysis, including composition analysis and elemental quantification. The development of the parallel EELS instrument and of fast, sensitive detectors has greatly improved the acquisition speed of EELS spectra. However, the traditional way of core-loss edge recognition is experience based and human labor dependent, which limits the processing speed. So far, the low signal-to-noise ratio and the low jump ratio of the core-loss edges in raw EELS spectra have been challenging for the automation of edge recognition. In this work, a convolutional-bidirectional long short-term memory neural network (CNN-BiLSTM) is proposed to automate the detection and elemental identification of core-loss edges from raw spectra. An EELS spectral database is synthesized by using our forward model to assist in the training and validation of the neural network. To make the synthesized spectra resemble the real spectra, we collected a large library of experimentally acquired EELS core edges. To synthesize the training library, the edges are modeled by fitting a multi-Gaussian model to the real edges from experiments, and noise and instrumental imperfections are simulated and added. The well-trained CNN-BiLSTM network is tested against both the simulated spectra and real spectra collected from experiments. The high accuracy of the network, 94.9%, shows that, without complicated preprocessing of the raw spectra, the proposed CNN-BiLSTM network achieves the automation of core-loss edge recognition for EELS spectra.
